Neural networks have been around for decades, but recent advances in deep learning have made them more powerful than ever before. Neural networks are a class of machine learning models loosely inspired by the structure of the human brain. They are used to recognize patterns and classify data, which makes them useful in a wide range of applications, including image recognition, language translation, and speech recognition.

One of the most important applications of neural networks is classification. Classification is the process of identifying which category a piece of data belongs to. For example, a neural network could be trained to recognize different types of animals based on their pictures. This is a complex task that requires the network to learn the features that distinguish one animal from another. Deep learning has made it possible to build neural networks that are capable of learning these features automatically, without the need for explicit feature engineering.

The Power of Neural Networks for Classification

Neural networks have driven significant advances in classification tasks. They are computational models, loosely inspired by the human brain, that are built from layers of simple interconnected units. They learn from data and can identify complex patterns and relationships that are difficult to capture with traditional algorithms such as linear models.

Neural networks can be used for a variety of classification tasks, including image recognition, speech recognition, and natural language processing. They can handle large amounts of data and can learn from experience, improving their accuracy over time.

One of the key advantages of neural networks is their ability to handle non-linear relationships between inputs and outputs. This is particularly useful in classification tasks where the relationship between input features and output labels can be complex and difficult to model with traditional algorithms.
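XOR is a classic example of a non-linear relationship: no single straight line separates its two classes, yet a network with one small hidden layer can represent it. The sketch below uses hand-picked weights and a hard threshold activation purely for illustration (the names `step` and `xor_network` are hypothetical). In practice the weights would be learned from data rather than set by hand.

```python
def step(z):
    """Hard threshold activation: fires (1) when the input is positive."""
    return 1 if z > 0 else 0


def xor_network(x1, x2):
    """Two hidden units (OR and AND) combined by one output unit give XOR."""
    h_or = step(x1 + x2 - 0.5)    # 1 if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # 1 only if both inputs are 1
    return step(h_or - h_and - 0.5)  # OR but not AND


truth_table = {(a, b): xor_network(a, b) for a in (0, 1) for b in (0, 1)}
print(truth_table)
```

The hidden layer re-describes the inputs as "OR" and "AND" features, and in that new space the classes become linearly separable.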

Another advantage of neural networks is their ability to learn from unstructured data. This is particularly useful when the input is raw text, audio, images, or video rather than a table of hand-crafted features.

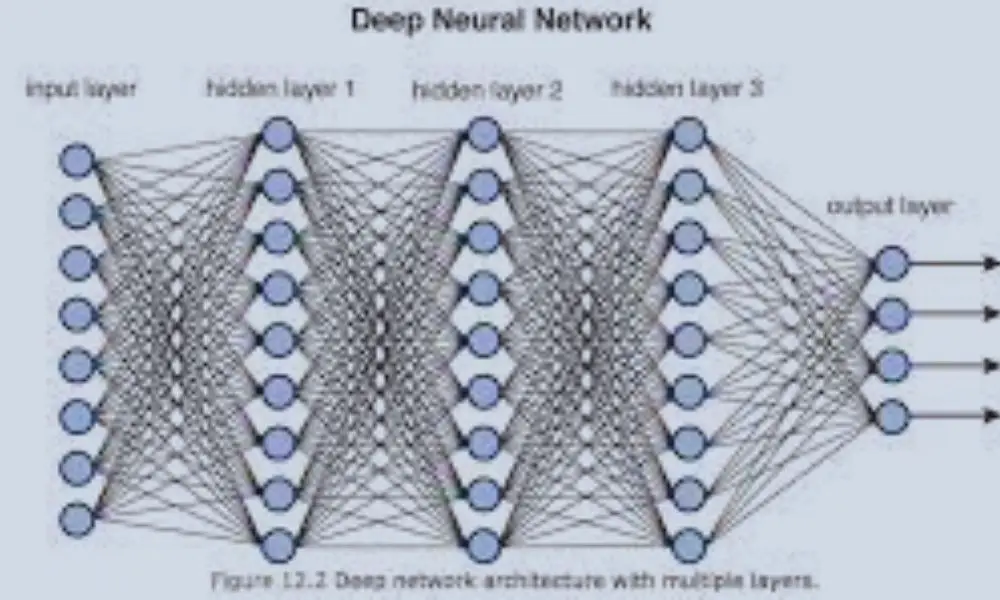

Deep neural networks, in particular, have shown remarkable performance in classification tasks. These networks are composed of multiple layers of interconnected neurons, allowing them to learn more complex representations of the input data. Some recent theoretical work suggests that, under certain assumptions, very deep networks can approach Bayes-optimal classification performance.

In conclusion, neural networks are a powerful tool for classification tasks, enabling significant advancements in fields such as image recognition, speech recognition, and natural language processing. Their ability to handle non-linear relationships and learn from unstructured data makes them an essential tool for modern machine learning applications.

Deep Learning: A Brief Overview

Deep learning is a subset of machine learning that involves the use of artificial neural networks with multiple layers to perform complex tasks such as image and speech recognition, natural language processing, and decision making. Deep learning algorithms learn from large amounts of data and can improve their accuracy over time.

The key advantage of deep learning over traditional machine learning algorithms is its ability to automatically extract features from raw data, eliminating the need for manual feature engineering. This makes deep learning ideal for dealing with complex and unstructured data such as images, video, and audio.

Deep learning has been around for a while, but it wasn’t until the early 2010s that it started to gain widespread attention. This was due in part to the availability of large amounts of data, powerful computing resources, and breakthroughs in neural network architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

CNNs are particularly suited for image and video recognition tasks, while RNNs are ideal for natural language processing and speech recognition. Deep learning models can also be combined with other machine learning techniques such as reinforcement learning to create more powerful models.

Despite its many advantages, deep learning also has some limitations. One major challenge is the need for large amounts of labeled data to train the models. Another challenge is the complexity of the models, which can make them difficult to interpret and explain.

Overall, deep learning has revolutionized the field of machine learning and has the potential to transform many industries such as healthcare, finance, and transportation.

Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are a type of neural network that is particularly well-suited for image classification tasks. They have been used with great success in many applications, including object recognition, face detection, and more.

The key innovation of CNNs is the use of convolutional layers, which allow the network to learn features in an image in a hierarchical manner. These layers operate by applying a set of filters to the input image, producing a set of feature maps that capture different aspects of the image. The filters are learned during training, allowing the network to adapt to the specific features of the input images.
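To make the filtering step concrete, here is a minimal sketch of what a single convolutional filter computes, written in plain Python. It assumes no padding and a stride of 1, and the helper name `conv2d` and the hand-picked edge filter are illustrative only. In a trained CNN the filter weights are learned, and frameworks use heavily optimized implementations.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and return the feature map.

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# A 4x4 image that is dark on the left and bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked filter that responds to a dark-to-bright vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map is non-zero only in the column where the edge sits, which is the sense in which a filter "captures an aspect" of the image.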

CNNs typically consist of several layers of convolutional and pooling operations, followed by one or more fully connected layers. The pooling layers help to reduce the dimensionality of the feature maps, while the fully connected layers perform the actual classification.
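As a rough illustration of how pooling reduces dimensionality, the sketch below implements non-overlapping max pooling in plain Python. The function name `max_pool2d` and the 2x2 window are assumptions chosen for the example. Other pooling variants, such as average pooling, follow the same pattern.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]
pooled = max_pool2d(feature_map)
print(pooled)  # [[4, 2], [2, 8]]
```

A 4x4 map becomes 2x2, so later layers see a quarter as many values while the strongest responses are preserved.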

One of the main advantages of CNNs is their ability to learn features automatically, without the need for manual feature engineering. This makes them well-suited for tasks where the features of interest are not known in advance.

However, CNNs can be computationally expensive to train, especially when dealing with large datasets. To address this issue, researchers have developed a number of techniques for speeding up training, such as using smaller filter sizes, reducing the number of parameters in the network, and using pre-trained models.

Overall, CNNs are a powerful tool for image classification, and have been used with great success in many applications. However, they are not a silver bullet, and their performance can be highly dependent on the specific task and dataset at hand.

Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are a type of artificial neural network that can process sequential data. They are especially useful for time-series data, natural language processing, and speech recognition. Unlike traditional feedforward neural networks, RNNs can maintain information about previous inputs in their internal memory, allowing them to make predictions based on context.

One of the key features of RNNs is their ability to handle variable-length sequences. This is possible because the same weights are applied at every time step, while a hidden state carries information about previous inputs forward and is used when processing later inputs. This makes RNNs well-suited for tasks such as speech recognition, where the length of the input sequence can vary.
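A minimal sketch of this idea is a recurrent network with a single hidden unit. The weights `w_in`, `w_rec`, and `bias` below are arbitrary illustrative values, not trained parameters, and the function name is hypothetical. The same three numbers are reused at every step, so the loop works for a sequence of any length.

```python
import math


def rnn_final_state(sequence, w_in=0.5, w_rec=0.8, bias=0.0):
    """Run a one-unit recurrent network over a sequence and return the last hidden state."""
    h = 0.0  # hidden state: the network's memory of what it has seen so far
    for x in sequence:
        # The new state depends on the current input and the previous state.
        h = math.tanh(w_in * x + w_rec * h + bias)
    return h


short = rnn_final_state([1.0])
longer = rnn_final_state([0.2, -0.4, 1.0, 0.3, 0.9])
print(short, longer)
```

A classifier could then be applied to the final hidden state, regardless of how many inputs were consumed to produce it.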

One of the most popular types of RNNs is the Long Short-Term Memory (LSTM) network. LSTMs are designed to address the problem of vanishing gradients, which can occur when training RNNs. The LSTM architecture includes gates that control the flow of information through the network, allowing it to selectively remember or forget information based on its relevance to the task at hand.
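The gating mechanism can be sketched for a single LSTM unit with scalar weights. This follows the standard LSTM update equations. The parameter layout (a dictionary mapping each gate name to an input weight, a recurrent weight, and a bias) is an assumption made for readability, and real implementations operate on vectors and matrices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM.

    params maps each of "forget", "input", "output", "candidate"
    to a tuple (w_x, w_h, b). This layout is illustrative only.
    """
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))    # how much old memory to keep
    i = sigmoid(pre_activation("input"))     # how much new content to write
    o = sigmoid(pre_activation("output"))    # how much memory to expose
    g = math.tanh(pre_activation("candidate"))  # proposed new content

    c = f * c_prev + i * g   # updated cell state
    h = o * math.tanh(c)     # updated hidden state
    return h, c


# Biases chosen so the unit keeps its memory and ignores the new input.
remember = {
    "forget": (0.0, 0.0, 100.0),
    "input": (0.0, 0.0, -100.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}
h, c = lstm_step(x=5.0, h_prev=0.0, c_prev=2.0, params=remember)
print(c)  # stays very close to 2.0
```

Because the cell state is updated additively and scaled by the forget gate, gradients can flow through many time steps with less shrinkage than in a plain RNN.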

Another popular type of RNN is the Gated Recurrent Unit (GRU) network. GRUs are similar to LSTMs in that they use gates to control the flow of information through the network. However, they are simpler than LSTMs and have fewer parameters, which often makes them faster to train and can make them less prone to overfitting on smaller datasets.

Overall, RNNs are a powerful tool for processing sequential data. They are widely used in natural language processing, speech recognition, and time-series analysis. With the advent of deep learning, RNNs have become even more powerful, allowing researchers to tackle more complex problems than ever before.

Multilayer Perceptron

The Multilayer Perceptron (MLP) is a type of feedforward artificial neural network that is widely used for supervised learning, specifically for classification tasks. It is composed of multiple layers of nodes, with each layer connected to the next, forming a network of interconnected nodes. The MLP has at least three layers: an input layer, one or more hidden layers, and an output layer.

The input layer consists of nodes that receive input data and pass it on to the next layer. The hidden layers are composed of nodes that perform computations on the input data, using activation functions to determine the output of each node. The output layer consists of nodes that produce the final output of the network, which is a predicted class label for the input data.
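The flow from input layer to hidden layer to output layer can be sketched as a forward pass in plain Python. The choice of ReLU for the hidden layer and softmax for the output layer is an assumption (the text does not name specific activation functions), and the weights are arbitrary illustrative values rather than trained ones.

```python
import math


def relu(z):
    return max(0.0, z)


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """Fully connected layer: weights[j] holds the incoming weights of node j."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def mlp_predict(x, hidden_w, hidden_b, out_w, out_b):
    """Return (predicted class index, class probabilities) for one input vector."""
    hidden = [relu(z) for z in dense(x, hidden_w, hidden_b)]
    probs = softmax(dense(hidden, out_w, out_b))
    return probs.index(max(probs)), probs


label, probs = mlp_predict(
    x=[1.0, 2.0],
    hidden_w=[[1.0, -1.0], [0.5, 0.5]],
    hidden_b=[0.0, 0.0],
    out_w=[[1.0, 0.0], [0.0, 1.0]],
    out_b=[0.0, 0.0],
)
print(label, probs)
```

Here the first hidden node receives a negative sum and is zeroed by ReLU, the second outputs 1.5, and the output layer therefore assigns the higher probability to class 1.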

MLPs are capable of learning non-linear decision boundaries and can handle complex input-output mappings. They are trained using backpropagation, a supervised learning algorithm that adjusts the weights of the connections between nodes in the network to minimize the error between the predicted output and the actual output.

MLPs have several advantages, including:

- Capability to learn non-linear models

- Capability to learn models incrementally (online learning), for example with the partial_fit method in scikit-learn

- Ability to handle large datasets

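- Ability to handle input data with missing values, provided the gaps are imputed or encoded during preprocessing (an MLP cannot consume missing entries directly)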

However, MLPs also have some disadvantages, including:

- Non-convex loss function with multiple local minima

- Susceptibility to overfitting if the network is too complex or the training data is insufficient

- Difficulty in determining the optimal number of hidden layers and nodes

Overall, the Multilayer Perceptron is a powerful tool for classification tasks and is widely used in various fields, including computer vision, natural language processing, and finance.

Choosing the Right Neural Network for Your Data

When it comes to using Neural Networks for classification, choosing the right neural network for your data is crucial. Not all neural networks are created equal, and each type has its strengths and weaknesses. Here are a few things to consider when choosing the right neural network for your data:

Size of the Dataset

The size of your dataset can greatly influence the type of neural network you should use. If you have a small dataset, a simpler neural network with fewer layers may be more appropriate. On the other hand, if you have a large dataset, a more complex neural network with more layers may be necessary to achieve optimal results.

Complexity of the Data

The complexity of the data you are working with is another important factor to consider. If the data is relatively simple, such as a table of a few numeric features, a basic feedforward network may suffice. However, if the data has spatial structure (as images do) or sequential structure (as text and time series do), a convolutional neural network or a recurrent neural network may be more appropriate.

Type of Data

The type of data you are working with can also influence the type of neural network you should use. For example, if you are working with image data, a convolutional neural network may be the best choice. If you are working with sequential data, such as text or time-series data, a recurrent neural network may be more appropriate.

Available Resources

The resources you have available, such as computing power and time, can also influence the type of neural network you should use. More complex neural networks require more computing power and can take longer to train. If you have limited resources, a simpler neural network may be a more practical choice.

In conclusion, choosing the right neural network for your data is a critical step in achieving optimal results. By considering the size of your dataset, the complexity of your data, the type of data you are working with, and the resources you have available, you can select the neural network that is best suited for your needs.

Training Neural Networks

Training neural networks is a crucial step in building effective deep learning models for classification tasks. It involves optimizing the model’s parameters to minimize the difference between the predicted output and the actual output.

Neural networks are most commonly trained with backpropagation combined with gradient descent. Backpropagation calculates the gradient of the loss function with respect to the model’s parameters, and a gradient-based optimizer then updates the parameters in the direction that reduces the loss. This process is repeated iteratively until the loss stops improving or the model’s performance is satisfactory.
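The sketch below walks through this loop on the smallest network that still needs the chain rule: one tanh hidden unit feeding one sigmoid output unit, trained on a single example with binary cross-entropy loss. The starting parameters, learning rate, and function names are illustrative assumptions. Frameworks compute these gradients automatically.

```python
import math


def forward(params, x):
    """Return the hidden activation and the predicted probability."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    p = 1.0 / (1.0 + math.exp(-(w2 * h + b2)))
    return h, p


def loss(params, x, y):
    """Binary cross-entropy for one example with label y in {0, 1}."""
    _, p = forward(params, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def backprop(params, x, y):
    """Gradient of the loss with respect to [w1, b1, w2, b2] via the chain rule."""
    w1, b1, w2, b2 = params
    h, p = forward(params, x)
    dz2 = p - y                       # gradient at the output pre-activation
    dw2, db2 = dz2 * h, dz2
    dz1 = dz2 * w2 * (1.0 - h * h)    # propagate back through tanh
    dw1, db1 = dz1 * x, dz1
    return [dw1, db1, dw2, db2]


def gradient_step(params, grads, lr=0.1):
    """Move each parameter a small step against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]


params = [0.5, -0.3, 0.8, 0.1]
x, y = 1.5, 1.0
losses = []
for _ in range(50):
    losses.append(loss(params, x, y))
    params = gradient_step(params, backprop(params, x, y))

print(losses[0], losses[-1])  # the loss shrinks as training proceeds
```

A common sanity check for hand-written gradients is to compare them against finite differences of the loss. The two should agree to several decimal places.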

To prevent overfitting, regularization techniques such as dropout and weight decay are often used during training. Dropout randomly drops out a proportion of the neurons during each training iteration, forcing the model to learn more robust features. Weight decay adds a penalty term to the loss function to encourage the model to use smaller weights, preventing it from fitting too closely to the training data.
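Both techniques are short enough to sketch directly. The dropout function below uses the common "inverted dropout" convention, which rescales surviving activations so no adjustment is needed at prediction time. That convention is an assumption, as the text does not specify a variant. The weight decay function assumes the penalty is half the weight decay coefficient times the sum of squared weights, so its gradient is simply the coefficient times each weight. All names and default values are illustrative.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return list(activations)  # dropout is disabled at prediction time
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def step_with_weight_decay(weights, grads, lr=0.1, weight_decay=0.01):
    """Gradient step on loss + (weight_decay / 2) * sum(w ** 2)."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


rng = random.Random(0)  # seeded so the example is repeatable
print(dropout([1.0, 2.0, 3.0, 4.0], rate=0.5, rng=rng))

# With zero data gradient, weight decay alone pulls the weights toward zero.
print(step_with_weight_decay([1.0, -2.0], [0.0, 0.0]))
```

With a rate of 0.5, each activation is either dropped to zero or doubled, so the expected value of every activation is unchanged.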

In addition to these techniques, pre-training can be used to initialize the model’s parameters before fine-tuning them on the classification task. Pre-training involves training the model on a related task, such as unsupervised feature learning, to learn generalizable features that can be used for the classification task.

Overall, training neural networks requires careful consideration of various techniques and parameters to ensure the model learns effective features and generalizes well to new data.

Evaluating Neural Network Performance

Evaluating the performance of a neural network is crucial to ensure that it is functioning optimally. There are several metrics that can be used to evaluate the performance of a neural network, including accuracy, precision, recall, F1 score, and confusion matrix.

Accuracy: The accuracy of a neural network is the ratio of correctly classified data points to the total number of data points. It is a simple and commonly used metric to evaluate the performance of a neural network.

Precision: Precision is the ratio of true positives to the sum of true positives and false positives. It measures the proportion of correctly classified positive instances out of all instances classified as positive.

Recall: Recall is the ratio of true positives to the sum of true positives and false negatives. It measures the proportion of correctly classified positive instances out of all actual positive instances.

F1 Score: The F1 score is the harmonic mean of precision and recall. It is a useful metric when the dataset is imbalanced.

Confusion Matrix: A confusion matrix is a table that summarizes the performance of a classification algorithm. It shows the number of true positives, true negatives, false positives, and false negatives.
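The definitions above translate directly into code for the binary case. The sketch below is a plain-Python illustration with made-up labels. The function names are hypothetical, and returning 0.0 when a denominator is zero is one possible convention, not the only one.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 as a dictionary."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up labels for illustration: 4 actual positives, 4 actual negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 0, 0, 1, 0, 0, 1]

print(confusion_counts(y_true, y_pred))        # (2, 1, 2, 3)
print(classification_metrics(y_true, y_pred))
```

In this example accuracy is 0.625, while recall is only 0.5 because half of the actual positives were missed, which shows why a single metric rarely tells the whole story.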

In addition to these metrics, it is important to evaluate the performance of a neural network on a validation dataset. This helps to identify overfitting and ensures that the neural network can generalize well to new data.

Overall, evaluating the performance of a neural network is essential to ensure that it is functioning optimally and can generalize well to new data. By using a combination of metrics and validation datasets, we can gain insights into the strengths and weaknesses of the neural network and make improvements where necessary.

Challenges and Limitations of Neural Networks for Classification

Although neural networks have been successfully applied to various classification tasks, they are not without their challenges and limitations. Here are some of the most significant ones:

- Data requirements: Neural networks require large amounts of data to train effectively. Small datasets may lead to overfitting, where the model memorizes the training data instead of learning the underlying patterns, resulting in poor generalization to new data.

- Computational resources: Deep neural networks with many layers and parameters require significant computational resources to train. This can be a bottleneck for researchers or organizations without access to high-performance computing clusters or cloud computing resources.

- Hyperparameter tuning: Neural networks have many hyperparameters that need to be tuned, such as learning rate, batch size, and regularization strength. Finding the optimal values for these hyperparameters can be time-consuming and requires expertise.

- Interpretability: Neural networks are often referred to as “black boxes” because it can be challenging to understand how they arrive at their predictions. This lack of interpretability can be a significant limitation in applications where transparency and accountability are essential.

- Limited applicability: Although neural networks have shown remarkable success in image and speech recognition, natural language processing, and other domains, they may not be the best choice for all classification tasks. For example, decision trees or logistic regression may be more interpretable and easier to train for some problems.

In conclusion, while neural networks have revolutionized the field of classification, they are not a silver bullet. Researchers and practitioners need to be aware of the challenges and limitations of neural networks and choose appropriate methods for their specific problems.

Frequently Asked Questions

What is the difference between shallow and deep neural networks for classification?

Shallow neural networks typically have only one hidden layer, while deep neural networks have multiple hidden layers. Deep neural networks are generally better at learning complex, hierarchical features and patterns in data, which makes them more suitable for complex classification problems when enough training data is available.

What are the advantages of using neural networks for classification over traditional machine learning algorithms?

Neural networks have the ability to learn from large amounts of data and can identify complex patterns that traditional machine learning algorithms may miss. They are also capable of handling high-dimensional data and can adapt to new data, making them more versatile.

How can overfitting be avoided when training neural networks for classification?

Overfitting occurs when a neural network learns the training data too well and fails to generalize to new data. Regularization techniques, such as L1 and L2 regularization, dropout, and early stopping, can be used to prevent overfitting.
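Of these techniques, early stopping is the simplest to sketch: training halts once the validation loss has not improved for a set number of epochs. The function below operates on a pre-recorded list of validation losses so that it stays self-contained. The name `early_stopping`, the `patience` parameter, and the loss values are illustrative assumptions.

```python
def early_stopping(val_losses, patience=2):
    """Return (best_epoch, stopped_epoch) for a sequence of validation losses.

    Training stops once `patience` consecutive epochs fail to improve
    on the best validation loss seen so far.
    """
    best_loss = float("inf")
    best_epoch = -1
    stopped_epoch = -1
    waited = 0
    for epoch, val_loss in enumerate(val_losses):
        stopped_epoch = epoch
        if val_loss < best_loss:
            best_loss, best_epoch, waited = val_loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch, stopped_epoch


# Made-up validation losses: improvement stalls after epoch 2.
val_losses = [0.90, 0.70, 0.60, 0.65, 0.62, 0.50]
best_epoch, stopped_epoch = early_stopping(val_losses, patience=2)
print(best_epoch, stopped_epoch)  # 2 4
```

In a real training loop the model weights from the best epoch would be saved and restored after stopping. Note that with a patience of 2, the later improvement at epoch 5 is never seen, which is the trade-off a larger patience value addresses.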

What are some common challenges when using neural networks for classification?

Some common challenges include selecting the appropriate architecture and hyperparameters, dealing with imbalanced data, and avoiding overfitting. Additionally, neural networks can be computationally expensive and require large amounts of data to train effectively.

What are some real-world applications of neural networks for classification?

Neural networks are used in a variety of applications, including image and speech recognition, natural language processing, fraud detection, and recommender systems.

What are some techniques for optimizing the performance of neural networks for classification?

Techniques such as batch normalization, weight initialization, and adaptive learning rates can be used to optimize the performance of neural networks. Additionally, using transfer learning and ensembling can improve the accuracy of the model.