
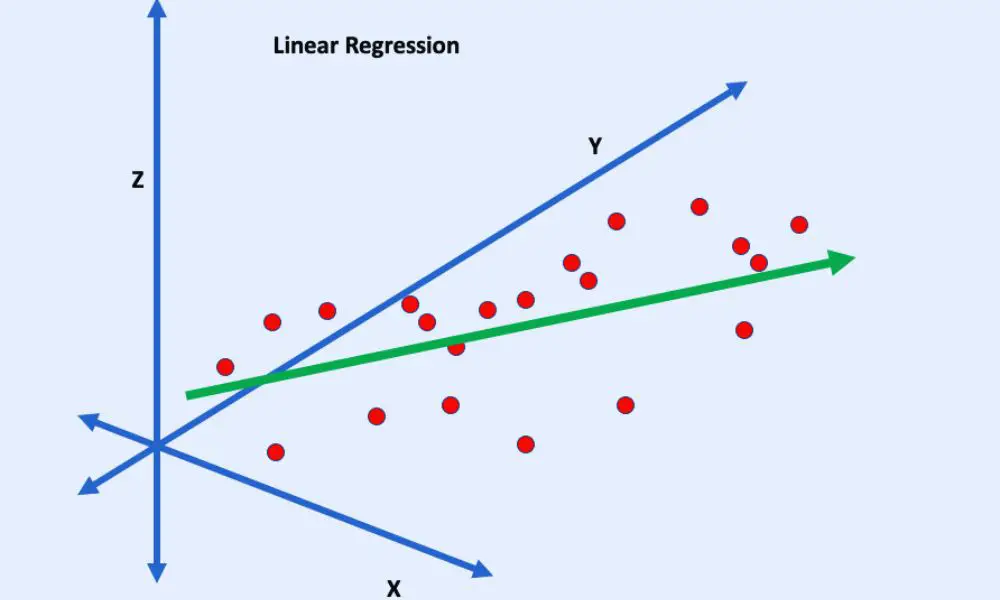

Linear regression is a statistical method used to model the relationship between a continuous dependent variable and one or more independent variables. It is one of the simplest and most widely used predictive modeling techniques. By fitting a linear equation to a dataset, linear regression allows us to make predictions about new or future observations based on past ones.

The basic idea behind linear regression is to find the line of best fit that represents the relationship between the two variables. This line can then be used to make predictions about future values of the dependent variable based on the values of the independent variable. Linear regression is commonly used in fields such as finance, economics, and marketing to make predictions about consumer behavior, market trends, and financial performance. It is also used in scientific research to model the relationship between variables in experiments.

In simple linear regression, there is only one independent variable, whereas, in multiple linear regression, there are two or more independent variables. The equation of a simple linear regression can be represented as:

Y = β0 + β1*X + ε

Where:

- Y is the dependent variable or the variable we want to predict.

- X is the independent variable or the variable used to predict Y.

- β0 is the y-intercept or the value of Y when X is zero.

- β1 is the slope or the change in Y per unit change in X.

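- ε is the error term: the part of Y that the linear relationship with X does not explain. Its estimate for each observation, the residual, is the difference between the observed and predicted values.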

The coefficients β0 and β1 are estimated with the least squares method, which chooses the values that minimize the sum of squared differences between the observed and predicted values of Y. For simple linear regression this has a closed-form solution: the slope estimate is the sample covariance of X and Y divided by the sample variance of X, and the intercept estimate is the mean of Y minus the slope estimate times the mean of X. Once the coefficients are estimated, the model can be used to make predictions on new data by plugging in the values of the independent variable.
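To make the least squares step concrete, here is a minimal sketch in plain Python. The function names `fit_simple_ols` and `predict` and the sample data are illustrative assumptions, not part of any library, and the data is constructed so that Y = 1 + 2*X exactly.

```python
def fit_simple_ols(x, y):
    """Estimate the intercept (b0) and slope (b1) by ordinary least squares."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope: co-variation of x and y divided by the variation of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must contain at least two distinct values")
    b1 = sxy / sxx
    # Intercept: the fitted line passes through (mean_x, mean_y).
    b0 = mean_y - b1 * mean_x
    return b0, b1


def predict(b0, b1, x_new):
    """Plug a new x value into the fitted line."""
    return b0 + b1 * x_new


# Hypothetical data lying exactly on the line Y = 1 + 2*X.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [3.0, 5.0, 7.0, 9.0, 11.0]

b0, b1 = fit_simple_ols(x, y)
print(f"intercept={b0:.2f}, slope={b1:.2f}")
print(f"prediction at X=6: {predict(b0, b1, 6.0):.2f}")
```

With real, noisy data the fitted line will not pass through every point, and the gaps between the observed and predicted values are the residuals.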

The quality of a linear regression model can be assessed using various metrics, such as the coefficient of determination (R-squared), which measures the proportion of the variance in the dependent variable that can be explained by the independent variables. Other metrics quantify the size of the prediction error: the mean squared error (MSE) is the average of the squared differences between predicted and observed values, and the root mean squared error (RMSE) is its square root, expressed in the same units as the dependent variable.
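These metrics are straightforward to compute by hand. The sketch below assumes two equal-length lists of observed and predicted values; the helper name `regression_metrics` and the numbers are made up for illustration.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, and R-squared for paired observed/predicted values."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_y = sum(y_true) / n
    # Residual sum of squares: variation the model fails to explain.
    ss_res = sum((yt - yp) ** 2 for yt, yp in zip(y_true, y_pred))
    # Total sum of squares: variation of the observed values around their mean.
    ss_tot = sum((yt - mean_y) ** 2 for yt in y_true)
    if ss_tot == 0:
        raise ValueError("R-squared is undefined when y_true is constant")
    mse = ss_res / n
    return {"mse": mse, "rmse": math.sqrt(mse), "r2": 1 - ss_res / ss_tot}


# Hypothetical observed and predicted values.
observed = [3.0, 5.0, 7.0, 9.0]
predicted = [2.0, 5.0, 8.0, 9.0]

metrics = regression_metrics(observed, predicted)
print(metrics)
```

Here two of the four predictions are off by one unit, so the MSE is 0.5 and the model explains 90% of the variance in the observed values.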

Predictive Modeling Basics

Predictive modeling is a data analysis technique that uses statistical algorithms and machine learning techniques to predict future outcomes based on historical data. It is a powerful tool that can be used to make informed decisions and improve business operations. The process involves building a model using historical data, testing the model’s accuracy, and then using it to make predictions on new data.

Linear regression is a popular predictive modeling technique that uses a linear relationship between the input variables and the output variable to make predictions. It is a simple but effective method that can be used to model a wide range of phenomena, from stock prices to weather patterns. The basic idea behind linear regression is to find the line of best fit that describes the relationship between the input and output variables.

There are two types of linear regression: simple linear regression and multiple linear regression. Simple linear regression involves one input variable and one output variable, while multiple linear regression involves multiple input variables and one output variable. In the simple case the fitted model is a line; with several input variables it is a plane or, more generally, a hyperplane. In both cases, the goal is to find the fit that minimizes the sum of the squared errors between the predicted values and the actual values.
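For the multiple-variable case, one way to minimize the sum of squared errors is to solve the normal equations (XᵀX)b = Xᵀy. The sketch below does this in plain Python with a small Gaussian elimination routine. The function names and data are illustrative assumptions, and in practice a numerical library with a more stable solver (such as a QR-based least squares routine) would usually be preferred.

```python
def solve_linear_system(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are not modified.
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: predictors may be collinear")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_multiple_ols(rows, y):
    """Return [b0, b1, ..., bp] for predictor rows and responses y."""
    # Prepend a constant 1 to each row so b0 acts as the intercept.
    design = [[1.0] + list(r) for r in rows]
    p = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(p)] for i in range(p)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(p)]
    return solve_linear_system(xtx, xty)


# Hypothetical data generated from y = 1 + 2*x1 + 3*x2 with no noise.
rows = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [5.0, 6.0]]
y = [9.0, 8.0, 19.0, 18.0, 29.0]

coefs = fit_multiple_ols(rows, y)
print([round(c, 6) for c in coefs])
```

Because the data is noise-free, the recovered coefficients match the generating values 1, 2, and 3 up to floating-point rounding.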

It is important to choose the right technique for the problem at hand and to carefully analyze the data before building a model. With the right approach, predictive modeling can be used to gain valuable insights and make accurate predictions about future outcomes.

Linear regression has several assumptions that need to be met for the model to be valid. These assumptions include linearity, independence of errors, constant variance (homoscedasticity), and normality of errors. Violation of these assumptions may lead to biased or inefficient estimates and inaccurate predictions.
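Some of these assumptions can be checked numerically from the residuals. As one example, the sketch below computes the Durbin-Watson statistic, a common check for first-order correlation between successive errors when observations are ordered (for instance, in time). The function name and the residual values are illustrative assumptions, and this covers only the independence assumption; the others are usually checked with residual plots or separate tests.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals listed in observation order.

    Values near 2 suggest no first-order autocorrelation; values toward 0
    suggest positive autocorrelation and values toward 4 suggest negative.
    """
    if len(residuals) < 2:
        raise ValueError("need at least two residuals")
    denom = sum(e ** 2 for e in residuals)
    if denom == 0:
        raise ValueError("all residuals are zero")
    # Sum of squared differences between consecutive residuals.
    numer = sum((residuals[t] - residuals[t - 1]) ** 2
                for t in range(1, len(residuals)))
    return numer / denom


# Hypothetical residuals that alternate in sign (negative autocorrelation).
alternating = [1.0, -1.0, 1.0, -1.0]
# Hypothetical residuals that drift slowly (positive autocorrelation).
drifting = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]

dw_alternating = durbin_watson(alternating)
dw_drifting = durbin_watson(drifting)
print(dw_alternating, dw_drifting)
```

The alternating residuals give a value above 2 and the drifting ones a value well below 2, which is the pattern the statistic is designed to flag.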

Despite its simplicity, linear regression remains a powerful tool in predictive modeling. It provides interpretable and intuitive insights into the relationship between variables and allows for straightforward predictions. Moreover, it serves as the foundation for more complex regression techniques and machine learning algorithms. By understanding the basics of linear regression, one can build a solid understanding of predictive modeling and leverage its capabilities for a wide range of applications.

Frequently Asked Questions

What is the difference between simple and multiple linear regression?

Simple linear regression is used to model the relationship between a dependent variable and a single independent variable. Multiple linear regression is used when two or more independent variables are used to predict the dependent variable. In both cases the model is linear in its coefficients; adding more predictors does not by itself make the relationship non-linear. Curved relationships can still be captured by including transformed predictors (for example, a squared term), while the model remains linear in the coefficients.

What are the assumptions of linear regression?

Linear regression makes several assumptions about the data, including linearity, independence, normality, and homoscedasticity. Linearity assumes that the relationship between the dependent and independent variables is linear. Independence assumes that the errors of different observations are independent of each other. Normality assumes that the errors are normally distributed, which is usually checked on the residuals. Homoscedasticity assumes that the variance of the errors is constant across all levels of the independent variables.

How do you interpret the coefficients in a linear regression model?

The intercept represents the expected value of the dependent variable when all independent variables are zero. Each slope coefficient represents the expected change in the dependent variable for a one-unit increase in its independent variable, holding all other variables constant.

What is the purpose of using linear regression in predictive modeling?

Linear regression is used in predictive modeling to model the relationship between the dependent and independent variables and to predict the value of the dependent variable for new observations. It is commonly used in applications such as forecasting, trend analysis, and risk assessment.

How do you handle outliers in linear regression?

Outliers can have a significant impact on the results of a linear regression model. One approach to handling outliers is to remove them from the dataset. Another approach is to use robust regression methods that are less sensitive to outliers. It is important to carefully evaluate the impact of outliers on the model and to choose an appropriate approach based on the specific application.
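To illustrate the robust-regression option, the sketch below compares ordinary least squares with the Theil-Sen estimator, which takes the median of the slopes between all pairs of points. Theil-Sen is only one of several robust methods and is chosen here because it is short to write in plain Python; the function names and the data (five points on Y = 1 + 2*X plus one outlier) are illustrative assumptions.

```python
from statistics import median


def fit_ols(x, y):
    """Ordinary least squares intercept and slope for one predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def fit_theil_sen(x, y):
    """Theil-Sen intercept and slope: medians instead of means."""
    n = len(x)
    # Slopes of the lines through every pair of points with distinct x.
    slopes = [(y[j] - y[i]) / (x[j] - x[i])
              for i in range(n) for j in range(i + 1, n)
              if x[j] != x[i]]
    if not slopes:
        raise ValueError("need at least two points with distinct x values")
    slope = median(slopes)
    # Intercept: median of the offsets left after removing the slope.
    intercept = median([yi - slope * xi for xi, yi in zip(x, y)])
    return intercept, slope


# Hypothetical data: the last point should be 13 but was recorded as 40.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [3.0, 5.0, 7.0, 9.0, 11.0, 40.0]

ols_intercept, ols_slope = fit_ols(x, y)
ts_intercept, ts_slope = fit_theil_sen(x, y)
print(f"OLS:       intercept={ols_intercept:.2f}, slope={ols_slope:.2f}")
print(f"Theil-Sen: intercept={ts_intercept:.2f}, slope={ts_slope:.2f}")
```

A single outlier pulls the least squares slope far above 2, while the median-based estimate stays at the slope of the other five points. Whether removing the point or using a robust fit is appropriate still depends on whether the outlier is a recording error or a genuine observation.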

What are some common evaluation metrics for linear regression models?

Common evaluation metrics for linear regression models include mean squared error (MSE), root mean squared error (RMSE), and coefficient of determination (R-squared). MSE is the average squared difference between the predicted and actual values of the dependent variable; RMSE is its square root, which expresses the error in the same units as the dependent variable. R-squared measures the proportion of the variance in the dependent variable that is explained by the independent variables in the model.