Random Forest Regression is an ensemble learning method that combines many decision trees to produce accurate and robust predictions on complex regression problems. It is particularly useful when dealing with high-dimensional or noisy data. Support for missing values depends on the implementation, and many libraries expect missing entries to be imputed first.

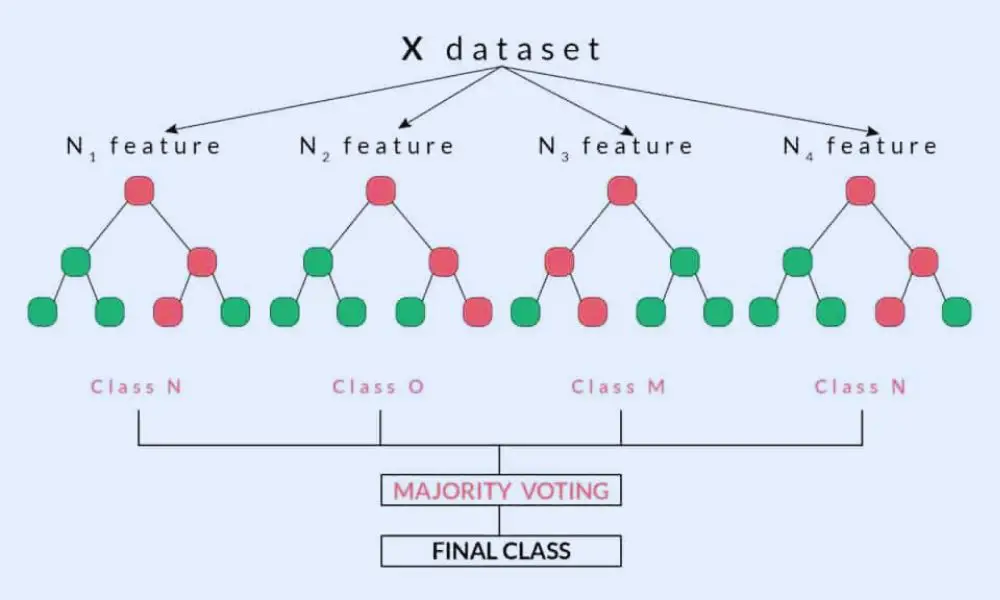

The basic idea behind Random Forest Regression is to create a large number of decision trees, each trained on a random sample of the data. The final prediction is produced by aggregating, typically averaging, the predictions of the individual trees. This approach helps to reduce overfitting and improve the generalization performance of the model. Random Forest Regression can work with both categorical and continuous variables, although some implementations require categorical variables to be encoded numerically first, which makes it a versatile algorithm for a wide range of regression tasks.

Overall, Random Forest Regression is a flexible machine learning algorithm that can be used to solve a wide range of regression problems. Its ability to cope with high-dimensional and noisy data makes it a popular choice among data scientists and machine learning practitioners. In the next sections, we will delve deeper into the inner workings of Random Forest Regression and explore how it can be applied to solve real-world regression problems.

Overview

Random Forest Regression is an ensemble learning method that combines decision trees with the concept of bagging (bootstrap aggregating). Ensemble learning involves combining multiple models to achieve better performance than any individual model. Random Forest Regression predicts continuous values, which is what distinguishes it from the classification variant of the same algorithm.

The Random Forest Regression algorithm works by creating a forest of decision trees, where each tree is trained on a random sample of the data, usually a bootstrap sample drawn with replacement. Each tree also considers only a random subset of the features when choosing a split, which makes the trees less correlated with one another. The final prediction is the average of the predictions made by all the trees in the forest.
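The procedure described above can be sketched by hand. This is a minimal illustration rather than a production implementation: it assumes scikit-learn's `DecisionTreeRegressor` as the base learner, and the helper names `fit_forest` and `predict_forest`, the synthetic data, and the parameter values are made up for this example.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_forest(X, y, n_trees=50, max_features="sqrt", seed=0):
    """Train n_trees decision trees, each on its own bootstrap sample."""
    rng = np.random.default_rng(seed)
    n_samples = X.shape[0]
    trees = []
    for _ in range(n_trees):
        # Bootstrap sample: draw n_samples row indices with replacement.
        idx = rng.integers(0, n_samples, size=n_samples)
        # max_features limits how many features are considered at each split.
        tree = DecisionTreeRegressor(
            max_features=max_features,
            random_state=int(rng.integers(0, 2**31 - 1)),
        )
        tree.fit(X[idx], y[idx])
        trees.append(tree)
    return trees


def predict_forest(trees, X):
    """Average the predictions of all trees."""
    return np.mean([tree.predict(X) for tree in trees], axis=0)


# Synthetic, non-linear data used only for illustration.
rng = np.random.default_rng(42)
X = rng.uniform(-3, 3, size=(300, 2))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1] + rng.normal(0, 0.1, size=300)

trees = fit_forest(X, y)
pred = predict_forest(trees, X[:5])
print(pred)
```

In practice a library implementation is preferable; the sketch is only meant to show that the forest is nothing more than bootstrap sampling, per-split feature sampling, and averaging.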

One of the key advantages of Random Forest Regression is that it is less prone to overfitting than a single decision tree. This is because each tree in the forest is trained on a different sample of the data, and averaging their predictions reduces the variance of the model. Additionally, the algorithm is fairly robust to outliers in the input features. Handling of missing data varies by implementation, so it should not be assumed without checking.
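Because each tree sees only a sample of the rows, the rows it did not see can serve as a built-in validation set. The sketch below assumes scikit-learn's `RandomForestRegressor` with `oob_score=True`; the synthetic dataset and parameter values are illustrative only.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor

# Synthetic data, used here only for illustration.
X, y = make_regression(
    n_samples=500, n_features=10, n_informative=5, noise=15.0, random_state=0
)

# With oob_score=True, each training row is scored using only the trees
# whose bootstrap sample did not contain that row.
model = RandomForestRegressor(n_estimators=200, oob_score=True, random_state=0)
model.fit(X, y)

train_r2 = model.score(X, y)  # optimistic: these rows were seen in training
oob_r2 = model.oob_score_     # estimate of performance on unseen rows
print(f"training R^2: {train_r2:.3f}, out-of-bag R^2: {oob_r2:.3f}")
```

The training score is usually the higher of the two; the out-of-bag score is the more honest estimate of how the model generalizes.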

Random Forest Regression is a versatile algorithm that can be used for a wide range of regression tasks, including predicting stock prices, housing prices, and customer churn rates. It is also relatively easy to use: because tree splits depend only on the ordering of feature values, it does not need feature scaling and usually works with limited feature engineering. Overall, Random Forest Regression is a useful tool for data scientists and machine learning practitioners who need to build accurate regression models.

Advantages of Random Forest Regression

Random Forest Regression is a powerful machine learning technique that offers several advantages over other traditional regression methods. Here are some of the key advantages of using Random Forest Regression:

- Highly Accurate: Random Forest Regression often achieves strong predictive accuracy with little tuning. It produces an accurate regressor on many data sets, making it an excellent choice for regression problems.

- Efficient on Large Datasets: Random Forest Regression scales well to large datasets because its trees can be trained in parallel. It can handle thousands of input variables without requiring variables to be removed first, making it suitable for large-scale regression tasks.

- Reduces Overfitting: Random Forest Regression is an ensemble learning method that uses multiple decision trees to make predictions. By averaging the predictions of many trees, it reduces the variance of a single tree and therefore the risk of overfitting.

- Handles Non-Linear Relationships: Random Forest Regression can handle non-linear relationships between the input and output variables. It can capture complex interactions between variables that other regression methods may miss.

- Flexible: The random forest approach can be used for both regression and classification tasks; Random Forest Regression is the variant for continuous targets. It can work with both continuous and categorical input variables, making it a versatile tool for data analysis.

In summary, Random Forest Regression is a powerful machine-learning algorithm that offers several advantages over traditional regression methods. It is highly accurate, efficient on large datasets, reduces overfitting, can handle non-linear relationships, and is a flexible tool for data analysis.
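The overfitting advantage listed above can be checked with a quick comparison between a single decision tree and a forest. This is a sketch on synthetic data, assuming scikit-learn; the dataset, split, and parameter values are illustrative, and results on real data will differ.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Noisy synthetic data, used here only for illustration.
X, y = make_regression(
    n_samples=600, n_features=10, n_informative=5, noise=25.0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# A single fully grown tree memorizes the training set.
tree = DecisionTreeRegressor(random_state=0).fit(X_train, y_train)
# A forest averages many such trees, each trained on a bootstrap sample.
forest = RandomForestRegressor(n_estimators=200, random_state=0).fit(X_train, y_train)

tree_train_r2 = tree.score(X_train, y_train)
tree_test_r2 = tree.score(X_test, y_test)
forest_test_r2 = forest.score(X_test, y_test)

print(f"single tree: train R^2 = {tree_train_r2:.3f}, test R^2 = {tree_test_r2:.3f}")
print(f"forest:      test R^2 = {forest_test_r2:.3f}")
```

The gap between the single tree's training and test scores is the overfitting that averaging is meant to reduce.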

Disadvantages of Random Forest Regression

While Random Forest Regression is a powerful algorithm, it also has some limitations that must be considered before deciding to use it. Here are some of the disadvantages of Random Forest Regression:

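- Overfitting: Adding more trees does not by itself cause a random forest to overfit; the error typically levels off as the forest grows. Overfitting can still occur when the individual trees are grown very deep on noisy data, which can lead to poor performance on new, unseen data. To limit it, tune tree-level settings such as the maximum depth and the minimum number of samples per leaf.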

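- Computational Complexity: Random Forest Regression can be computationally expensive, especially when dealing with large datasets or a large number of features. Training time, prediction time, and memory use all grow with the number of trees, because every tree must be built, stored, and evaluated. The trees are independent of one another, so the work can be parallelized.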

- Bias-Variance Tradeoff: Averaging many trees reduces the variance of the model, but it does not remove the bias of the individual trees. Constraining the trees, for example by limiting their depth, lowers variance further at the cost of more bias. In addition, because every prediction is an average of training targets, the model cannot predict values outside the range seen during training.

- Interpretability: Random Forest Regression is not very interpretable. It can be difficult to understand how the model arrived at a particular prediction, especially when dealing with a large number of trees.

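- Handling Missing Data: Many implementations of Random Forest Regression do not handle missing data natively. If there are missing values in the training data, they usually have to be imputed or the affected rows removed before training, and a poor imputation choice can bias the predictions upward or downward.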

Overall, while Random Forest Regression is a powerful algorithm, it is not without its limitations. It is important to carefully consider these limitations before deciding to use it for a particular problem.
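The missing-data limitation above is commonly worked around by imputing values before the forest sees them. Here is a minimal sketch, assuming scikit-learn's `SimpleImputer` and `make_pipeline`; median imputation, the 10% missing rate, and the synthetic data are illustrative choices, not recommendations.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.impute import SimpleImputer
from sklearn.pipeline import make_pipeline

# Synthetic data, used here only for illustration.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 4))
y = 2.0 * X[:, 0] + X[:, 1] ** 2 + rng.normal(0, 0.1, size=200)

# Knock out roughly 10% of the feature values at random.
X_missing = X.copy()
mask = rng.random(X.shape) < 0.1
X_missing[mask] = np.nan

# The imputer fills each NaN with the column median learned during fit,
# then passes the completed matrix to the forest.
pipeline = make_pipeline(
    SimpleImputer(strategy="median"),
    RandomForestRegressor(n_estimators=100, random_state=0),
)
pipeline.fit(X_missing, y)
pred = pipeline.predict(X_missing[:5])
print(pred)
```

Keeping the imputer inside the pipeline means the same medians learned from the training data are reused at prediction time.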

Applications of Random Forest Regression

Random Forest Regression is a powerful technique that has found applications in various fields. Some of the prominent applications are:

1. Predictive Modeling

Random Forest Regression is widely used for predictive modeling in industries such as finance, healthcare, and marketing. It can be used to predict customer churn rates, loan default rates, and future sales.

2. Image Processing

Random forests can be used for image processing tasks such as image classification, object detection, and image segmentation; the regression variant applies when the target is a continuous quantity rather than a class label. They are particularly useful in cases where the features of the image are non-linearly related to the output.

3. Natural Language Processing

Random forests can be used for natural language processing tasks such as sentiment analysis and text classification once the text has been converted into numeric features; the regression variant applies when the target is a continuous score. They can handle high-dimensional data and non-linear relationships between the input and output.

4. Recommender Systems

Random Forest Regression can be used to build recommender systems that suggest products or services to users based on their preferences. It can handle large datasets and can generate accurate recommendations even in the presence of sparse data.

5. Time Series Analysis

Random Forest Regression can be used for time series analysis and forecasting. It can handle non-linear relationships between the input and output and can capture complex patterns in the data.

In summary, Random Forest Regression is a versatile technique that has found applications in a wide range of fields. Its ability to handle non-linear relationships, high-dimensional data, and large datasets makes it a popular choice for predictive modeling, image processing, natural language processing, recommender systems, and time series analysis.

Frequently Asked Questions

How can I implement random forest regression in Python using scikit-learn (sklearn)?

You can implement random forest regression in Python using the scikit-learn (sklearn) library. Sklearn provides a `RandomForestRegressor` class that you can use to create a random forest regression model. You can set the number of trees in the forest (`n_estimators`), the maximum depth of the trees (`max_depth`), and other hyperparameters.
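A minimal sketch of that workflow follows. The synthetic dataset from `make_regression`, the split ratio, and the hyperparameter values are assumptions made for illustration; substitute your own data and settings.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

# Synthetic regression data, used here only for illustration.
X, y = make_regression(
    n_samples=500, n_features=8, n_informative=4, noise=10.0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# n_estimators is the number of trees; max_depth=None lets each tree grow fully.
model = RandomForestRegressor(n_estimators=200, max_depth=None, random_state=0)
model.fit(X_train, y_train)

# Predict continuous target values for unseen rows.
y_pred = model.predict(X_test)
print(y_pred[:5])
```

Setting `random_state` makes the bootstrap samples and feature choices reproducible between runs.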

Can I use random forest regression for evaluating feature importance in my dataset?

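Yes, you can use random forest regression for evaluating feature importance in your dataset. A fitted random forest provides an importance score for each feature, typically based on how much the feature reduces the prediction error at the splits where it is used. You can use this score to identify the most important features in your dataset. These impurity-based scores can favor features with many distinct values, so permutation importance computed on held-out data is a common cross-check.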
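Both kinds of importance score can be obtained in scikit-learn, as the sketch below shows. It assumes the `feature_importances_` attribute of `RandomForestRegressor` and the `permutation_importance` function from `sklearn.inspection`; the synthetic dataset, in which only two of six features are informative, is made up for this example.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

# Six features, of which only two actually drive the target.
X, y = make_regression(
    n_samples=400, n_features=6, n_informative=2, noise=5.0, random_state=1
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=1
)

model = RandomForestRegressor(n_estimators=100, random_state=1)
model.fit(X_train, y_train)

# Impurity-based importance: computed from the training data, sums to 1.
impurity_importance = model.feature_importances_

# Permutation importance: drop in test score when one feature is shuffled.
perm = permutation_importance(model, X_test, y_test, n_repeats=5, random_state=1)

for i in np.argsort(impurity_importance)[::-1]:
    print(
        f"feature {i}: impurity = {impurity_importance[i]:.3f}, "
        f"permutation = {perm.importances_mean[i]:.3f}"
    )
```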

What are some common hyperparameters to tune for a random forest regression model?

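Some common hyperparameters to tune for a random forest regression model include the number of trees in the forest, the maximum depth of the trees, the minimum number of samples required to split an internal node, the minimum number of samples required to be at a leaf node, and the number of features considered at each split. In scikit-learn these are `n_estimators`, `max_depth`, `min_samples_split`, `min_samples_leaf`, and `max_features`.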
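One way to tune these settings is an exhaustive grid search with cross-validation. The sketch below assumes scikit-learn's `GridSearchCV`; the grid values are deliberately small and illustrative, and the data is synthetic.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic data, used here only for illustration.
X, y = make_regression(n_samples=300, n_features=6, noise=10.0, random_state=0)

# Candidate values for each hyperparameter (2 x 2 x 2 x 2 = 16 combinations).
param_grid = {
    "n_estimators": [25, 50],
    "max_depth": [None, 5],
    "min_samples_split": [2, 10],
    "min_samples_leaf": [1, 5],
}

# Each combination is scored with 3-fold cross-validation on negative MSE
# (scikit-learn maximizes scores, so MSE is negated).
search = GridSearchCV(
    RandomForestRegressor(random_state=0),
    param_grid,
    cv=3,
    scoring="neg_mean_squared_error",
)
search.fit(X, y)

best_params = search.best_params_
print(best_params)
print(f"best cross-validated MSE: {-search.best_score_:.1f}")
```

For larger grids, a randomized search over the same parameters is a common, cheaper alternative.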

How do I evaluate the performance of a random forest regression model?

You can evaluate the performance of a random forest regression model using metrics such as mean squared error (MSE), mean absolute error (MAE), and R-squared. MSE and MAE measure the difference between the predicted and actual values, while R-squared measures the proportion of variance in the target variable that is explained by the model.
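To make the three metrics concrete, the sketch below computes each one by hand with NumPy and checks it against the corresponding function in `sklearn.metrics`. The four actual and predicted values are made-up numbers chosen for illustration.

```python
import numpy as np
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score

# Made-up actual and predicted values, for illustration only.
y_true = np.array([3.0, -0.5, 2.0, 7.0])
y_pred = np.array([2.5, 0.0, 2.0, 8.0])

errors = y_true - y_pred

# MSE: mean of squared errors (penalizes large errors more heavily).
mse_manual = np.mean(errors ** 2)
# MAE: mean of absolute errors (same units as the target).
mae_manual = np.mean(np.abs(errors))
# R-squared: 1 - (residual sum of squares / total sum of squares).
ss_res = np.sum(errors ** 2)
ss_tot = np.sum((y_true - y_true.mean()) ** 2)
r2_manual = 1.0 - ss_res / ss_tot

mse = mean_squared_error(y_true, y_pred)
mae = mean_absolute_error(y_true, y_pred)
r2 = r2_score(y_true, y_pred)

print(f"MSE = {mse:.3f}, MAE = {mae:.3f}, R^2 = {r2:.3f}")
# prints: MSE = 0.375, MAE = 0.500, R^2 = 0.949
```

These metrics should be computed on data the model did not see during training, such as a held-out test set.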

Is it possible to use random forest regression for time series forecasting?

Yes, it is possible to use random forest regression for time series forecasting. You can use lagged variables as features and train the model on a sliding window of historical data. However, random forest regression may not be the best choice for time series forecasting: it treats each row as an independent observation, so temporal dependencies are captured only through the lagged features you supply, and it cannot predict values outside the range of the training targets, which matters for series with a trend.
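The lagged-feature approach can be sketched as follows. The helper `make_lag_matrix`, the noisy sine series, the choice of five lags, and the split point are all hypothetical choices for this example; the split is chronological so that the model is never trained on data from after the period it forecasts.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor


def make_lag_matrix(series, n_lags):
    """Build rows of n_lags consecutive values; the target is the next value."""
    n_rows = len(series) - n_lags
    X = np.column_stack([series[i : i + n_rows] for i in range(n_lags)])
    y = series[n_lags:]
    return X, y


# Synthetic seasonal series with noise, used here only for illustration.
rng = np.random.default_rng(0)
t = np.arange(200)
series = np.sin(2 * np.pi * t / 20) + rng.normal(0, 0.1, size=200)

X, y = make_lag_matrix(series, n_lags=5)

# Chronological split: train on the earlier rows, forecast the later ones.
split = 150
model = RandomForestRegressor(n_estimators=100, random_state=0)
model.fit(X[:split], y[:split])

# One-step-ahead forecasts using the true lagged values as inputs.
forecast = model.predict(X[split:])
print(forecast[:5])
```

Every forecast is an average of training targets, so on a trending series the forecasts would stay within the range seen in training; differencing or detrending the series first is a common way to work around this.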

What is the difference between random forest regression and other ensemble methods like gradient boosting?

Random forest regression and gradient boosting are both ensemble methods that combine multiple decision trees to make predictions. However, there are some differences between the two methods. Random forest regression builds each tree independently, while gradient boosting builds the trees sequentially, with each new tree trying to correct the errors of the previous ones. Additionally, random forest regression relies on bagging, which mainly reduces variance by averaging independently trained trees, while gradient boosting relies on boosting, which mainly reduces bias by adding trees that fit the remaining errors.
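The two methods can be tried side by side in scikit-learn. This is a sketch on synthetic data, assuming `RandomForestRegressor` and `GradientBoostingRegressor` from `sklearn.ensemble`; the parameter values are illustrative, and which model scores higher depends on the dataset and tuning.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.model_selection import train_test_split

# Synthetic data, used here only for illustration.
X, y = make_regression(
    n_samples=500, n_features=8, n_informative=4, noise=10.0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Random forest: 100 deep trees trained independently, predictions averaged.
rf = RandomForestRegressor(n_estimators=100, random_state=0)
rf.fit(X_train, y_train)

# Gradient boosting: 100 shallow trees trained one after another, each
# fitted to the errors left by the trees before it.
gb = GradientBoostingRegressor(
    n_estimators=100, learning_rate=0.1, max_depth=3, random_state=0
)
gb.fit(X_train, y_train)

rf_r2 = rf.score(X_test, y_test)
gb_r2 = gb.score(X_test, y_test)
print(f"random forest R^2:     {rf_r2:.3f}")
print(f"gradient boosting R^2: {gb_r2:.3f}")

# staged_predict exposes the sequential nature of boosting: it yields the
# ensemble's prediction after each additional tree.
staged = list(gb.staged_predict(X_test))
print(f"number of boosting stages: {len(staged)}")
```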