Zero-Shot Learning (ZSL) tackles a problem that standard supervised learning leaves open: handling classes that never appear in the training data.

This article covers the principles, techniques, and applications of Zero-Shot Learning, with an emphasis on how it expands the capabilities of machine learning models.

Zero-Shot Learning (ZSL) represents a paradigm shift in machine learning: at test time, models are expected to recognize classes for which they saw no labeled examples during training.

This introduces a level of generalization that traditional models often struggle to achieve. As we explore the intricacies of ZSL, we’ll uncover its significance and preview the techniques and applications that make it a transformative force.

Understanding Zero-Shot Learning

Definition and Core Principles

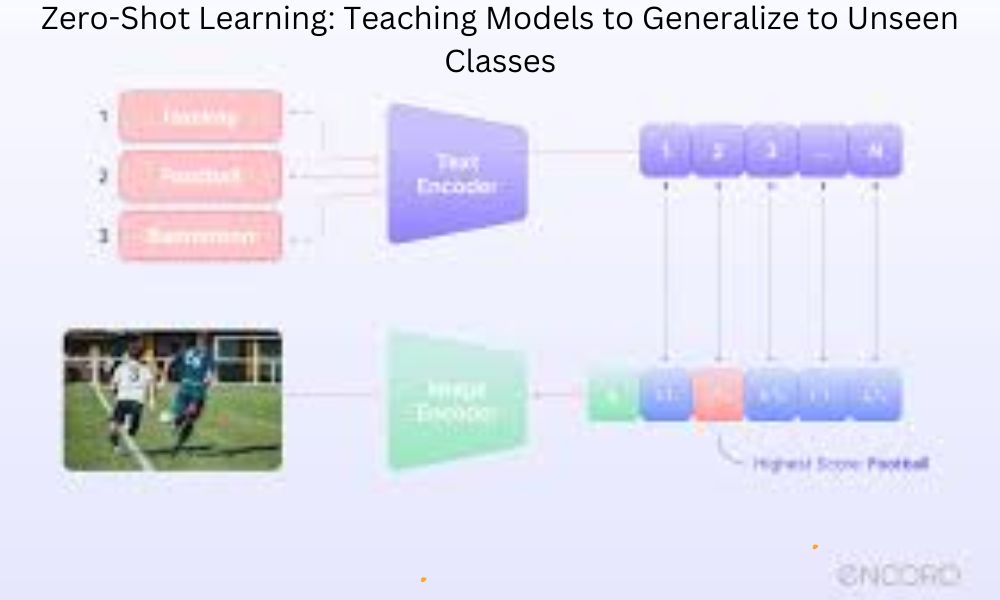

Zero-shot learning changes what a model learns from. Rather than relying solely on labeled examples of every class, a model generalizes to unseen classes by drawing on transfer learning and embedding methods, typically combined with auxiliary descriptions of the classes such as attributes or text.

Differentiating ZSL from Traditional Machine Learning

To grasp the essence of ZSL, it’s crucial to understand how it differs from traditional machine learning. While conventional models excel in recognizing predefined classes, ZSL takes a leap by extending this recognition to classes not explicitly present in the training data.
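One common way to make this distinction precise is sketched below. The notation is an assumption introduced here for illustration, not taken from the article: the seen label set is written as Y^s, the unseen label set as Y^u, a(y) stands for some description of class y (an attribute vector or an embedding), and F is a compatibility score between an input and a class description.

```latex
\begin{aligned}
&\text{Training data: } \mathcal{D} = \{(x_i, y_i)\}_{i=1}^{N}, \quad y_i \in \mathcal{Y}^{s} \\
&\text{Seen and unseen labels are disjoint: } \mathcal{Y}^{s} \cap \mathcal{Y}^{u} = \emptyset \\
&\text{Traditional classifier: } f : \mathcal{X} \to \mathcal{Y}^{s} \\
&\text{Zero-shot prediction: } \hat{y} = \arg\max_{y \in \mathcal{Y}^{u}} F\bigl(x, a(y)\bigr)
\end{aligned}
```

Under this reading, the traditional classifier can only ever output a seen label, while the zero-shot rule can score any class that has a description, including classes with no training examples.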

Importance of Generalization in ZSL

The core strength of ZSL lies in its ability to generalize knowledge. This section will explore why generalization is pivotal and how ZSL achieves it, setting the stage for a deeper dive into the techniques employed.

The Challenge of Unseen Classes

Limitations of Traditional Machine Learning Models

Traditional models face constraints when confronted with novel classes. Here, we’ll dissect the challenges these models encounter, laying the foundation for the need to embrace ZSL.

Concept of Unseen Classes in ZSL

Defining and understanding the concept of unseen classes is integral to appreciating the significance of ZSL. We’ll explore scenarios where unseen classes pose challenges and how ZSL provides a solution.

Real-World Examples

To solidify our understanding, real-world examples will be presented, illustrating situations where ZSL becomes indispensable. From image recognition to natural language processing, these examples will underscore the practical relevance of ZSL.

Techniques in Zero-Shot Learning

Embedding-based Approaches

Description of Embedding Methods

Embedding methods play a pivotal role in ZSL. This section will provide a detailed description of various embedding techniques and their application in achieving zero-shot recognition.
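A minimal sketch of the embedding idea follows, assuming the input has already been mapped into the same vector space as the class descriptions. The class names, the 3-dimensional vectors, and the helper names are all hypothetical values chosen for illustration; a real system would obtain them from a trained encoder and from word or attribute embeddings.

```python
import numpy as np

def cosine_similarity(vec, matrix):
    """Cosine similarity between one vector and each row of a matrix."""
    vec = vec / np.linalg.norm(vec)
    matrix = matrix / np.linalg.norm(matrix, axis=1, keepdims=True)
    return matrix @ vec

def predict_unseen(projected_input, class_names, class_embeddings):
    """Pick the class whose embedding is most similar to the projected input."""
    scores = cosine_similarity(projected_input, class_embeddings)
    return class_names[int(np.argmax(scores))], scores

# Hypothetical semantic embeddings for classes with no training images.
unseen_names = ["zebra", "whale", "bat"]
unseen_embeddings = np.array([
    [0.9, 0.1, 0.0],  # zebra
    [0.0, 0.9, 0.1],  # whale
    [0.1, 0.0, 0.9],  # bat
])

# Assumption: a trained encoder has already mapped a test image to this point.
projected_image = np.array([0.8, 0.2, 0.1])

label, scores = predict_unseen(projected_image, unseen_names, unseen_embeddings)
print(label)
```

The key design point is that classification reduces to a nearest-neighbor search in the shared space, so adding a new class only requires adding its embedding, not retraining.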

Case Studies on Embeddings

To demonstrate the effectiveness of embeddings, we’ll delve into case studies showcasing how embedding-based approaches have excelled in recognizing unseen classes.

Attribute-based Approaches

Utilizing Attributes for Classification

Attributes offer a unique avenue for classifying unseen classes. This sub-section will explore how leveraging attributes enhances the zero-shot learning process.
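As a rough illustration, the sketch below scores each unseen class by how well predicted attribute probabilities match that class's attribute signature, similar in spirit to what the literature calls direct attribute prediction. The attribute names, the class signatures, and the predicted probabilities are hypothetical; in practice the probabilities would come from attribute classifiers trained on seen classes.

```python
import numpy as np

# Hypothetical attribute vocabulary and binary class signatures.
ATTRIBUTES = ["has_stripes", "lives_in_water", "can_fly"]
CLASS_SIGNATURES = {
    "zebra": np.array([1, 0, 0]),
    "whale": np.array([0, 1, 0]),
    "bat": np.array([0, 0, 1]),
}

def class_log_likelihood(attr_probs, signature):
    """Log-probability that the predicted attributes match a class signature.

    Attributes are treated as independent: use p where the class has the
    attribute and (1 - p) where it does not.
    """
    eps = 1e-9  # avoids log(0)
    per_attribute = np.where(signature == 1, attr_probs, 1.0 - attr_probs)
    return float(np.sum(np.log(per_attribute + eps)))

def classify_by_attributes(attr_probs, signatures):
    """Return the best-matching class and the score of every class."""
    scores = {
        name: class_log_likelihood(attr_probs, signature)
        for name, signature in signatures.items()
    }
    return max(scores, key=scores.get), scores

# Assumption: attribute classifiers produced these probabilities for one image.
predicted_attr_probs = np.array([0.10, 0.85, 0.05])

label, scores = classify_by_attributes(predicted_attr_probs, CLASS_SIGNATURES)
print(label)
```

No image of a whale is needed to reach this prediction; only the attribute classifiers and the whale signature are.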

Advantages and Challenges

While attribute-based approaches bring distinct advantages, they also pose challenges. We’ll navigate through the pros and cons, offering a comprehensive understanding of this ZSL technique.

Transfer Learning in ZSL

Leveraging Transfer Learning

Transfer learning acts as a catalyst in ZSL, enabling models to apply knowledge gained from one task to another. This section will dissect the nuances of transfer learning in the context of zero-shot recognition.
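One simple way to picture this transfer is a projection learned on seen classes and then reused, unchanged, on unseen ones. The sketch below is a toy under stated assumptions: the semantic vectors, the fixed `MIXING` matrix standing in for a pretrained feature extractor, and the ridge-regression projection are all illustrative choices, not a method described in this article.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 3-d semantic vectors for seen and unseen classes.
seen_semantics = np.array(
    [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0]], dtype=float
)
unseen_names = ["class_A", "class_B"]
unseen_semantics = np.array([[1, 0, 1], [0, 1, 1]], dtype=float)

# Stand-in for a pretrained backbone: features are a fixed linear mix
# of the class semantics plus a little noise (an assumption of this toy).
MIXING = np.array(
    [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [0, 1, 1]], dtype=float
)

def make_features(semantics, n_per_class):
    """Generate noisy 5-d features and the matching semantic targets."""
    targets = np.repeat(semantics, n_per_class, axis=0)
    noise = rng.normal(scale=0.01, size=(len(targets), MIXING.shape[0]))
    return targets @ MIXING.T + noise, targets

def fit_ridge_projection(features, targets, reg=1e-3):
    """Closed-form ridge regression from feature space to semantic space."""
    gram = features.T @ features + reg * np.eye(features.shape[1])
    return np.linalg.solve(gram, features.T @ targets)

# Learn the projection using seen classes only.
train_x, train_s = make_features(seen_semantics, n_per_class=50)
W = fit_ridge_projection(train_x, train_s)

# Reuse it on one sample drawn from the first unseen class.
test_x, _ = make_features(unseen_semantics[:1], n_per_class=1)
projected = test_x @ W
distances = np.linalg.norm(unseen_semantics - projected, axis=1)
predicted = unseen_names[int(np.argmin(distances))]
print(predicted)
```

The projection never sees an example of `class_A` during fitting; what transfers is the learned relationship between features and semantics.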

Applications and Potential Pitfalls

Understanding the practical applications of transfer learning in ZSL is vital. We’ll explore successful applications while being mindful of potential pitfalls that need to be addressed.

Applications of Zero-Shot Learning

Image Recognition and Computer Vision

Improving Image Recognition

ZSL extends image recognition to objects that have no labeled training images. We will explore how models built with ZSL techniques identify novel objects.

Real-World Applications in Computer Vision

From autonomous vehicles to healthcare, we’ll unravel the real-world applications of ZSL in the realm of computer vision, showcasing its impact on diverse industries.

Natural Language Processing (NLP)

ZSL Applications in NLP

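In natural language processing, ZSL lets a model take on labels or tasks it was not explicitly trained for, such as assigning a text to a category that is described only by its name. This section will delve into how ZSL extends the ability of language models to understand and generate text.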
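To make the idea concrete, here is a deliberately simple sketch of zero-shot text classification: each label is represented only by a short description, and the input is assigned to the label whose description it overlaps with most. The labels, their descriptions, and the bag-of-words similarity are assumptions chosen to keep the example self-contained; practical systems generally rely on learned text embeddings or pretrained language models instead.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Lowercased token counts; a crude stand-in for a learned text encoder."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[token] * b[token] for token in set(a) & set(b))
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hypothetical labels, each defined by a description rather than by examples.
LABEL_DESCRIPTIONS = {
    "sports": "team match score player game league",
    "finance": "market stock price bank investor shares",
    "weather": "rain storm temperature forecast wind snow",
}

def zero_shot_classify(text, label_descriptions):
    """Return the label whose description is most similar to the text."""
    text_vector = bag_of_words(text)
    scores = {
        label: cosine(text_vector, bag_of_words(description))
        for label, description in label_descriptions.items()
    }
    return max(scores, key=scores.get), scores

label, scores = zero_shot_classify(
    "the stock price fell after the bank warned every investor",
    LABEL_DESCRIPTIONS,
)
print(label)
```

Adding a new category here means adding one more description to the dictionary, with no labeled training text for it.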

Enhancing Language Models

By showcasing examples, we’ll highlight instances where ZSL has played a transformative role in enhancing language models, making them adept at generalizing to unseen linguistic patterns.

Anomaly Detection

Role of ZSL in Detecting Anomalies

Beyond traditional applications, ZSL finds its place in anomaly detection. This sub-section will elaborate on how ZSL aids in identifying novel anomalies across various industries.

Use Cases for Anomaly Detection

Real-world use cases will be presented, demonstrating how ZSL’s anomaly detection capabilities offer a proactive approach to identifying and addressing irregularities.

Challenges and Future Directions

Current Challenges in Zero-Shot Learning

Data Scarcity and Bias Issues

The challenges in ZSL are multifaceted. We’ll dissect issues related to data scarcity and bias, offering insights into mitigating these challenges.

Interpretability and Explainability

Ensuring the interpretability and explainability of ZSL models is critical. This sub-section will explore strategies to enhance the transparency of zero-shot learning algorithms.

Emerging Trends

Ongoing Research and Development

As the field of ZSL evolves, staying abreast of ongoing research is essential. This section will provide a glimpse into the latest trends and developments shaping the future of ZSL.

Promising Directions

Looking forward, we’ll discuss promising directions that researchers and practitioners are exploring to push the boundaries of Zero-Shot Learning.

Case Studies

Transformative Impact of ZSL

By examining case studies across diverse domains, we’ll illustrate how ZSL has brought about transformative changes, proving its efficacy in real-world scenarios.

Conclusion

In wrapping up our exploration of Zero-Shot Learning, it’s evident that this paradigm shift holds immense potential in pioneering generalization within machine learning. By teaching models to transcend their training data, ZSL opens doors to unprecedented applications and advancements.

Frequently Asked Questions (FAQ)

How does Zero-Shot Learning differ from traditional machine learning?

Zero-shot learning differs from traditional machine learning by enabling models to recognize classes not explicitly present in the training data. Traditional models excel in recognizing predefined classes, while ZSL extends recognition to classes outside that set.

What are the core principles of Zero-Shot Learning?

The core principles of ZSL include leveraging transfer learning and embedding methods. These principles empower models to generalize knowledge and recognize unseen classes.

Can you provide examples of real-world applications of Zero-Shot Learning?

Certainly. In computer vision, ZSL enhances image recognition for novel objects. In natural language processing, ZSL improves language models’ ability to understand and generate text. Moreover, ZSL finds applications in anomaly detection across various industries.

What challenges does Zero-Shot Learning face?

ZSL faces challenges related to data scarcity, bias issues, and the interpretability of models. Addressing these challenges is crucial for the widespread adoption of ZSL in diverse applications.

How is Zero-Shot Learning shaping the future of machine learning?

Zero-shot learning is shaping the future of machine learning by pushing the boundaries of generalization. Ongoing research and developments, coupled with promising directions, indicate that ZSL will continue to play a pivotal role in advancing the capabilities of machine learning models.