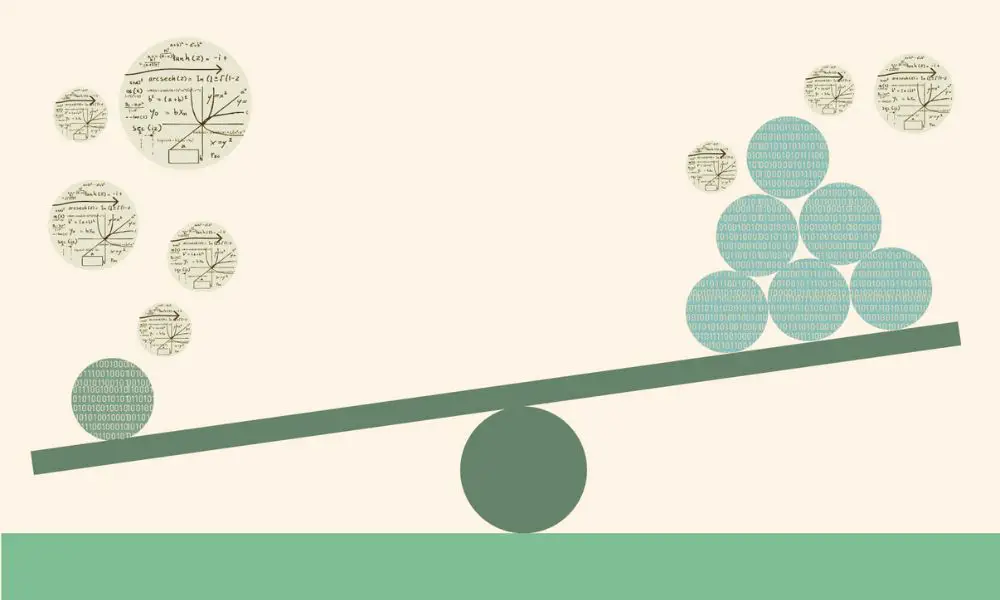

Imbalanced data is a common problem in machine learning, especially in binary classification tasks. It occurs when the training dataset has an unequal distribution of classes, which can bias the trained model. A model trained on such data tends to be dominated by the majority class and to predict the minority class poorly.

To tackle this issue, there are several strategies that can be used. Resampling methods, cost-sensitive models, and threshold tuning are three standard approaches for dealing with class imbalance. Resampling methods involve either oversampling the minority class or undersampling the majority class to balance the dataset. Cost-sensitive models adjust the cost of misclassifying the minority class to reduce the bias towards the majority class. Threshold tuning involves adjusting the decision threshold to increase the sensitivity of the model towards the minority class.

Understanding Class Imbalance

Class imbalance arises when one class supplies far more training examples than another, and it is particularly common in binary classification tasks. For example, in a medical diagnosis task, the number of healthy patients may far outweigh the number of sick patients, resulting in a model that is biased towards predicting healthy patients.

Class imbalance can pose several challenges for machine learning algorithms. In particular, a model biased towards the majority class can score poorly on minority-class precision and recall even while its overall accuracy looks high. As a result, it is essential to address class imbalance to ensure that the model can accurately predict both classes.

There are several ways to address class imbalance, including undersampling (also called subsampling), oversampling, and cost-sensitive learning. Undersampling involves randomly selecting a subset of the majority class to balance the dataset. Oversampling involves duplicating instances of the minority class to balance the dataset. Cost-sensitive learning involves assigning different misclassification costs to different classes to account for class imbalance.

It is important to note that there is no one-size-fits-all solution to class imbalance. The choice of strategy depends on the specific problem, dataset, and machine learning algorithm used. Therefore, it is essential to evaluate the performance of different strategies using appropriate metrics and cross-validation techniques to determine the best approach for a particular task.
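As one illustration of the evaluation step, the sketch below builds stratified cross-validation folds so that every fold keeps roughly the original class ratio. Stratification is an assumption made here (the text only asks for cross-validation), and `stratified_folds` is a hypothetical helper written for this example, not part of any library.

```python
import random
from collections import Counter, defaultdict


def stratified_folds(y, n_splits=5, seed=0):
    """Split example indices into n_splits folds, dealing each class out round-robin."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(y):
        by_class[label].append(idx)

    folds = [[] for _ in range(n_splits)]
    for indices in by_class.values():
        rng.shuffle(indices)  # randomize which fold each example lands in
        for position, idx in enumerate(indices):
            folds[position % n_splits].append(idx)
    return folds


# Toy labels (assumed for illustration): 90 majority (0) and 10 minority (1).
y = [0] * 90 + [1] * 10
folds = stratified_folds(y, n_splits=5)
for fold in folds:
    print(Counter(y[i] for i in fold))
```

With these toy labels, every fold holds 18 majority and 2 minority examples, so each validation split still contains minority cases to score against.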

Strategies for Handling Class Imbalance

Dealing with imbalanced data is a common challenge in data science. Here are some strategies for handling class imbalance:

1. Resampling

One of the most common approaches to handling class imbalance is resampling. Resampling involves either oversampling the minority class or undersampling the majority class. Oversampling involves adding more instances of the minority class to the dataset, while undersampling involves removing instances from the majority class. Both methods can help balance the classes and improve model performance.
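A minimal sketch of both resampling directions, using only the Python standard library. The function names and the toy data are assumptions made for illustration; real projects would more likely use a dedicated library.

```python
import random
from collections import Counter


def _group_by_class(X, y):
    """Collect feature rows under their class label."""
    groups = {}
    for features, label in zip(X, y):
        groups.setdefault(label, []).append(features)
    return groups


def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen rows until every class matches the largest one."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    target = max(len(rows) for rows in groups.values())
    X_out, y_out = [], []
    for label, rows in groups.items():
        extra = [rng.choice(rows) for _ in range(target - len(rows))]
        X_out.extend(rows + extra)
        y_out.extend([label] * target)
    return X_out, y_out


def random_undersample(X, y, seed=0):
    """Keep a random subset of each class, sized to match the smallest one."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    target = min(len(rows) for rows in groups.values())
    X_out, y_out = [], []
    for label, rows in groups.items():
        X_out.extend(rng.sample(rows, target))
        y_out.extend([label] * target)
    return X_out, y_out


# Toy data (assumed): 8 majority rows and 2 minority rows.
X = [[float(i)] for i in range(10)]
y = [0] * 8 + [1] * 2
X_over, y_over = random_oversample(X, y)
X_under, y_under = random_undersample(X, y)
print(Counter(y_over), Counter(y_under))
```

Resampling is normally applied to the training split only. Resampling before splitting lets duplicated rows leak into the validation data and inflates the scores.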

2. Cost-sensitive learning

Another approach is cost-sensitive learning. This involves adjusting the cost of misclassifying instances in the minority class. By increasing the cost of misclassifying minority instances, the model is encouraged to prioritize correctly classifying these instances.
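One way to make this concrete is to weight each example's loss by a per-class cost. The sketch below uses the inverse-frequency heuristic `n_samples / (n_classes * class_count)`, the same formula scikit-learn applies for `class_weight="balanced"`. The helper names and the toy labels are assumptions for illustration, and other cost schemes are equally valid.

```python
import math
from collections import Counter


def balanced_class_weights(y):
    """Weight each class inversely to its frequency."""
    counts = Counter(y)
    n_samples, n_classes = len(y), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}


def weighted_log_loss(y_true, p_pos, weights):
    """Mean binary cross-entropy, with each example scaled by its class weight."""
    eps = 1e-12  # keep log() away from 0
    total = 0.0
    for label, p in zip(y_true, p_pos):
        p = min(max(p, eps), 1 - eps)
        loss = -math.log(p) if label == 1 else -math.log(1 - p)
        total += weights[label] * loss
    return total / len(y_true)


# Toy labels (assumed): 90 majority (0) and 10 minority (1).
y = [0] * 90 + [1] * 10
weights = balanced_class_weights(y)
print(weights)

# The same confident mistake costs more on a minority example.
missed_minority = weighted_log_loss([1], [0.1], weights)
missed_majority = weighted_log_loss([0], [0.9], weights)
print(missed_minority, missed_majority)
```

Here the minority class receives a weight of 5.0 and the majority class a weight below 1, so an optimizer minimizing this loss is pushed harder to fix minority errors.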

3. Threshold tuning

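Threshold tuning involves adjusting the decision threshold of the model. For a model that outputs a score or probability for the minority class, lowering the threshold below the usual default of 0.5 makes the model classify more instances as the minority class. This raises recall on the minority class, usually at the cost of more false positives, so the threshold should be chosen against the metric that matters for the task.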
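To illustrate, the sketch below scans candidate thresholds and keeps the highest one that still reaches a required recall on the minority class. The scores, labels, recall target, and helper names are all made up for the example. In practice the threshold would be chosen on a validation set, not on the test data.

```python
def precision_recall_at(y_true, scores, threshold):
    """Precision and recall for the positive class (label 1) at a given threshold."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    # Convention used here: a metric with an empty denominator counts as 0.0.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def highest_threshold_with_recall(y_true, scores, thresholds, min_recall):
    """Return the largest threshold whose recall meets min_recall, or None."""
    for t in sorted(thresholds, reverse=True):
        if precision_recall_at(y_true, scores, t)[1] >= min_recall:
            return t
    return None


# Toy model outputs (assumed): 7 majority examples followed by 3 minority examples.
y_true = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]
scores = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.45, 0.35, 0.40, 0.80]
thresholds = [i / 10 for i in range(1, 10)]

chosen = highest_threshold_with_recall(y_true, scores, thresholds, min_recall=1.0)
print("default 0.5:", precision_recall_at(y_true, scores, 0.5))
print("chosen", chosen, ":", precision_recall_at(y_true, scores, chosen))
```

On this toy data the default threshold finds only one of the three minority examples, while the chosen threshold finds all three and accepts two false positives in exchange.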

4. Robust performance measures

Robust performance measures such as precision, recall, F1-score, and area under the receiver operating characteristic curve (AUC-ROC) can help evaluate model performance in the presence of class imbalance. These measures take into account the relative performance of the model on both the minority and majority classes, providing a more accurate assessment of overall performance.

Overall, handling class imbalance is an essential step in building effective machine learning models. By using a combination of these strategies, data scientists can improve model performance and ensure that their models are effective and accurate.

Evaluation Metrics for Imbalanced Data

When working with imbalanced datasets, traditional evaluation metrics such as accuracy can be misleading. This is because these metrics do not take into account the class distribution of the data. Therefore, it is important to use evaluation metrics that are sensitive to class imbalance.
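A small worked example of why accuracy misleads, assuming a toy dataset with 5% positives and a "model" that always predicts the majority class:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Fraction of actual positives that were predicted positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    return sum(p == positive for p in preds_for_positives) / len(preds_for_positives)


# Toy data (assumed): 95 negatives, 5 positives, and a constant prediction of 0.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100

print("accuracy:", accuracy(y_true, y_pred))
print("recall:  ", recall(y_true, y_pred))
```

The constant predictor reaches 95% accuracy while finding none of the positive cases, which is exactly the failure that accuracy alone hides.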

One commonly used evaluation metric for imbalanced data is the area under the receiver operating characteristic curve (AUC-ROC). This metric measures the trade-off between the true positive rate and the false positive rate for different threshold values. AUC-ROC values range from 0 to 1, with a value of 0.5 indicating random guessing and a value of 1 indicating perfect classification.
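AUC-ROC can also be read as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counted as half. The sketch below computes it directly from that definition. It is a quadratic-time illustration on made-up scores, not an efficient implementation, and `roc_auc` is a hypothetical helper name.

```python
def roc_auc(y_true, scores):
    """AUC-ROC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC-ROC needs at least one example of each class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


# Toy model outputs (assumed): 7 negatives followed by 3 positives.
y_true = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]
scores = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.45, 0.35, 0.40, 0.80]
print(roc_auc(y_true, scores))

# A model that gives every example the same score carries no ranking information.
print(roc_auc(y_true, [0.5] * len(y_true)))
```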

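Another useful evaluation metric for imbalanced data is the area under the precision-recall curve (AUC-PR). This metric measures the trade-off between precision and recall for different threshold values. AUC-PR values range from 0 to 1, with a value of 1 indicating perfect classification. Unlike AUC-ROC, the baseline for random guessing is not a fixed number: it is approximately the fraction of positive examples in the data, so an AUC-PR of 0.3 is strong when 1% of the examples are positive and weak when 30% are.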
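One common way to summarize the precision-recall curve in a single number is average precision: the mean of the precision values measured at each rank where a positive example appears. The sketch below assumes binary labels with 1 as the positive class and no tied scores, and `average_precision` is a hypothetical helper name. It also prints the fraction of positives, since a classifier with no skill scores close to that fraction.

```python
def average_precision(y_true, scores):
    """Mean of precision@k over the ranks k at which a positive example appears."""
    n_pos = sum(y_true)
    if n_pos == 0:
        raise ValueError("average precision needs at least one positive example")
    # Rank examples from highest to lowest score (assumes no tied scores).
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / rank  # precision among the top `rank` examples
    return total / n_pos


# Toy model outputs (assumed): 7 negatives followed by 3 positives.
y_true = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]
scores = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.45, 0.35, 0.40, 0.80]

prevalence = sum(y_true) / len(y_true)
print("average precision:", average_precision(y_true, scores))
print("positive fraction:", prevalence)
```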

Precision, recall, and F1-score are also commonly used evaluation metrics for imbalanced data. Precision measures the proportion of true positives among all predicted positives, while recall measures the proportion of true positives among all actual positives. F1-score is the harmonic mean of precision and recall.
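These three definitions translate directly into code once the confusion-matrix counts are known. The sketch below is a plain-Python illustration on made-up predictions. The helper names are assumptions, and returning 0.0 when a denominator is empty is one convention among several.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for the chosen positive class."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and their harmonic mean (F1) for the positive class."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred, positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy predictions (assumed): 4 actual positives, 6 actual negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
print(confusion_counts(y_true, y_pred))
print(precision_recall_f1(y_true, y_pred))
```

Here two of the three predicted positives are correct (precision 2/3) and two of the four actual positives are found (recall 1/2), giving an F1-score of 4/7.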

It is important to note that the choice of evaluation metric should be based on the specific problem and goals of the analysis. For example, if the goal is to identify all positive cases, recall may be a more important metric than precision. On the other hand, if the goal is to minimize false positives, precision may be a more important metric than recall.

Overall, selecting appropriate evaluation metrics for imbalanced data is crucial for accurately assessing the performance of classification models.

Challenges in Tackling Imbalanced Data

Handling imbalanced data is a challenging task in machine learning. Imbalanced data refers to a situation where the number of instances in one class is significantly lower than in the other class. This problem is common in real-world applications such as fraud detection, medical diagnosis, and credit scoring. In such cases, the minority class often contains valuable information that the model needs to learn, but it is difficult to capture due to the class imbalance.

One of the significant challenges in tackling imbalanced data is that traditional machine learning algorithms are biased towards the majority class. This bias leads to poor performance in predicting the minority class instances. Consequently, the model’s accuracy is skewed towards the majority class, leading to a high false-negative rate and low recall.

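Another challenge is that the minority class instances are often noisy and difficult to distinguish from the majority class instances. As a result, the model may confuse the two classes in either direction. With the minority class treated as the positive class, minority instances labeled as the majority class are false negatives, and majority instances labeled as the minority class are false positives.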

Moreover, the imbalance in data distribution affects the model’s ability to generalize to new data. The model may overfit to the majority class instances, leading to poor performance on the minority class instances. This problem is particularly severe when the minority class instances are rare and difficult to obtain.

To overcome these challenges, various techniques have been proposed, such as resampling, cost-sensitive learning, and ensemble methods. These techniques aim to balance the data distribution and improve the model’s performance on the minority class instances. However, each technique has its limitations and requires careful consideration before application.

In summary, tackling imbalanced data is a challenging task that requires careful consideration of the data distribution and the model’s performance on the minority class instances. The success of the model depends on the appropriate selection and application of the techniques that balance the data distribution and improve the model’s performance.

Conclusion

In conclusion, handling class imbalance is a crucial aspect of machine learning. Imbalanced data sets can lead to biased models and poor predictions, which can have severe consequences in real-world applications.

There are several strategies for tackling class imbalance, including resampling methods, cost-sensitive models, threshold tuning, and relabeling approaches. Each of these strategies has its own advantages and disadvantages, and the choice of approach depends on the specific problem and data set.

Resampling methods, such as oversampling and undersampling, are the most popular strategies for handling class imbalance. They involve either duplicating instances from the minority class (oversampling) or removing instances from the majority class (undersampling) to balance the data set. However, these methods can lead to overfitting or underfitting, and they do not always improve model performance.

Cost-sensitive models, on the other hand, assign different costs to misclassifying instances from the minority and majority classes. This approach can be effective when the cost of misclassification is not equal between classes, but it requires careful cost assignment and may not work well with complex models.

Threshold tuning involves adjusting the classification threshold to improve performance on the minority class. This approach can be effective when the cost of false negatives is high, but it may not work well with imbalanced data sets with a large number of classes.

Relabeling approaches involve creating pseudo-classes from the minority class to balance the data set. This approach can be effective when there is a cluster structure in the minority class, but it requires careful selection of the number of pseudo-classes and may not work well with non-linear models.

Overall, there is no one-size-fits-all solution for handling class imbalance, and the choice of approach depends on the specific problem and data set. It is important to carefully evaluate the performance of different strategies and select the one that works best for the problem at hand.

Frequently Asked Questions

How can you identify if a dataset is imbalanced?

A dataset is considered imbalanced if one class has significantly more samples than the other. To identify if a dataset is imbalanced, you can calculate the class distribution of the dataset. If one class has a much higher percentage of samples than the other, then the dataset is likely imbalanced.
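Checking the class distribution takes only a few lines. The sketch below reports each class's share and the ratio between the largest and smallest class. The labels are made up, the helper names are hypothetical, and what counts as "significantly more" is a judgment call that depends on the problem.

```python
from collections import Counter


def class_distribution(y):
    """Fraction of examples belonging to each class."""
    counts = Counter(y)
    return {label: count / len(y) for label, count in counts.items()}


def imbalance_ratio(y):
    """Size of the largest class divided by the size of the smallest."""
    counts = Counter(y)
    return max(counts.values()) / min(counts.values())


# Toy labels (assumed): 950 legitimate transactions and 50 fraudulent ones.
y = ["legit"] * 950 + ["fraud"] * 50
print(class_distribution(y))
print(imbalance_ratio(y))
```

In this example the minority class makes up 5% of the data, a 19-to-1 imbalance.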

What are the consequences of using imbalanced data for classification?

Using imbalanced data for classification can lead to models that are biased towards the majority class and perform poorly on the minority class. This shows up mainly as false negatives, where the model fails to identify instances of the minority class. False positives, where the model incorrectly labels majority class instances as the minority class, can also occur, particularly after the model has been pushed to favor the minority class.

What are some common techniques for handling class imbalance?

There are several techniques for handling class imbalance, including undersampling, oversampling, and hybrid methods. Undersampling involves removing some samples from the majority class to balance the dataset. Oversampling involves duplicating some samples from the minority class to balance the dataset. Hybrid methods combine both undersampling and oversampling to balance the dataset.

How do you evaluate the performance of a model trained on imbalanced data?

When evaluating the performance of a model trained on imbalanced data, it is important to use metrics that are sensitive to the minority class. Metrics such as precision, recall, and F1-score are commonly used to evaluate the performance of models trained on imbalanced data.

Can data augmentation help with imbalanced datasets?

Data augmentation can help with imbalanced datasets by generating synthetic samples for the minority class. This can help to balance the dataset and improve the performance of the model on the minority class. However, it is important to ensure that the synthetic samples are realistic and representative of the minority class.
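One simple way to generate synthetic minority samples for numeric features is to interpolate between a minority point and one of its nearest minority neighbors, in the spirit of SMOTE. The sketch below is a simplified illustration of that idea, not the reference SMOTE algorithm. The function name, the parameter `k`, and the toy points are assumptions, and the approach needs at least two minority examples.

```python
import math
import random


def interpolate_minority(minority, n_new, k=3, seed=0):
    """Create n_new points, each on the segment between a minority point and a near neighbor."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # The k closest other minority points, by Euclidean distance.
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # position along the segment from base to neighbor
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
    return synthetic


# Toy minority class (assumed): four points at the corners of the unit square.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = interpolate_minority(minority, n_new=6)
for point in new_points:
    print(point)
```

Because every synthetic point lies between two real minority points, it stays inside the region the minority class already occupies. Whether such points are realistic still has to be checked against the data, especially for categorical or strongly constrained features.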

What are some limitations of using oversampling or undersampling to balance data?

Oversampling and undersampling can lead to overfitting and underfitting, respectively. Oversampling can also lead to the model learning from noisy or irrelevant samples, while undersampling can result in the loss of important information from the majority class. It is important to carefully select the appropriate technique for handling class imbalance based on the specific characteristics of the dataset and the problem at hand.