NearMiss is an undersampling technique for handling imbalanced data. Many real-world datasets are imbalanced: one class has far more samples than the other. Models trained on such data tend to be biased toward the larger class and perform poorly on the minority class.

To address this issue, various techniques have been developed to balance the class distribution, one of which is undersampling. Undersampling reduces the number of samples in the majority class, which can balance the class distribution and improve model performance on the minority class. NearMiss is one such undersampling technique: it keeps the majority-class samples that lie closest to the minority class, so the retained majority samples concentrate near the boundary between the two classes.

NearMiss can improve how well models trained on imbalanced data recognize the minority class. It is easy to implement and can be used in combination with other techniques such as oversampling. In the following article, we will explore NearMiss in more detail, discuss its advantages and limitations, and provide examples of how it can be used.

Imbalanced Data

Imbalanced data is a common problem in machine learning, where the distribution of classes in the dataset is not uniform. In some cases, one class may be significantly more prevalent than the others. This can lead to poor performance of machine learning models, as they tend to favor the majority class and ignore the minority class.
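
To make the bias toward the majority class concrete, here is a minimal sketch using made-up labels (95 majority samples, 5 minority samples) and a trivial "model" that always predicts the most frequent class. The data and variable names are assumptions for illustration only.

```python
from collections import Counter

# Hypothetical labels: 95 majority (0) and 5 minority (1) samples.
y_true = [0] * 95 + [1] * 5

# A trivial "model" that always predicts the most frequent class.
majority_label = Counter(y_true).most_common(1)[0][0]
y_pred = [majority_label] * len(y_true)

# Overall accuracy looks high because the majority class dominates.
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Recall on the minority class: the share of minority samples that were found.
minority_hits = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
minority_recall = minority_hits / y_true.count(1)

print(accuracy)         # 0.95
print(minority_recall)  # 0.0
```

The high accuracy hides the fact that not a single minority sample is detected, which is why accuracy alone is a poor guide on imbalanced data.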

Imbalanced data can occur in various domains, such as medical diagnosis, fraud detection, and anomaly detection. In these domains, the minority class is often the one of interest, as it represents the rare event that needs to be detected. Therefore, it is crucial to handle imbalanced data appropriately to achieve accurate and reliable results.

There are several methods for handling imbalanced data, including oversampling and undersampling techniques. Oversampling involves increasing the number of instances in the minority class, while undersampling involves reducing the number of instances in the majority class. In this article, we will focus on the NearMiss undersampling technique.

Undersampling is sometimes preferred over oversampling because training on a smaller dataset is cheaper and because it does not duplicate minority samples, which can encourage overfitting. However, undersampling can also result in the loss of valuable information from the majority class, which may lead to a less accurate model. Therefore, it is essential to choose an undersampling technique that balances the dataset while preserving the important information.

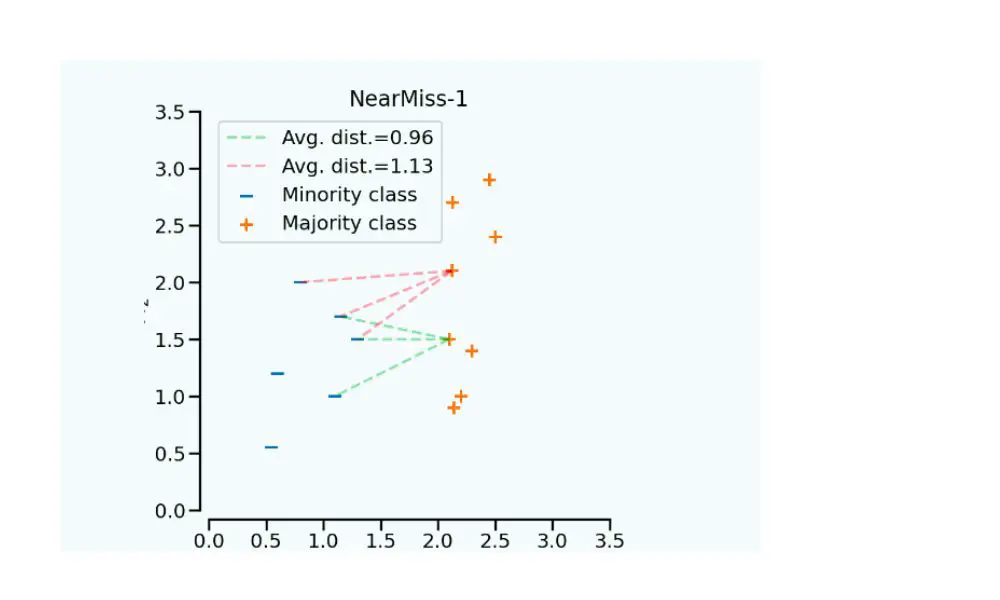

NearMiss is an undersampling technique that selects the instances from the majority class that are closest to the instances in the minority class. The idea is to keep the majority instances near the class boundary, which are the most informative for separating the classes, and to discard those that lie far from the minority class. NearMiss has three variations, namely NearMiss-1, NearMiss-2, and NearMiss-3, each with a different criterion for selecting the instances.

Overall, NearMiss is a useful technique for handling imbalanced data, as it can balance the dataset while preserving the important information. However, it is essential to choose the appropriate variation of NearMiss based on the dataset characteristics and the problem at hand.

NearMiss

NearMiss is a popular undersampling algorithm used for handling imbalanced data. It is a simple yet effective technique that reduces the number of majority class samples while retaining the most informative ones. The algorithm works by identifying the samples from the majority class that are closest to the minority class and keeping only a subset of them.

One of the main advantages of NearMiss is that it is easy to implement and computationally efficient. It also helps to reduce the risk of overfitting and can improve the performance of classifiers on imbalanced datasets. However, it may not work well in cases where the minority class is spread out over a large area of the feature space.

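There are three variants of NearMiss: NearMiss-1, NearMiss-2, and NearMiss-3. NearMiss-1 selects the majority-class samples whose average distance to their k nearest minority-class samples is smallest. NearMiss-2 selects the majority-class samples whose average distance to their k farthest minority-class samples is smallest. NearMiss-3 works in two steps: it first shortlists, for each minority-class sample, its nearest majority-class neighbors, and then keeps the shortlisted samples whose average distance to their k nearest minority-class samples is largest.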
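
The three selection rules can be sketched in a few lines of plain Python. The sketch uses the usual definitions: NearMiss-1 ranks majority points by mean distance to their k nearest minority points, NearMiss-2 by mean distance to their k farthest minority points, and NearMiss-3 shortlists the m nearest majority points of each minority point and then prefers the shortlisted points farthest from the minority class. The function names, the parameters `k` and `m`, and the toy points are assumptions for illustration, not a library API.

```python
import math

def mean_dist(point, others, k, farthest=False):
    """Mean Euclidean distance from `point` to its k nearest (or k farthest) points in `others`."""
    dists = sorted(math.dist(point, o) for o in others)
    chosen = dists[-k:] if farthest else dists[:k]
    return sum(chosen) / len(chosen)

def near_miss(majority, minority, n_keep, version=1, k=3, m=3):
    """Return n_keep majority points chosen by the given NearMiss variant (illustrative only)."""
    if version == 1:
        # Smallest mean distance to the k nearest minority points.
        ranked = sorted(majority, key=lambda p: mean_dist(p, minority, k))
    elif version == 2:
        # Smallest mean distance to the k farthest minority points.
        ranked = sorted(majority, key=lambda p: mean_dist(p, minority, k, farthest=True))
    elif version == 3:
        # Step 1: shortlist the m nearest majority points of each minority point.
        shortlist = {}
        for q in minority:
            for p in sorted(majority, key=lambda p: math.dist(p, q))[:m]:
                shortlist[p] = None  # dict keeps insertion order and drops duplicates
        # Step 2: prefer shortlisted points with the largest mean distance
        # to their k nearest minority points.
        ranked = sorted(shortlist, key=lambda p: mean_dist(p, minority, k), reverse=True)
    else:
        raise ValueError("version must be 1, 2, or 3")
    return ranked[:n_keep]

# Toy data: points are tuples so they can be used as dict keys.
minority = [(0.0, 0.0), (10.0, 0.0)]
majority = [(1.0, 0.0), (5.0, 0.0), (-2.0, 0.0), (13.0, 0.0)]

print(near_miss(majority, minority, n_keep=2, version=1, k=1))       # [(1.0, 0.0), (-2.0, 0.0)]
print(near_miss(majority, minority, n_keep=2, version=2, k=1))       # [(5.0, 0.0), (1.0, 0.0)]
print(near_miss(majority, minority, n_keep=1, version=3, k=1, m=1))  # [(13.0, 0.0)]
```

On this toy data the three variants keep different points: NearMiss-1 favors points hugging any minority sample, NearMiss-2 favors the point that is reasonably close to all minority samples, and NearMiss-3 keeps a shortlisted point that sits a little farther out.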

In addition to the three variants, NearMiss also has several parameters that can be tuned to improve its performance. These include the number of neighbors to consider when selecting samples, the distance metric used to measure the proximity between samples, and the sampling strategy used to determine the number of samples to keep.
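
The effect of these parameters can be seen in a small sketch of NearMiss-1 with a pluggable distance metric and a target class ratio. The parameter names `n_neighbors`, `metric`, and `ratio` are hypothetical stand-ins for the tunable settings described above, and `ratio` is assumed to mean the desired minority-to-majority size after resampling.

```python
import math

def euclidean(a, b):
    return math.dist(a, b)

def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))

def n_majority_to_keep(n_minority, n_majority, ratio=1.0):
    """Number of majority samples to keep so that minority/majority is about `ratio`."""
    return min(n_majority, round(n_minority / ratio))

def nearmiss1(majority, minority, n_neighbors=3, metric=euclidean, ratio=1.0):
    """NearMiss-1 sketch: keep majority points nearest (on average) to the minority class."""
    n_keep = n_majority_to_keep(len(minority), len(majority), ratio)

    def score(p):
        # Mean distance to the n_neighbors nearest minority points.
        d = sorted(metric(p, q) for q in minority)[:n_neighbors]
        return sum(d) / len(d)

    return sorted(majority, key=score)[:n_keep]

minority = [(0.0, 0.0)]
majority = [(3.0, 0.0), (2.0, 2.0), (5.0, 5.0)]

# The metric changes which majority point counts as "closest".
print(nearmiss1(majority, minority, n_neighbors=1, metric=euclidean))  # [(2.0, 2.0)]
print(nearmiss1(majority, minority, n_neighbors=1, metric=manhattan))  # [(3.0, 0.0)]

# A ratio of 0.5 keeps two majority samples per minority sample.
print(nearmiss1(majority, minority, n_neighbors=1, ratio=0.5))  # [(2.0, 2.0), (3.0, 0.0)]
```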

Overall, NearMiss is a useful technique for handling imbalanced data and can be combined with other methods like oversampling or ensemble methods to further improve the performance of classifiers.

Undersampling Techniques

Undersampling is a resampling technique that involves reducing the number of instances in the majority class to balance the distribution of classes in an imbalanced dataset. This technique is useful when the majority class has a much larger number of instances than the minority class, and the classifier is biased towards the majority class.

There are several undersampling techniques, including:

- Random undersampling: randomly selects instances from the majority class to create a balanced dataset. This technique may lead to the loss of useful information, especially if the dataset is small.

- Cluster centroids: identifies clusters of the majority class and replaces them with the centroids of these clusters. This technique preserves the original distribution of the majority class while reducing its size.

- Tomek’s links: identifies pairs of instances from different classes that are the nearest neighbors of each other and removes the majority class instance. This technique removes noisy instances from the majority class.

- NearMiss: selects instances from the majority class that are closest to the minority class instances. This technique keeps the majority instances near the class boundary while reducing the size of the majority class.
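
Two of the techniques in the list above, random undersampling and Tomek's links, are simple enough to sketch in plain Python. The function names, the fixed random seed, and the one-dimensional toy points are assumptions for illustration; a production implementation would use a proper nearest-neighbor search rather than the brute-force loop shown here.

```python
import math
import random

def random_undersample(majority, n_keep, seed=0):
    """Keep n_keep majority points chosen uniformly at random (without replacement)."""
    rng = random.Random(seed)
    return rng.sample(majority, n_keep)

def nearest(i, points):
    """Index of the point closest to points[i], excluding i itself."""
    others = (j for j in range(len(points)) if j != i)
    return min(others, key=lambda j: math.dist(points[i], points[j]))

def tomek_links(points, labels):
    """Pairs (i, j) that are mutual nearest neighbors and carry different labels."""
    links = []
    for i in range(len(points)):
        j = nearest(i, points)
        if i < j and nearest(j, points) == i and labels[i] != labels[j]:
            links.append((i, j))
    return links

def remove_tomek_majority(points, labels, majority_label):
    """Drop the majority-class member of every Tomek link."""
    drop = {idx for pair in tomek_links(points, labels)
            for idx in pair if labels[idx] == majority_label}
    keep = [i for i in range(len(points)) if i not in drop]
    return [points[i] for i in keep], [labels[i] for i in keep]

points = [(0.0,), (0.4,), (3.0,), (3.5,), (9.0,)]
labels = [0, 1, 0, 0, 1]  # 0 = majority, 1 = minority

print(tomek_links(points, labels))               # [(0, 1)]
print(remove_tomek_majority(points, labels, 0))  # ([(0.4,), (3.0,), (3.5,), (9.0,)], [1, 0, 0, 1])

majority_points = [p for p, l in zip(points, labels) if l == 0]
print(len(random_undersample(majority_points, n_keep=2)))  # 2
```

Note that Tomek's links only removes majority points that sit right next to a minority point, so on its own it rarely balances the classes; it is usually treated as a cleaning step.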

Each undersampling technique has its advantages and disadvantages, and the choice of technique depends on the specific problem and dataset. It is essential to evaluate the performance of the classifier on the undersampled dataset and compare it with the performance on the original imbalanced dataset.

Undersampling is a simple and effective technique for handling imbalanced data, but it may not always be the best solution. Other techniques, such as oversampling, may be more appropriate depending on the problem and dataset. It is crucial to explore different techniques and evaluate their performance before selecting the best approach for a specific problem.

Frequently Asked Questions

What is the NearMiss technique and how does it work?

NearMiss is an undersampling technique used to handle imbalanced data. Rather than removing majority-class samples at random, it ranks them by their distance to the minority class, keeps those that are closest, and discards the rest. Enough majority samples are retained to reach the desired class balance.

How does undersampling help with imbalanced data?

Undersampling helps with imbalanced data by reducing the number of samples in the majority class. This balances the dataset and allows the minority class to have a greater impact on the model. This can improve the model’s ability to correctly identify the minority class.

What are the limitations of using undersampling techniques like NearMiss?

One limitation of undersampling techniques like NearMiss is that they can discard important information from the majority class. This can result in a decrease in the overall accuracy of the model. Additionally, because the model is trained on fewer samples, it can overfit, becoming too specialized to the reduced training data.

Can NearMiss be used with other techniques like SMOTE?

Yes, NearMiss can be used with other techniques like SMOTE. This combination of oversampling and undersampling is known as a hybrid approach. The hybrid approach can improve the model’s ability to correctly identify both the minority and majority classes.
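
As a rough illustration of such a hybrid, the sketch below first adds synthetic minority points by interpolating between a minority sample and one of its nearest minority neighbors (the core idea behind SMOTE), then applies a NearMiss-1 style selection to the majority class. This is a simplified stand-in, not the full SMOTE algorithm; all function names, parameters, and data are hypothetical, and it assumes at least two distinct minority points.

```python
import math
import random

def smote_like(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points on segments between minority neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # The k nearest other minority points to the chosen base point.
        neighbors = sorted((p for p in minority if p != base),
                           key=lambda p: math.dist(base, p))[:k]
        other = rng.choice(neighbors)
        t = rng.random()  # position along the segment from base to other
        synthetic.append(tuple(b + t * (o - b) for b, o in zip(base, other)))
    return synthetic

def nearmiss1(majority, minority, n_keep, k=3):
    """Keep the n_keep majority points with the smallest mean distance
    to their k nearest minority points."""
    def score(p):
        d = sorted(math.dist(p, q) for q in minority)[:k]
        return sum(d) / len(d)
    return sorted(majority, key=score)[:n_keep]

minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
majority = [(float(x), float(y)) for x in range(2, 6) for y in range(2, 6)]  # 16 points

# Oversample the minority class from 3 to 6 points, then
# undersample the majority class from 16 to 6 points.
minority_aug = minority + smote_like(minority, n_new=3)
majority_red = nearmiss1(majority, minority_aug, n_keep=len(minority_aug))

print(len(minority_aug), len(majority_red))  # 6 6
```

Meeting in the middle like this discards fewer majority samples than undersampling alone and creates fewer synthetic samples than oversampling alone.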

What are some best practices for handling imbalanced datasets?

Some best practices for handling imbalanced datasets include using appropriate evaluation metrics, such as precision, recall, and F1 score, to evaluate model performance. Additionally, using cross-validation can help ensure that the model is not overfitting to the training data. Finally, using a combination of oversampling and undersampling techniques can improve the model’s ability to correctly identify both the minority and majority classes.
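
The metrics mentioned above can be computed directly from the counts of true positives, false positives, and false negatives. The sketch below treats the minority class as the positive class; the helper name and the sample predictions are made up for illustration.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall, and F1 score for the class labeled `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical results: 4 minority (1) and 6 majority (0) samples.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(round(precision, 3), round(recall, 3), round(f1, 3))  # 0.667 0.5 0.571
```

Here the model is 70% accurate overall, yet it finds only half of the minority samples, which the recall and F1 score make visible.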

Is there a performance tradeoff when using NearMiss compared to other techniques?

Yes, there can be a performance tradeoff when using NearMiss compared to other techniques. NearMiss can result in a decrease in the overall accuracy of the model, but it can improve the model’s ability to correctly identify the minority class. It is important to evaluate the tradeoff between accuracy and the ability to correctly identify the minority class when selecting a technique for handling imbalanced data.