In many real-world classification problems, the distribution of classes in the data is often imbalanced, meaning that one class has significantly fewer instances than the other(s). Class imbalance can pose challenges for machine learning algorithms, as they may struggle to accurately classify the minority class due to the bias towards the majority class. To mitigate this issue, cost-sensitive learning techniques, particularly the weighted approach, can be employed.

Cost-sensitive learning is a subfield of machine learning that takes the costs of different prediction errors into account during training. It is not limited to imbalanced data, but it is a natural fit for it: assigning different costs to different types of classification errors lets the algorithm prioritize the correct classification of the minority class.

There are various techniques used in cost-sensitive learning, including data resampling, algorithm modifications, and ensemble methods. Data resampling involves either oversampling the minority class or undersampling the majority class to balance the dataset. Algorithm modifications involve adjusting the algorithm’s decision boundary to prioritize the correct classification of the minority class. Ensemble methods involve combining multiple models to improve the overall performance of the algorithm.

One of the key challenges in cost-sensitive learning is determining the appropriate costs to assign to different types of classification errors. This requires a thorough understanding of the problem domain and the potential costs associated with misclassification. Additionally, there is a trade-off between the cost of misclassification and the overall accuracy of the algorithm, which must be carefully balanced to achieve optimal results.

Understanding Class Imbalance

Class imbalance is a common problem in machine learning, where the number of instances in one class is significantly higher than in the other. This problem is prevalent in many real-world applications, such as fraud detection, medical diagnosis, and network intrusion detection.

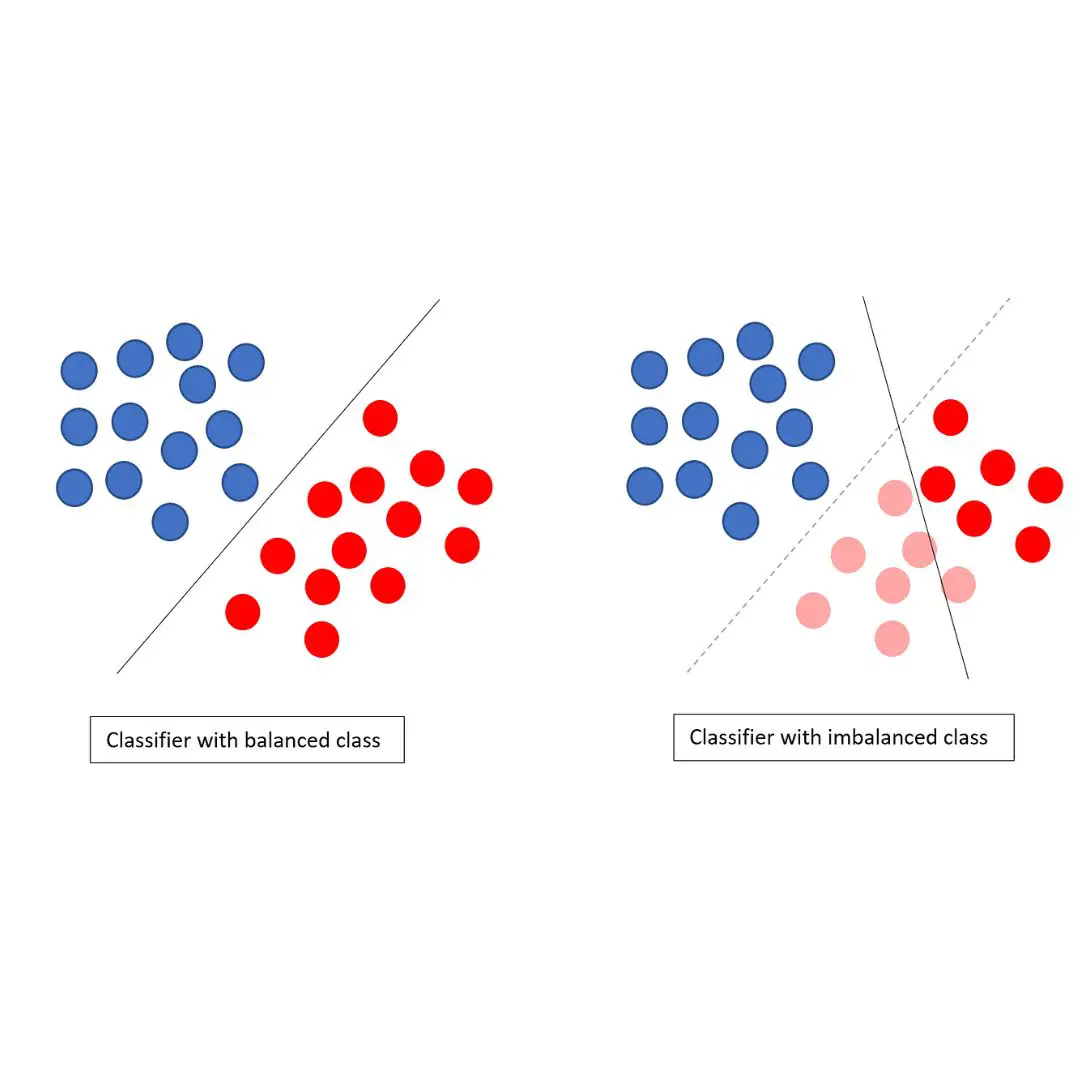

When the class distribution is imbalanced, standard machine learning algorithms that optimize overall accuracy tend to favor the majority class. As a result, the minority class is often misclassified. When the minority class is the positive class, this shows up as a high false negative rate.

For example, in a fraud detection scenario, the number of fraudulent transactions is much lower than the number of legitimate ones. If the model is not trained to handle this imbalance, it will classify most of the transactions as legitimate, leading to a high rate of false negatives, and the fraud will go undetected.
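The fraud scenario above can be made concrete with a small sketch. The numbers are hypothetical (1,000 transactions, 10 of them fraudulent), and the "model" is simply a rule that labels everything as legitimate. It shows why accuracy alone hides the problem.

```python
import numpy as np

# Hypothetical data: 1,000 transactions, 10 of them fraudulent (label 1).
y_true = np.zeros(1000, dtype=int)
y_true[:10] = 1

# A degenerate "model" that labels every transaction as legitimate (label 0).
y_pred = np.zeros_like(y_true)

accuracy = float(np.mean(y_pred == y_true))
false_negatives = int(np.sum((y_true == 1) & (y_pred == 0)))
recall = float(np.sum((y_true == 1) & (y_pred == 1)) / np.sum(y_true == 1))

print(f"accuracy={accuracy:.3f}, recall={recall:.3f}, false negatives={false_negatives}")
```

The accuracy is high even though every fraudulent transaction is missed, which is why the rest of this article focuses on error costs rather than error counts.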

To address this problem, cost-sensitive learning techniques can be used. These techniques assign different misclassification costs to different classes, allowing the model to focus on the minority class and reduce the false negative rate.

There are several ways to assign misclassification costs to different classes. One approach is to use domain expertise, where subject matter experts provide the costs based on the consequences of misclassification. Another approach is to use a hyperparameter search, where the costs are treated as hyperparameters and tuned against a validation set. A common starting heuristic is to weight each class by the inverse of its frequency in the training dataset, and then refine the weights with domain knowledge or tuning.
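As a minimal sketch of the inverse-frequency heuristic, the helper below (a hypothetical name, not part of any library) computes one weight per class as `n_samples / (n_classes * class_count)`. This is the same formula scikit-learn uses for its "balanced" class weights. The 90/10 label split is made up for illustration.

```python
import numpy as np

def inverse_frequency_weights(y):
    """Return {class_label: weight} with weight = n_samples / (n_classes * class_count)."""
    classes, counts = np.unique(y, return_counts=True)
    weights = len(y) / (len(classes) * counts)
    return {int(c): float(w) for c, w in zip(classes, weights)}

# Hypothetical labels: 90 majority-class (0) and 10 minority-class (1) instances.
y = np.array([0] * 90 + [1] * 10)
weights = inverse_frequency_weights(y)
print(weights)
```

With this scaling the rarer class receives the larger weight, and each class contributes the same total weight to the training objective.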

In the next section, we will discuss how cost-sensitive learning techniques can be used to address class imbalance and improve the performance of machine learning models.

Cost-sensitive Learning

What is Cost-sensitive Learning?

Cost-sensitive learning is a machine learning technique that involves explicitly defining and using costs when training machine learning algorithms. The goal of cost-sensitive learning is to minimize the total cost of misclassifications, rather than just minimizing the number of misclassifications.
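The difference between counting errors and costing them can be illustrated with a small sketch. The cost matrix, labels, and predictions below are all hypothetical, and the sketch assumes a false negative is ten times as costly as a false positive.

```python
import numpy as np

# Rows: true class, columns: predicted class (0 = negative, 1 = positive).
# Hypothetical costs: a false negative costs 10, a false positive costs 1.
cost_matrix = np.array([[0, 1],
                        [10, 0]])

def confusion_counts(y_true, y_pred, n_classes):
    """Count how often each (true class, predicted class) pair occurs."""
    counts = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        counts[t, p] += 1
    return counts

def total_cost(y_true, y_pred, cost_matrix):
    """Sum the cost of every prediction according to the cost matrix."""
    counts = confusion_counts(y_true, y_pred, cost_matrix.shape[0])
    return int(np.sum(counts * cost_matrix))

y_true = np.array([0, 0, 0, 0, 1, 1])
model_a = np.array([0, 0, 0, 0, 0, 1])  # one error, a false negative
model_b = np.array([1, 1, 0, 0, 1, 1])  # two errors, both false positives

errors_a = int(np.sum(model_a != y_true))
errors_b = int(np.sum(model_b != y_true))
cost_a = total_cost(y_true, model_a, cost_matrix)
cost_b = total_cost(y_true, model_b, cost_matrix)
print(f"model A: {errors_a} error(s), cost {cost_a}")
print(f"model B: {errors_b} error(s), cost {cost_b}")
```

Model A makes fewer mistakes but incurs the higher total cost, so a cost-sensitive criterion would prefer model B under these assumed costs.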

How Does Cost-sensitive Learning Work?

Cost-sensitive learning can be implemented in several ways, including data resampling, algorithm modifications, and ensemble methods. In data resampling, the dataset is modified to balance the class distribution. This can be done by oversampling the minority class, undersampling the majority class, or generating synthetic samples.
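A minimal sketch of random oversampling for a binary problem is shown below. The function name and toy data are illustrative, and every label other than `minority_label` is treated as the majority class. In practice, resampling is usually applied to the training split only, so that validation and test data keep the original distribution.

```python
import numpy as np

def random_oversample(X, y, minority_label, seed=0):
    """Duplicate randomly chosen minority rows until both groups have equal size."""
    rng = np.random.default_rng(seed)
    minority_idx = np.flatnonzero(y == minority_label)
    majority_idx = np.flatnonzero(y != minority_label)
    n_extra = max(len(majority_idx) - len(minority_idx), 0)
    # Sample with replacement, so individual minority rows may repeat.
    extra_idx = rng.choice(minority_idx, size=n_extra, replace=True)
    keep = np.concatenate([majority_idx, minority_idx, extra_idx])
    return X[keep], y[keep]

# Toy data: 8 majority rows and 2 minority rows.
X = np.arange(20).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)

X_balanced, y_balanced = random_oversample(X, y, minority_label=1)
print(np.bincount(y_balanced))
```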

Algorithm modifications involve adjusting the learning algorithm to account for the cost of misclassifications. For example, decision trees can be modified to consider the cost of each decision, and support vector machines can be adjusted to optimize for a weighted combination of accuracy and cost.

Ensemble methods involve combining multiple models to improve performance. In cost-sensitive learning, ensemble methods can be used to combine multiple models that are optimized for different cost matrices.

Overall, cost-sensitive learning is a powerful technique for addressing class imbalance in machine learning. By explicitly considering the cost of misclassifications, cost-sensitive learning can help improve the performance of machine learning algorithms in real-world applications.

Different Weights

What are Different Weights?

Assigning different weights to classes is one way to address class imbalance in cost-sensitive learning. The weights apply different penalties to different types of misclassifications, depending on the importance of each class. By doing so, the model can learn to prioritize the minority class and reduce the influence of the majority class on the training objective.

In cost-sensitive learning, different weights are often used in the loss function of the model. The loss function measures how far the model's predictions are from the true labels, and it is used to update the model’s parameters during training. By weighting the terms of the loss function per class, the model can learn to focus more on the minority class and less on the majority class.
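As a sketch of what weighting the loss can look like, the function below implements a class-weighted binary cross-entropy in NumPy. The function name, the weight of 5 for the positive class, and the toy predictions are assumptions made for illustration.

```python
import numpy as np

def weighted_bce(y_true, p_pred, w_pos=1.0, w_neg=1.0, eps=1e-12):
    """Binary cross-entropy where positive and negative examples carry separate weights."""
    p = np.clip(p_pred, eps, 1 - eps)  # avoid log(0)
    per_example = -(w_pos * y_true * np.log(p)
                    + w_neg * (1 - y_true) * np.log(1 - p))
    return float(np.mean(per_example))

y_true = np.array([1, 0, 0, 0])
p_pred = np.array([0.2, 0.1, 0.1, 0.1])  # the single positive is badly under-predicted

unweighted = weighted_bce(y_true, p_pred)
weighted = weighted_bce(y_true, p_pred, w_pos=5.0)
print(f"unweighted loss={unweighted:.4f}, weighted loss={weighted:.4f}")
```

With the larger positive weight, the missed minority example dominates the loss, so gradient updates push harder toward fixing it.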

How to Determine Different Weights?

Determining the right weights for different classes can be challenging, as it requires a good understanding of the problem and the costs associated with different types of misclassifications. There are several methods that can be used to determine the weights, including:

- Equal weights: Assigning equal weights to all classes is the simplest approach, but it may not be appropriate for imbalanced datasets.

- Inverse frequency: Assigning weights that are inversely proportional to the frequency of each class in the dataset. This approach gives more weight to the minority class and less weight to the majority class.

- Cost matrix: Creating a cost matrix that specifies the cost of each type of misclassification. This approach allows for assigning different costs to different types of errors, depending on the specific problem.

- Expert knowledge: Consulting with domain experts to determine the appropriate weights based on their knowledge of the problem and the costs associated with different types of misclassifications.

In practice, a combination of these methods may be used to determine the weights. It is important to evaluate the performance of the model using different weights and select the weights that result in the best performance on the validation set.
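One way to compare candidate weights on a validation set is sketched below. It assumes scikit-learn is installed, uses a synthetic dataset in place of real data, and scores each candidate with hypothetical costs of 10 per false negative and 1 per false positive. The candidate list and the choice of logistic regression are arbitrary.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic imbalanced data (roughly 5% positives); stands in for a real dataset.
X, y = make_classification(n_samples=2000, n_features=10, weights=[0.95, 0.05],
                           random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0)

# Hypothetical business costs used to score each candidate on the validation set.
COST_FN, COST_FP = 10.0, 1.0

def validation_cost(model, X_val, y_val):
    """Total misclassification cost of the model's validation predictions."""
    pred = model.predict(X_val)
    fn = np.sum((y_val == 1) & (pred == 0))
    fp = np.sum((y_val == 0) & (pred == 1))
    return float(COST_FN * fn + COST_FP * fp)

candidates = [1, 2, 5, 10, 20]  # candidate weights for the minority class
results = {}
for w in candidates:
    model = LogisticRegression(class_weight={0: 1, 1: w}, max_iter=1000)
    model.fit(X_train, y_train)
    results[w] = validation_cost(model, X_val, y_val)

best_weight = min(results, key=results.get)
print(results, "best minority weight:", best_weight)
```

The selected weight depends on the data and on the assumed costs, so the final model should still be checked on a separate test set.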

Benefits of Cost-sensitive Learning

Cost-sensitive learning takes the costs of prediction errors into account when training a model, which makes it particularly useful for imbalanced datasets where one class has far more instances than the other. Here are some benefits of using cost-sensitive learning:

Improved Performance

Cost-sensitive learning can improve the performance of a machine learning model by reducing the number of false positives or false negatives. By assigning different costs to different types of misclassifications, the model can learn to prioritize correctly classifying the minority class, which is often the class of interest.

Better Utilization of Data

Cost-sensitive learning can make better use of the available data by reducing the need for oversampling or undersampling. Undersampling discards majority-class instances and can lose valuable information, while oversampling duplicates minority-class instances and can lead to overfitting. Cost-sensitive learning instead keeps every original instance and changes only how much each error counts during training.

Flexibility

Cost-sensitive learning is a flexible technique that can be used with a variety of machine learning algorithms. It can also be used with different types of costs, such as asymmetric costs, misclassification costs, or application-specific costs. This flexibility makes cost-sensitive learning a versatile tool that can be used in a variety of applications.

Cost Reduction

Cost-sensitive learning can help to reduce costs associated with misclassification errors. By prioritizing correctly classifying the minority class, cost-sensitive learning can help to reduce the number of false negatives, which can be costly in certain applications such as medical diagnosis or fraud detection.

In summary, cost-sensitive learning is a valuable technique for addressing the class imbalance problem in machine learning. It can improve performance, better utilize data, provide flexibility, and reduce costs associated with misclassification errors.

Challenges of Cost-sensitive Learning

Cost-sensitive learning is particularly useful for imbalanced datasets, where one class has significantly more instances than the other. However, several challenges need to be addressed for the technique to be effective.

One of the main challenges of cost-sensitive learning is the selection of appropriate misclassification costs. The misclassification costs are used to weigh the importance of correctly classifying instances in each class. If the misclassification costs are not well-defined, the algorithm may produce biased results, leading to poor performance. Therefore, selecting appropriate misclassification costs is critical to the success of cost-sensitive learning.

Another challenge of cost-sensitive learning is the selection of an appropriate algorithm. Different algorithms may perform differently on imbalanced datasets, and the choice of algorithm may affect the performance of the model. Moreover, some algorithms may require more computational resources than others, which may limit their applicability in certain situations.

Furthermore, cost-sensitive learning does not remove the need for enough minority-class examples. Under extreme imbalance, heavily weighting a handful of minority instances can make the classifier overfit to them, and performance may still degrade. In such cases it can be worth evaluating cost weighting in combination with resampling rather than relying on either alone.

In addition to these challenges, cost-sensitive learning may need more data to reach the desired level of performance. The costs themselves are specified rather than learned, but a heavily weighted minority class with only a few examples gives a noisy training signal, and tuning the costs requires a validation set with enough minority instances to compare candidates reliably.

Overall, while cost-sensitive learning is a powerful technique for addressing class imbalance, it is not without its challenges. Addressing these challenges is critical to the success of cost-sensitive learning and improving its effectiveness in real-world applications.

Frequently Asked Questions

What are some common methods for addressing class imbalance in machine learning?

Class imbalance is a common problem in machine learning. Some common methods for addressing class imbalance include data resampling, algorithm modifications, and ensemble methods. Data resampling techniques include oversampling the minority class, undersampling the majority class, and generating synthetic data. Algorithm modifications include modifying the decision threshold and using cost-sensitive learning. Ensemble methods include bagging and boosting.

What is cost-sensitive learning and how does it help with class imbalance?

Cost-sensitive learning is a type of machine learning that takes into account the cost of misclassification. In cost-sensitive learning, misclassification costs are explicitly defined and incorporated into the learning process. This approach helps to address class imbalance by assigning higher misclassification costs to the minority class, which encourages the classifier to focus more on correctly classifying the minority class.

How can misclassification cost formulas be used in cost-sensitive learning?

Misclassification cost formulas can be used in cost-sensitive learning to assign different costs to different types of errors. For example, a false negative error may be assigned a higher cost than a false positive error. These formulas can be incorporated into the learning process by modifying the loss function used in the algorithm.
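Costs can also be applied at prediction time. The sketch below assumes a binary problem, correct predictions that cost nothing, and reasonably calibrated predicted probabilities. Under those assumptions, predicting the positive class minimizes expected cost whenever the probability exceeds `cost_fp / (cost_fp + cost_fn)`. The function names and the cost values are hypothetical.

```python
import numpy as np

def cost_optimal_threshold(cost_fp, cost_fn):
    """Probability above which predicting 'positive' has the lower expected cost."""
    return cost_fp / (cost_fp + cost_fn)

def predict_min_expected_cost(p_positive, cost_fp, cost_fn):
    """Pick, per example, the label with the smaller expected misclassification cost."""
    p = np.asarray(p_positive, dtype=float)
    expected_cost_if_negative = p * cost_fn          # risk of missing a true positive
    expected_cost_if_positive = (1.0 - p) * cost_fp  # risk of raising a false alarm
    return (expected_cost_if_positive < expected_cost_if_negative).astype(int)

probs = [0.05, 0.08, 0.30, 0.60]
threshold = cost_optimal_threshold(cost_fp=1.0, cost_fn=9.0)
preds = predict_min_expected_cost(probs, cost_fp=1.0, cost_fn=9.0)
print(f"threshold={threshold:.2f}, predictions={preds.tolist()}")
```

When false negatives are far more expensive than false positives, the threshold drops well below 0.5, so examples with modest predicted probabilities are still flagged as positive.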

What are some performance analysis techniques for cost-sensitive learning on imbalanced medical data?

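Performance analysis techniques for cost-sensitive learning on imbalanced medical data include precision-recall curves, receiver operating characteristic (ROC) curves, and area under the curve (AUC) analysis. Under heavy imbalance, ROC curves can look optimistic because the false positive rate is computed over the large majority class, so precision-recall curves are often the more informative view of minority-class performance. These techniques can help to evaluate the performance of a classifier on imbalanced medical data and compare the performance of different classifiers.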
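As a small sketch of these metrics, the snippet below computes the ROC AUC and the average precision (a summary of the precision-recall curve) with scikit-learn, which is assumed to be installed. The labels and scores are made up: ten cases, two of them positive.

```python
import numpy as np
from sklearn.metrics import average_precision_score, roc_auc_score

# Hypothetical scores for 8 negative cases followed by 2 positive cases.
y_true = np.array([0, 0, 0, 0, 0, 0, 0, 0, 1, 1])
scores = np.array([0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.75, 0.9])

roc_auc = roc_auc_score(y_true, scores)
avg_precision = average_precision_score(y_true, scores)
print(f"ROC AUC={roc_auc:.4f}, average precision={avg_precision:.4f}")
```

A single negative case ranked above one of the positives lowers the average precision more than the ROC AUC here, which illustrates why the two views can disagree on imbalanced data.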

How can class imbalance be reduced in neural networks?

Class imbalance can be reduced in neural networks by using data resampling techniques, modifying the loss function, and using cost-sensitive learning. Data resampling techniques include oversampling the minority class, undersampling the majority class, and generating synthetic data. Modifying the loss function and using cost-sensitive learning can help to assign higher misclassification costs to the minority class, which encourages the classifier to focus more on correctly classifying the minority class.

What are some examples of cost-sensitive learning in Python and Keras?

Some examples of cost-sensitive learning in Python and Keras include passing the class_weight argument to the fit() method of a Keras model, and setting the class_weight parameter when constructing a scikit-learn LogisticRegression model. scikit-learn estimators such as LogisticRegression also accept per-example weights through the sample_weight argument of fit(). These parameters allow the user to specify a weight for each class (or each example), which acts as its misclassification cost and incorporates cost-sensitive learning into the learning process.
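A minimal scikit-learn sketch of both options is shown below. It assumes scikit-learn is installed and uses a synthetic dataset. The weight of 9 for the minority class is an arbitrary illustrative value, roughly the inverse of the class ratio.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic data with roughly 10% positives; stands in for a real dataset.
X, y = make_classification(n_samples=1000, n_features=8, weights=[0.9, 0.1],
                           random_state=0)

# Option 1: per-class weights set on the estimator.
clf_class = LogisticRegression(class_weight={0: 1.0, 1: 9.0}, max_iter=1000).fit(X, y)

# Option 2: per-example weights passed to fit(); here they mirror the class weights.
sample_weight = np.where(y == 1, 9.0, 1.0)
clf_sample = LogisticRegression(max_iter=1000).fit(X, y, sample_weight=sample_weight)

# Unweighted baseline for comparison.
baseline = LogisticRegression(max_iter=1000).fit(X, y)

n_pos_baseline = int(baseline.predict(X).sum())
n_pos_weighted = int(clf_class.predict(X).sum())
print("predicted positives, baseline:", n_pos_baseline)
print("predicted positives, weighted:", n_pos_weighted)
```

Per-example weights are the more general option, since they also allow costs that vary from one instance to another, for example by transaction amount.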