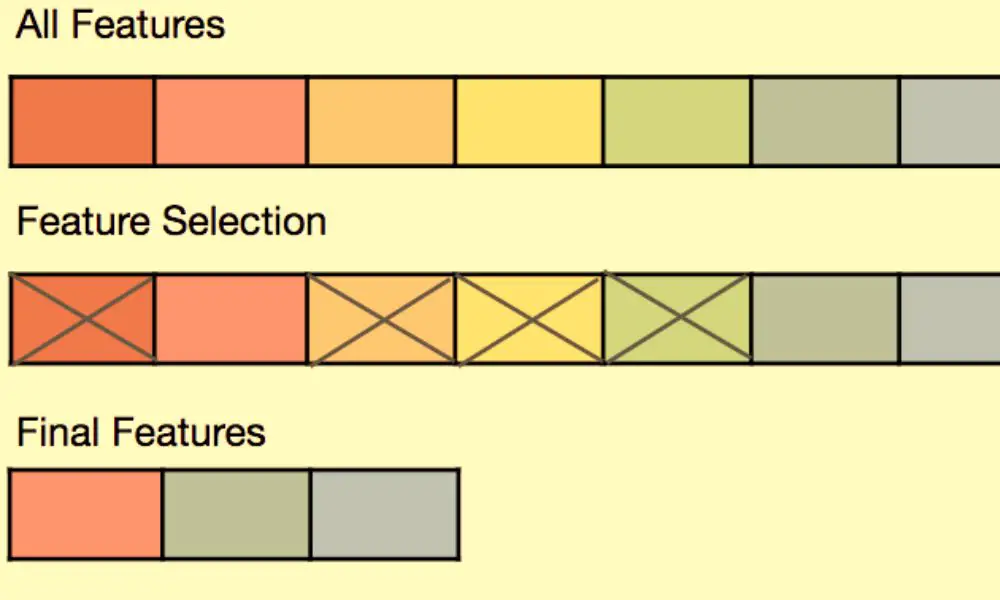

Feature selection is a crucial step in data science that involves selecting the most relevant features from a dataset to improve model performance and interpretability. It is desirable to reduce the number of input variables to both reduce the computational cost of modeling and, in some cases, to improve the performance of the model.

A common approach for feature selection is to examine the variable importance scores for a machine learning model, as a way to understand which features are the most relevant for making predictions. Given the significance of feature selection, it is crucial for the calculated importance scores to reflect reality. However, there are several challenges associated with feature selection, including the selection of an appropriate method, the choice of relevant features, and the potential tradeoff between model performance and interpretability.

In this article, we will explore different feature selection methods and their impact on model performance and interpretability. We will discuss the advantages and disadvantages of each method and provide practical guidance on how to choose the best method for a given problem. Additionally, we will examine the importance of interpretability in machine learning models and how feature selection can enhance it. By the end of this article, readers will have a better understanding of feature selection methods and their role in improving model performance and interpretability.

What are Feature Selection Methods?

Feature selection is a crucial step in developing a predictive model. It involves identifying the most relevant input variables that contribute to the accuracy of the model while discarding irrelevant or redundant variables. Feature selection methods aim to reduce the computational cost of modeling and improve the performance of the model.

There are several types of feature selection methods, including filter, wrapper, and embedded methods. Filter methods evaluate the relevance of features based on statistical measures, such as correlation coefficients or mutual information. Wrapper methods select features based on the performance of a specific machine learning algorithm. Embedded methods incorporate feature selection into the model building process.

Feature selection methods provide several benefits, such as:

- Reducing the dimensionality of the data, which can improve the performance of the model and reduce overfitting.

- Enhancing the interpretability of the model by identifying the most important input variables.

- Saving computational resources by reducing the number of input variables.

However, feature selection methods also have some limitations. They may overlook important features that are not highly correlated with the target variable or may select redundant features. Therefore, it is essential to carefully evaluate the performance of feature selection methods and select the most appropriate method for a specific problem.

Why Use Feature Selection Methods?

In machine learning, feature selection methods are used to identify and select the most relevant features from a dataset. These methods help to reduce the dimensionality of the data, which in turn enhances the model’s performance and interpretability.

There are several reasons why feature selection methods are important in machine learning. First, they help to improve the accuracy of the model by reducing the noise and irrelevant information in the dataset. This is particularly important when dealing with high-dimensional data, where the number of features can be very large compared to the number of observations.

Second, feature selection methods help to reduce overfitting, which occurs when a model is too complex and fits the noise in the data rather than the underlying patterns. By selecting only the most relevant features, the model is less likely to overfit and can generalize better to new data.

Third, feature selection methods can help to improve the interpretability of the model by identifying the most important features and their relationships with the target variable. This is particularly important in applications where understanding the underlying factors that drive the predictions is important, such as in healthcare or finance.

Overall, feature selection methods are an essential tool in machine learning that can help to improve the accuracy, generalization, and interpretability of models. By selecting only the most relevant features, these methods can reduce the noise and irrelevant information in the data, leading to more accurate and interpretable models.

There are various feature selection methods available, each with its own advantages and considerations. Here are some commonly used techniques:

- Univariate Selection: This method scores each feature on its individual relationship with the target variable, typically using a statistical test such as chi-square (categorical features against a categorical target) or the analysis of variance (ANOVA) F-test (continuous features against a categorical target). Features with the highest scores, or with p-values below a chosen threshold, are selected. Univariate selection is simple and computationally efficient, but it doesn’t consider feature interactions or redundancy between features.

- Recursive Feature Elimination (RFE): RFE is an iterative method that starts with all features and progressively eliminates the least important ones. It trains the model, evaluates feature importance, and removes the least important feature (or a fixed number of features) in each iteration until a predefined number of features remains. RFE works well with models that provide feature importance or weight rankings, such as decision trees or linear support vector machines.

- Regularization Methods: Regularization techniques, such as Lasso (Least Absolute Shrinkage and Selection Operator) and Ridge regression, add a penalty term to the training objective that shrinks coefficients. Lasso uses an L1 penalty, which can drive the coefficients of irrelevant features exactly to zero, so it performs both feature selection and coefficient shrinkage. Ridge regression uses an L2 penalty, which shrinks coefficients toward zero but rarely makes them exactly zero, so on its own it does not select features. Lasso is effective on high-dimensional datasets and can yield sparse models that keep only the most informative features.

- Feature Importance from Tree-based Models: Tree-based models like decision trees and random forests provide a measure of feature importance, usually based on how much each feature reduces impurity (for example, Gini impurity or variance) across the splits that use it. Features with higher importance scores are considered more relevant and can be selected for the final model. Impurity-based scores can be biased toward features with many distinct values, so random forests are also often paired with a permutation-based importance measure, which assesses the decrease in model performance when the values of a particular feature are randomly permuted.

- Forward/Backward Stepwise Selection: Stepwise selection methods iteratively add or remove features from the model based on statistical criteria, such as p-values or information criteria like AIC (Akaike Information Criterion) or BIC (Bayesian Information Criterion). Forward selection starts with an empty model and adds the best-performing features, while backward elimination begins with a full model and progressively removes the least significant features. These methods can be computationally intensive but can yield good results with appropriate stopping criteria.
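As a rough illustration of the first two techniques in the list, the sketch below runs univariate selection and RFE on synthetic data. It assumes scikit-learn is available; the dataset, the choice of logistic regression as the RFE estimator, and the variable names are illustrative assumptions, not part of the article.

```python
import numpy as np
from sklearn.feature_selection import RFE, SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression

# Synthetic data (an assumption for illustration): only columns 0 and 1
# drive the label, columns 2-5 are pure noise.
rng = np.random.default_rng(0)
n_samples = 300
X = rng.normal(size=(n_samples, 6))
signal = 2.0 * X[:, 0] - 2.0 * X[:, 1] + 0.3 * rng.normal(size=n_samples)
y = (signal > 0).astype(int)

# Univariate selection: score each feature on its own with the ANOVA F-test
# and keep the two highest-scoring features.
univariate = SelectKBest(score_func=f_classif, k=2).fit(X, y)
univariate_idx = sorted(univariate.get_support(indices=True).tolist())

# RFE: refit the model repeatedly, dropping the weakest feature each round
# until two features remain.
rfe = RFE(estimator=LogisticRegression(), n_features_to_select=2, step=1).fit(X, y)
rfe_idx = sorted(np.flatnonzero(rfe.support_).tolist())

print("univariate selection kept:", univariate_idx)
print("RFE kept:", rfe_idx)
```

On data this clean both approaches should agree; on real data they often differ, because univariate tests ignore how features behave together while RFE depends on the chosen estimator.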

When selecting features, it’s essential to consider the domain knowledge, the problem at hand, and the limitations of the data. Feature selection should be performed on a training set to avoid information leakage and then applied consistently to the validation and test sets. It’s also important to evaluate the impact of feature selection on model performance using appropriate evaluation metrics and techniques such as cross-validation.
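One way to keep selection inside the training data is to wrap the selector and the model in a single pipeline, so that cross-validation refits both on every training fold. The sketch below assumes scikit-learn; the synthetic data, `k=5`, and the step names `"select"` and `"model"` are illustrative choices.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline

# Synthetic data (an assumption for illustration): 20 features, of which
# only the first two determine the label.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 20))
y = (X[:, 0] + X[:, 1] > 0).astype(int)

pipeline = Pipeline([
    ("select", SelectKBest(score_func=f_classif, k=5)),
    ("model", LogisticRegression()),
])

# cross_val_score clones and refits the whole pipeline, selector included,
# on each training fold, so the held-out fold never influences which
# features are kept.
scores = cross_val_score(pipeline, X, y, cv=5, scoring="accuracy")
print("mean cross-validated accuracy:", scores.mean())
```

Running the selector on the full dataset first and cross-validating only the model would let the held-out folds influence the choice of features, which tends to make the reported scores too optimistic.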

In summary, feature selection methods play a vital role in enhancing model performance and interpretability. They help identify the most relevant features, reduce overfitting, improve computational efficiency, and enable a more focused interpretation of the model’s predictions. By carefully selecting the right set of features, data scientists can build more robust and understandable models that generalize well to unseen data.

Types of Feature Selection Methods

There are various types of feature selection methods that can be used to enhance model performance and interpretability. In this section, we will discuss three main types of feature selection methods: Filter Methods, Wrapper Methods, and Embedded Methods.

Filter Methods

Filter methods are one of the most commonly used feature selection methods. They involve selecting features based on some statistical measure, such as correlation or mutual information, without considering the performance of the model. Filter methods are computationally efficient and can handle high-dimensional data. However, they do not take into account the interaction between features and may lead to suboptimal feature subsets.
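A minimal sketch of a filter method, assuming NumPy and a continuous target: each feature is ranked by the absolute value of its Pearson correlation with the target, with no model involved. The function name `correlation_filter` and the synthetic data are hypothetical.

```python
import numpy as np

def correlation_filter(X, y, k):
    """Return the indices of the k features with the largest |Pearson r| against y.

    Assumes no column of X is constant (a constant column would give a
    zero denominator).
    """
    X_centered = X - X.mean(axis=0)
    y_centered = y - y.mean()
    numerator = X_centered.T @ y_centered
    denominator = np.sqrt((X_centered ** 2).sum(axis=0) * (y_centered ** 2).sum())
    r = numerator / denominator
    top_k = np.argsort(-np.abs(r))[:k]
    return np.sort(top_k), r

# Synthetic data (an assumption for illustration): the target depends on
# columns 1 and 3 only.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 5))
y = 3.0 * X[:, 1] - 2.0 * X[:, 3] + rng.normal(scale=0.5, size=500)

selected, r = correlation_filter(X, y, k=2)
print("selected features:", selected)
print("correlations:", np.round(r, 2))
```

Because each feature is scored in isolation, a filter like this would miss a feature that matters only in combination with another one.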

Wrapper Methods

Wrapper methods select features by evaluating the performance of the model on different subsets of features. They train a specific learning algorithm on each candidate subset and keep the subset that performs best. Because every candidate is scored by the model itself, wrapper methods can capture interactions between features that filter methods miss, and they often find better-performing subsets. However, they are computationally expensive, and searching over many subsets can overfit the selection to the evaluation data unless performance is measured with cross-validation or a held-out set.
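The idea can be sketched as a greedy forward search that scores each candidate subset by training a model on it. This is a simplified illustration, assuming NumPy, ordinary least squares as the learning algorithm, and a single held-out validation split; the helper names `validation_mse` and `forward_selection` are hypothetical.

```python
import numpy as np

def validation_mse(X_train, y_train, X_val, y_val, features):
    """Fit ordinary least squares on the chosen columns and score on held-out data."""
    A_train = np.column_stack([np.ones(len(X_train)), X_train[:, features]])
    A_val = np.column_stack([np.ones(len(X_val)), X_val[:, features]])
    coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    return float(np.mean((A_val @ coef - y_val) ** 2))

def forward_selection(X_train, y_train, X_val, y_val, max_features):
    """Greedily add the feature that most reduces validation MSE."""
    selected = []
    remaining = list(range(X_train.shape[1]))
    best_score = float("inf")
    while remaining and len(selected) < max_features:
        scores = {
            j: validation_mse(X_train, y_train, X_val, y_val, selected + [j])
            for j in remaining
        }
        candidate = min(scores, key=scores.get)
        if scores[candidate] >= best_score:
            break  # no remaining feature improves the held-out error
        best_score = scores[candidate]
        selected.append(candidate)
        remaining.remove(candidate)
    return selected, best_score

# Synthetic data (an assumption for illustration): the target depends on
# columns 0 and 2 only.
rng = np.random.default_rng(0)
X = rng.normal(size=(400, 6))
y = 4.0 * X[:, 0] + 2.0 * X[:, 2] + rng.normal(scale=0.5, size=400)
X_train, X_val = X[:300], X[300:]
y_train, y_val = y[:300], y[300:]

selected, best_score = forward_selection(X_train, y_train, X_val, y_val, max_features=2)
print("selected features (in order added):", selected)
print("validation MSE:", round(best_score, 3))
```

Each round trains one model per remaining feature, which is where the computational cost of wrapper methods comes from.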

Embedded Methods

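Embedded methods select features as part of the model training process. They sit between filter and wrapper methods: like wrapper methods they account for the model being trained, but they need only a single training run rather than one per candidate subset. Examples include Lasso regularization and tree-based feature importance. Embedded methods are computationally efficient and can handle high-dimensional data. Tree-based variants can capture non-linear interactions between features, and regularization-based variants help limit overfitting. However, the selected features are specific to the model used and may not always be the optimal subset.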
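As one example of an embedded method, the sketch below fits a Lasso model and reads the selected features off its non-zero coefficients. It assumes scikit-learn; the synthetic data and the penalty strength `alpha=0.5` are illustrative values that would normally be tuned, for instance by cross-validation.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler

# Synthetic data (an assumption for illustration): the target depends on
# columns 0 and 4 only.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 8))
y = 5.0 * X[:, 0] - 3.0 * X[:, 4] + rng.normal(scale=0.5, size=300)

# Standardize first so the penalty treats all features on the same scale.
X_scaled = StandardScaler().fit_transform(X)

# Training and selection happen in the same step: the L1 penalty sets the
# coefficients of uninformative features to exactly zero.
lasso = Lasso(alpha=0.5).fit(X_scaled, y)
kept = np.flatnonzero(lasso.coef_).tolist()

print("coefficients:", np.round(lasso.coef_, 2))
print("features with non-zero coefficients:", kept)
```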

In summary, each type of feature selection method has its own advantages and disadvantages. The choice of method depends on the specific problem and the available resources. It is important to carefully evaluate the performance of different feature selection methods before selecting the best one for a given task.

Evaluation Metrics for Feature Selection

When evaluating the effectiveness of feature selection methods, it is important to use appropriate evaluation metrics. The choice of metrics depends on the type of machine learning problem being addressed, such as classification or regression.

Classification Metrics

In classification problems, the following metrics are commonly used:

- Accuracy: measures the percentage of correctly classified instances.

- Precision: measures the proportion of true positive instances among all predicted positive instances.

- Recall: measures the proportion of true positive instances among all actual positive instances.

- F1 score: the harmonic mean of precision and recall, which balances both metrics.
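The four metrics above can be computed directly from counts of true positives, false positives, and false negatives. This is a minimal sketch in plain Python for binary labels; the function name and the example labels are made up for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)

    accuracy = correct / len(pairs)
    # Guard against division by zero when there are no predicted or
    # no actual positives.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels: 3 true positives, 1 false positive, 1 false negative.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

For this example all four values come out to 0.75. To compare feature selection methods, the same function can be applied to the predictions of the model trained on each selected subset.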

Regression Metrics

In regression problems, the following metrics are commonly used:

- Mean squared error (MSE): measures the average squared difference between the predicted and actual values.

- Root mean squared error (RMSE): the square root of MSE, which is more interpretable as it is in the same units as the target variable.

- R-squared (R2): measures the proportion of variance in the target variable explained by the model.

As with classification, these metrics can be computed for the model produced by each feature selection method and compared to determine which method yields the lowest prediction error.
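The regression metrics can be sketched in plain Python as follows; the function name and the example values are made up for illustration.

```python
import math

def regression_metrics(y_true, y_pred):
    """Compute MSE, RMSE, and R-squared for paired true and predicted values."""
    n = len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)

    mse = ss_res / n
    rmse = math.sqrt(mse)
    # R-squared compares the model's squared error with that of always
    # predicting the mean; it assumes y_true is not constant.
    r2 = 1.0 - ss_res / ss_tot
    return {"mse": mse, "rmse": rmse, "r2": r2}

# Hypothetical values for illustration.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.5, 7.0, 8.0]
metrics = regression_metrics(y_true, y_pred)
print(metrics)
```

Here the squared errors sum to 1.5, giving an MSE of 0.375 and an R-squared of 0.925.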

In summary, appropriate evaluation metrics are crucial for comparing and selecting feature selection methods. The choice of metrics depends on the type of machine learning problem being addressed, such as classification or regression.

Challenges in Feature Selection

Although feature selection can improve both model performance and interpretability, selecting the right features can be challenging due to several factors.

One of the main challenges in feature selection is dealing with high-dimensional data, where the number of features is large, sometimes larger than the number of observations. With so many candidate features, models can easily overfit, and some features will appear relevant purely by chance. High-dimensional data also raises computational challenges, as training models and searching over feature subsets can take a long time.

Another challenge in feature selection is dealing with correlated features. Correlated features carry redundant information, and importance scores tend to be split among them, which makes the model harder to interpret. In linear models, strong correlation can also make coefficient estimates unstable, which can hurt generalization.
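One simple way to handle this, sketched below with NumPy, is to walk through the features in order and keep a feature only if it is not strongly correlated with any feature already kept. The function name `drop_correlated` and the threshold of 0.9 are illustrative assumptions; which member of a correlated group to keep is a judgment call that this sketch settles by column order.

```python
import numpy as np

def drop_correlated(X, threshold=0.9):
    """Return indices of features whose |r| with every already-kept feature is below threshold."""
    corr = np.abs(np.corrcoef(X, rowvar=False))
    kept = []
    for j in range(X.shape[1]):
        if all(corr[j, i] < threshold for i in kept):
            kept.append(j)
    return kept

# Synthetic data (an assumption for illustration): column 3 is a noisy
# near-copy of column 0.
rng = np.random.default_rng(0)
base = rng.normal(size=(200, 3))
near_copy = base[:, 0] + 0.01 * rng.normal(size=200)
X = np.column_stack([base, near_copy])

kept = drop_correlated(X, threshold=0.9)
print("kept features:", kept)
```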

Additionally, selecting the right feature selection method can be challenging. There are several feature selection methods available, including filter methods, wrapper methods, and embedded methods. Each method has its own strengths and weaknesses, and selecting the right method depends on the specific problem at hand.

Furthermore, feature selection can be challenging when dealing with missing data. Many selection methods cannot handle missing values directly, and when a feature has many missing values its relevance is hard to assess accurately.

Overall, feature selection is a crucial step in data science that can enhance model performance and interpretability. However, selecting the right features can be challenging due to several factors, including high-dimensional data, correlated features, selecting the right feature selection method, and missing data.

Best Practices for Feature Selection

Feature selection is a crucial step in machine learning as it helps to improve model performance and interpretability. Here are some best practices for feature selection:

- Understand the Data: Before selecting features, it’s essential to understand the data. This includes identifying the type of data, identifying missing values, and understanding the relationships between variables.

- Use Domain Knowledge: Domain knowledge can help identify relevant features. Experts in the field can provide insights into which features are likely to be the most useful for the model.

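- Consider Feature Correlation: Highly correlated features add redundancy, can destabilize linear models, and can distort importance scores. It’s important to identify groups of highly correlated features and consider keeping only one representative from each group.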

- Use Appropriate Feature Selection Methods: There are various feature selection methods available, including filter, wrapper, and embedded methods. Each method has its strengths and weaknesses, and the selection of the appropriate method depends on the data and the problem at hand.

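- Evaluate Model Performance: After selecting features, it’s important to evaluate model performance on data that was not used for selection. This includes checking metrics such as accuracy, precision, recall, and F1 score for classification, or MSE, RMSE, and R-squared for regression.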

- Iterate and Refine: Feature selection is an iterative process. It’s essential to refine the feature selection process by trying different methods and evaluating model performance.

By following these best practices, we can improve the performance and interpretability of machine learning models.

Conclusion

In this article, we have discussed various feature selection methods that can be used to enhance model performance and interpretability. Feature selection is a crucial step in the data science pipeline that involves selecting the most relevant features from a dataset to improve model performance and interpretability.

We discussed the main types of feature selection methods: filter, wrapper, and embedded methods. We also covered some popular techniques, including univariate selection, Recursive Feature Elimination (RFE), Lasso regression, tree-based feature importance, and stepwise selection.

We also discussed the importance of interpretability in machine learning models and how feature selection can improve it by narrowing a model down to its most important inputs. Dedicated interpretability methods, such as Partial Dependence Plots (PDPs) and SHapley Additive exPlanations (SHAP), can complement feature selection.

It is important to note that there is no one-size-fits-all solution when it comes to feature selection. The choice of feature selection method depends on the specific problem and dataset at hand. It is important to evaluate the performance of different feature selection methods and algorithms before selecting the best one for a particular problem.

In conclusion, feature selection is an essential step in the data science pipeline that can help improve model performance and interpretability. By carefully selecting the most relevant features, we can build more accurate and interpretable machine learning models.

Frequently Asked Questions

What are some common feature selection methods used to enhance model performance and interpretability?

There are several commonly used feature selection methods to enhance model performance and interpretability. These include filter methods based on statistics such as correlation analysis, t-tests, and ANOVA; wrapper methods such as Recursive Feature Elimination (RFE); and embedded methods such as Lasso regression and Regularized Random Forests (RRF). Ridge regression is a related regularization technique, but it shrinks coefficients without setting them to zero, so it does not select features by itself.

How does dimensionality reduction play a role in feature selection?

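Dimensionality reduction is a technique used to reduce the number of features in a dataset while retaining as much information as possible. Feature selection is one form of it: it keeps a subset of the original features. Principal Component Analysis (PCA) and Linear Discriminant Analysis (LDA) take a different route, known as feature extraction: they build a smaller set of new features as combinations of the original ones. They can shrink high-dimensional datasets effectively, but because the new features mix the original variables, they are usually harder to interpret than a selected subset.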

What is the Lasso method and how can it be used for feature selection?

The Lasso method is a regression technique that can be used for feature selection. It is a linear regression model with an L1 penalty on the coefficients. The penalty shrinks all coefficients and drives those of less important features exactly to zero. Feature selection then amounts to keeping the features with non-zero coefficients and excluding the rest, and the strength of the penalty controls how many features remain.
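For reference, the Lasso objective can be written as below. The notation is an assumption introduced here, not taken from the article: $n$ observations, $p$ features, feature vectors $x_i$, targets $y_i$, intercept $\beta_0$, coefficients $\beta$, and penalty strength $\lambda \ge 0$.

```latex
(\hat{\beta}_0, \hat{\beta}) = \arg\min_{\beta_0,\,\beta} \;
  \frac{1}{2n} \sum_{i=1}^{n} \bigl( y_i - \beta_0 - x_i^{\top} \beta \bigr)^2
  + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert
```

Larger values of $\lambda$ set more coefficients to exactly zero. The $1/(2n)$ scaling is one common convention; other texts scale the squared-error term differently, which changes the numerical value of $\lambda$ but not the idea.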

Can stepwise regression be used for feature selection and how does it work?

Stepwise regression is a technique used to search for a good subset of features for a model. It works by iteratively adding or removing one feature at a time, based on a criterion such as a p-value, AIC, or BIC, until no further change improves the criterion. Because the search is greedy, the result is a good subset but not necessarily the best possible one.

What are some popular feature scoring algorithms used in feature selection?

Popular feature scoring algorithms used in feature selection include mutual information, chi-squared, and correlation-based feature selection. Mutual information and chi-squared score each feature on its relevance to the target variable, while correlation-based feature selection also accounts for redundancy with other features.

What are the best feature selection methods for classification tasks?

The best feature selection methods for classification tasks depend on the specific dataset and problem at hand. However, some commonly used methods include Recursive Feature Elimination (RFE), Lasso-style L1 regularization, and feature importance from random forests. It is important to evaluate the performance of different feature selection methods on the specific dataset to determine the best approach.