
Random Forest is an ensemble learning method used for classification, regression, and other tasks. It constructs many decision trees at training time and aggregates their outputs into a final prediction. The algorithm is known for its high accuracy and its robustness to noise and outliers. These qualities make it a popular choice in applied machine learning, data analysis, and pattern recognition.

In this article, we will explore the Random Forest algorithm in depth, with the goal of providing a comprehensive understanding of its mechanisms, properties, and limitations. We will start by introducing the basic concepts of decision trees and ensemble learning, and then move on to explain how Random Forest works and why it is so effective. We will also discuss some of the key parameters and hyperparameters that can be tuned to optimize the performance of the algorithm.

What is Random Forest?

Random Forest is an ensemble learning algorithm that combines multiple decision trees to generate a more accurate and stable prediction. It is a supervised learning algorithm that can be used for both regression and classification tasks.

Decision Trees

Random Forest is built on the foundation of decision trees. A decision tree is a machine learning model that represents decisions and their possible consequences as a tree. It is a simple yet powerful model that can be used for both classification and regression. A decision tree has a flowchart-like structure with three kinds of parts:

- Each internal node represents a test on a feature.
- Each branch represents an outcome of that test.
- Each leaf node holds a class label (classification) or a numeric value (regression).

Decision trees are popular because they are interpretable and can handle both categorical and numerical data.
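The flowchart structure described above maps directly onto a small recursive data type. The following is a minimal sketch, assuming numeric features and a `<=` test at each internal node. The `Node` class, the `predict` function, and the weather-style example tree are all hypothetical illustrations.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    """One node of a binary decision tree (hypothetical illustration).

    Internal nodes test `x[feature] <= threshold`; leaves carry a prediction.
    """
    feature: Optional[int] = None      # index of the feature tested (internal nodes only)
    threshold: Optional[float] = None  # split point used by the test
    left: Optional["Node"] = None      # branch taken when the test is true
    right: Optional["Node"] = None     # branch taken when the test is false
    prediction: Optional[str] = None   # class label stored at a leaf


def predict(node: Node, x: Sequence[float]) -> str:
    """Follow the tests from the root until a leaf is reached."""
    while node.prediction is None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.prediction


# Hypothetical hand-built tree: feature 0 = temperature, feature 1 = humidity.
tree = Node(
    feature=0, threshold=25.0,
    left=Node(prediction="stay in"),
    right=Node(
        feature=1, threshold=70.0,
        left=Node(prediction="go out"),
        right=Node(prediction="stay in"),
    ),
)

print(predict(tree, [30.0, 50.0]))  # go out
print(predict(tree, [18.0, 40.0]))  # stay in
```

A learning algorithm builds such a tree automatically by choosing each feature and threshold from the training data rather than by hand.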

Ensemble Learning

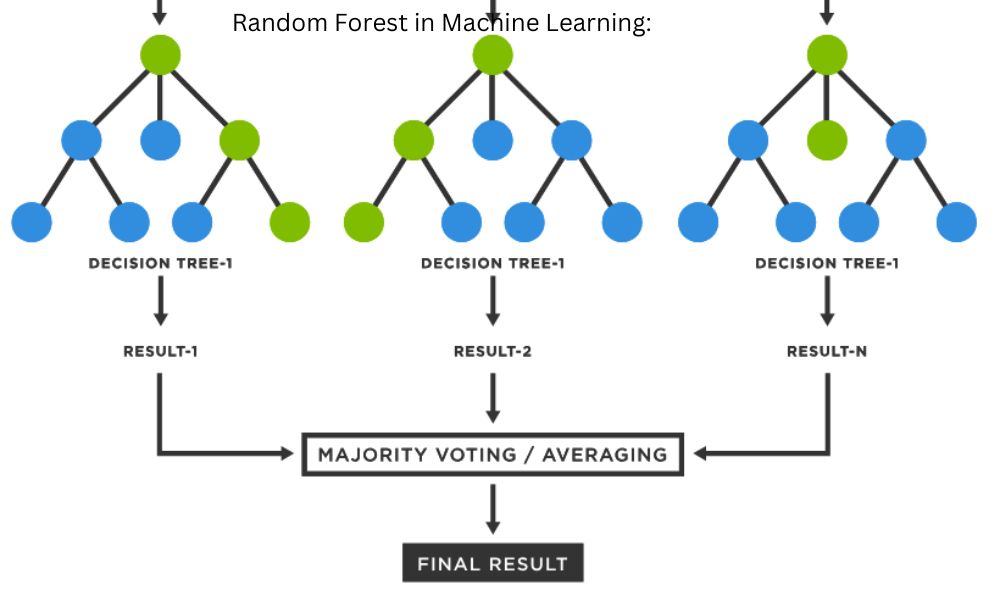

Random Forest uses ensemble learning to improve on the performance of a single decision tree. Ensemble learning combines the predictions of multiple models to obtain predictions that are more accurate and stable than those of any one model. In Random Forest, each tree is trained on a random sample of the training data. In addition, each split within a tree considers only a random subset of the features. The predictions of these trees are then combined to produce the final output.

Random Forest is known for its high accuracy, stability, and scalability. It is popular in the machine learning community because it handles large, high-dimensional datasets well. However, Random Forest is not a one-size-fits-all solution, and it is not always the best choice for a given problem and dataset.

Concretely, the algorithm builds and combines its trees in four steps:

- Random Sampling: For each tree, Random Forest draws a bootstrap sample from the training data. Examples are drawn at random with replacement, usually as many as there are in the original set. Some examples are drawn several times and others not at all, so each tree is trained on a slightly different set of examples.

- Feature Randomness: Random Forest also randomizes feature selection, in addition to sampling the data. At each node of a decision tree, only a random subset of the features is considered as split candidates, rather than all of them. This decorrelates the trees, which makes averaging them more effective at reducing variance and overfitting.

- Tree Construction: Each tree is grown from its bootstrap sample and its per-node feature subsets using the standard decision tree algorithm. Splits are chosen by a criterion such as information gain or Gini impurity. In the classic formulation, trees are grown deep and are not pruned.

- Aggregation: Once all the trees are built, each tree makes a prediction independently. For classification, the class that receives the most votes is the final prediction. For regression, the trees' predictions are averaged.
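The four steps above can be sketched in plain Python with no libraries. This is a minimal illustration, not how production libraries implement it. It assumes numeric features, uses the Gini criterion with a `<=` threshold test, and stores each tree as nested tuples. It also caps depth with a `max_depth` parameter to keep the example small. All function names (`fit_forest`, `predict_forest`, and so on) are hypothetical.

```python
import random
from collections import Counter

# Hypothetical helper names: a teaching sketch, not a library API.


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y, features):
    """Return the (feature, threshold) with the lowest weighted Gini, or None."""
    best, best_score = None, gini(y)
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(X, y, max_features, rng, depth=0, max_depth=5):
    """Tree construction: every split looks at only `max_features` random features."""
    if depth >= max_depth or len(set(y)) == 1:
        return Counter(y).most_common(1)[0][0]  # leaf: majority label
    features = rng.sample(range(len(X[0])), max_features)  # feature randomness
    split = best_split(X, y, features)
    if split is None:  # no sampled feature improves impurity: make a leaf
        return Counter(y).most_common(1)[0][0]
    f, t = split
    left = [i for i, row in enumerate(X) if row[f] <= t]
    right = [i for i, row in enumerate(X) if row[f] > t]

    def grow(idx):
        return build_tree([X[i] for i in idx], [y[i] for i in idx],
                          max_features, rng, depth + 1, max_depth)

    return (f, t, grow(left), grow(right))


def predict_tree(node, x):
    """Internal nodes are (feature, threshold, left, right); leaves are labels."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def fit_forest(X, y, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                 max_features, rng))
    return forest


def predict_forest(forest, x):
    """Aggregation: plurality vote over the trees' predicted labels."""
    votes = Counter(predict_tree(tree, x) for tree in forest)
    return votes.most_common(1)[0][0]
```

Usage: `fit_forest(X, y, n_trees=25, max_features=2)` takes a list of numeric feature rows `X` and labels `y` and returns a list of trees. `predict_forest(forest, row)` then returns the plurality-vote label for one row. Here `max_features` plays the role of the random feature-subset size.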

How does Random Forest work?

Random Forest is an ensemble learning algorithm that combines multiple decision trees to improve the accuracy and stability of the model. The algorithm is based on the idea of bagging, where multiple trees are built on different subsets of the training data and their predictions are combined to make the final prediction. In this section, we will explore the three main components of Random Forest: Bagging, Random Subspace Method, and Voting.

Bagging

Bagging (Bootstrap Aggregating) is the technique Random Forest uses to reduce the variance of the model. Each tree is trained on a bootstrap sample, drawn at random and with replacement from the training data. As a result, a given data point may appear several times in one sample and not at all in another. Because each tree sees a different sample, the trees make partly independent errors. Averaging their predictions therefore reduces the variance of the combined model, which usually improves accuracy on unseen data.
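A short sketch shows why some points are left out of each sample. When n indices are drawn with replacement from n rows, a given row is never drawn with probability (1 - 1/n)^n. For large n this approaches 1/e, roughly 36.8%. The left-out rows are usually called "out-of-bag" rows. The helper names below are hypothetical.

```python
import random


def bootstrap_indices(n, rng):
    """Draw n row indices uniformly with replacement (one bootstrap sample)."""
    return [rng.randrange(n) for _ in range(n)]


def expected_oob_fraction(n):
    """Probability that a given row is never drawn in n draws: (1 - 1/n)^n."""
    return (1 - 1 / n) ** n


rng = random.Random(42)
idx = bootstrap_indices(10, rng)
oob = sorted(set(range(10)) - set(idx))  # rows this tree never saw ("out-of-bag")
print(idx, oob)
print(round(expected_oob_fraction(1000), 4))  # 0.3677, close to 1/e
```

Repeated indices in `idx` are the duplicated data points, and `oob` lists the points that this particular tree never saw.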

Random Subspace Method

The random subspace method is another technique Random Forest uses, this time to reduce the correlation between the trees. In its original form, each tree is built on a random subset of the features rather than on all of them. Random Forest applies the same idea at a finer grain, drawing a new random subset of features at every split, as described in the Feature Randomness step above. Less correlated trees make less similar errors, so averaging them cancels more of the error and improves accuracy.
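A standard variance identity makes the benefit of decorrelation concrete. As a simplifying assumption, suppose every tree's prediction $T_b(x)$ has the same variance $\sigma^2$ and every pair of trees has the same correlation $\rho$. The symbols $T_b$, $\sigma^2$, $\rho$, and the number of trees $B$ are introduced here for illustration. The variance of the forest's average is then:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
  = \frac{1}{B^2}\Bigl(B\,\sigma^2 + B(B-1)\,\rho\,\sigma^2\Bigr)
  = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
```

As $B$ grows, the second term vanishes but the first does not. Adding trees alone therefore cannot push the variance below $\rho\sigma^2$. Lowering $\rho$ through feature randomness is what moves that floor down.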

Voting

Voting is the final step, in which the predictions of all the trees are combined into the final prediction. For classification, there are two common schemes:

- Hard voting: each tree casts a vote for one class, and the class with the most votes wins.
- Soft voting: the predicted class probabilities of all the trees are averaged, and the class with the highest average probability wins.

Soft voting is often preferred because it takes into account how confident each tree is. For example, scikit-learn's `RandomForestClassifier` averages probabilities, whereas Breiman's original formulation used a majority vote. For regression, the trees' numeric predictions are simply averaged.
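Hard and soft voting can disagree, as a small sketch shows. It assumes each tree outputs a probability vector over the classes, commonly the class proportions in the leaf the input lands in. The function names and the three trees' probabilities are hypothetical.

```python
from collections import Counter


def hard_vote(probas):
    """Each tree votes for its most probable class; the plurality wins."""
    votes = [max(range(len(p)), key=p.__getitem__) for p in probas]
    return Counter(votes).most_common(1)[0][0]


def soft_vote(probas):
    """Average class probabilities across trees, then take the argmax."""
    n_classes = len(probas[0])
    avg = [sum(p[c] for p in probas) / len(probas) for c in range(n_classes)]
    return max(range(n_classes), key=avg.__getitem__)


# Hypothetical per-tree probabilities for classes [0, 1] from three trees.
probas = [[0.4, 0.6], [0.4, 0.6], [0.9, 0.1]]
print(hard_vote(probas))  # 1: two of three trees prefer class 1
print(soft_vote(probas))  # 0: the average is about [0.567, 0.433]
```

The two lukewarm votes for class 1 are outweighed under soft voting by one very confident vote for class 0.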

In conclusion, Random Forest is an ensemble learning algorithm that combines multiple decision trees to improve the accuracy and stability of the model. The algorithm uses bagging, random subspace method, and voting to reduce the variance of the model, reduce the correlation between the trees, and combine the predictions of all the trees to make the final prediction.

Advantages of Random Forest

Random Forest is a popular ensemble learning approach that combines multiple decision trees to make predictions. Here are some of the advantages of using Random Forest:

Reduced Overfitting

One of the main advantages of Random Forest is that it reduces overfitting. Overfitting occurs when a model is too complex and fits the training data too closely, resulting in poor generalization to new data. Random Forest reduces overfitting by averaging the predictions of multiple decision trees, which reduces the variance of the model.

High Accuracy

Random Forest is known for its high accuracy in both classification and regression problems. Each deep, unpruned tree has low bias but high variance. Averaging many such trees leaves the bias roughly unchanged while reducing the variance. Adding more trees improves accuracy only up to a point, after which the gains level off because the trees remain partly correlated.

Feature Importance

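Random Forest provides measures of feature importance, which are useful for feature selection. Two measures are common:

- Mean decrease in impurity: for each feature, add up how much it reduces impurity (e.g., Gini) across all the splits that use it, averaged over the trees.
- Permutation importance: measure how much accuracy drops when a feature's values are randomly shuffled, which breaks the feature's relationship to the target.

Features with high importance are considered more relevant to the target variable.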
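Permutation importance can be sketched in a few lines. The sketch assumes `model` is any callable that maps one feature row to a predicted label. A stand-in function replaces a fitted forest here, and all names are hypothetical. Libraries provide tested versions, for example scikit-learn's `sklearn.inspection.permutation_importance`.

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == yi for row, yi in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Mean drop in accuracy when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for f in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[f] for row in X]
            rng.shuffle(column)  # break the link between feature f and the target
            X_perm = [row[:f] + [v] + row[f + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical stand-in for a fitted model: it only looks at feature 0.
def model(row):
    return int(row[0] > 0.5)


X = [[i / 19, (i * 7 % 19) / 19] for i in range(20)]
y = [model(row) for row in X]
print(permutation_importance(model, X, y))  # feature 0 positive; feature 1 scores 0.0
```

Because the stand-in model ignores feature 1, shuffling that column never changes a prediction, so its importance is exactly zero.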

In summary, Random Forest is a powerful ensemble learning approach that provides reduced overfitting, high accuracy, and feature importance. These advantages make it a popular choice for machine learning tasks.

Disadvantages of Random Forest

Slow Training Time

One of the main disadvantages of Random Forest is that it can be computationally expensive, especially with large datasets. It builds many decision trees, and each one takes time to construct. Prediction is also slower than for a single tree, since every tree must be evaluated. On the other hand, the trees are independent of one another, so they can be trained in parallel. Considering only a subset of features at each split also makes each individual split cheaper to find.

Poor Interpretability

Another disadvantage of Random Forest is its poor interpretability. Because it combines many decision trees, it can be difficult to understand how the model arrived at a particular prediction. Random Forest does provide feature importance measures, but these can be misleading. Impurity-based importance, for instance, tends to favor features with many distinct values. Furthermore, each tree contributes equally to the final prediction, yet the combined decision logic of hundreds of trees cannot be read off the way a single tree's can.

To address the issue of poor interpretability, researchers have proposed various methods, such as partial dependence plots and permutation feature importance. However, these methods can be time-consuming and may not always provide a clear understanding of the model’s behavior.
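A partial dependence curve is one of the methods mentioned above, and a short sketch shows where its cost comes from. For each grid value `v`, the chosen feature is set to `v` in every row and the model's predictions are averaged. This takes `len(grid) * len(X)` model evaluations. The sketch assumes `model` maps one feature row to a number, and a simple linear function stands in for a fitted regression forest. All names are hypothetical.

```python
def partial_dependence(model, X, feature, grid):
    """For each grid value v, set `feature` to v in every row and average the predictions."""
    curve = []
    for v in grid:
        preds = [model(row[:feature] + [v] + row[feature + 1:]) for row in X]
        curve.append(sum(preds) / len(preds))
    return curve


# Hypothetical stand-in for a fitted regression forest.
def model(row):
    return 2 * row[0] + row[1]


X = [[0.0, 1.0], [1.0, 3.0], [2.0, 5.0]]
print(partial_dependence(model, X, feature=0, grid=[0.0, 1.0, 2.0]))  # [3.0, 5.0, 7.0]
```

The rising curve reflects the coefficient 2 on feature 0. For a real forest, each of those evaluations runs every tree, which is why these plots can be slow to compute.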

In summary, while Random Forest is a powerful machine learning algorithm, it has some notable disadvantages. These include slow training time and poor interpretability. Researchers continue to explore ways to address these issues and improve the performance and interpretability of Random Forest.

Frequently Asked Questions

What is the random forest algorithm and how does it work?

Random forest is a popular ensemble learning algorithm that combines the outputs of multiple decision trees to make a final prediction. Each tree is trained on a bootstrap sample of the training data, and each split considers only a random subset of the input features. For classification, the final prediction is the majority vote of the trees. For regression, it is the average of their predictions.

How does the random forest algorithm differ from a decision tree?

Decision trees are prone to overfitting, whereas random forests reduce this risk by training many randomized trees and aggregating their outputs. Random forests are also generally less sensitive to noisy features than a single decision tree. How missing data is handled depends on the implementation (see the question on missing data below).

What are the advantages of using a random forest model?

Random forests have several advantages. They scale to large datasets, tolerate noisy data, and, depending on the implementation, can cope with missing values. Tree splits depend only on the ordering of feature values, so random forests are robust to outliers in the input features and do not require feature scaling or normalization. They also handle both classification and regression problems.

How do you choose the optimal number of trees in a random forest?

The optimal number of trees depends on the size of the dataset and the complexity of the problem. Increasing the number of trees generally improves accuracy. Beyond a certain point, however, the improvement plateaus while training and prediction costs keep growing. A common approach is to add trees until the validation error stops improving. That error can be estimated by cross-validation or by the out-of-bag error, which each tree computes on the samples it did not see during training.
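A simplified variance model illustrates the plateau. It assumes every tree has prediction variance `sigma2` and every pair of trees has correlation `rho`. The variance of their average is then `rho*sigma2 + (1 - rho)*sigma2/n_trees`. The values `sigma2 = 1.0` and `rho = 0.3` below are illustrative only, not measured from any dataset, and `ensemble_variance` is a hypothetical helper.

```python
def ensemble_variance(sigma2, rho, n_trees):
    """Variance of the average of n_trees predictors, each with variance sigma2
    and pairwise correlation rho: rho*sigma2 + (1 - rho)*sigma2/n_trees."""
    return rho * sigma2 + (1 - rho) * sigma2 / n_trees


# Illustrative values only: sigma2 = 1.0, rho = 0.3.
for b in (1, 10, 100, 1000):
    print(b, round(ensemble_variance(1.0, 0.3, b), 4))
# 1 1.0
# 10 0.37
# 100 0.307
# 1000 0.3007
```

Going from 1 to 100 trees removes most of the removable variance, while going from 100 to 1000 barely helps. This is why practitioners usually stop once the validation error flattens.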

What is the difference between random forest regression and classification?

Random forest regression is used for predicting continuous variables, such as stock prices or temperatures. Random forest classification is used for predicting categorical variables, such as whether a customer will churn or not.

How does the random forest algorithm handle missing data?

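Support for missing values depends on the implementation. A common approach is to impute missing values before training. A numerical feature can be filled with its mean or median, and a categorical feature with its most common value. Alternatively, a separate regression model can predict the missing entries. Breiman's original formulation also describes an iterative scheme that starts from such a rough fill and refines it using proximities, that is, how often two samples land in the same leaf across the trees.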
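The simple median and mode fills mentioned above can be sketched with the standard library. This is a preprocessing step applied before training, not part of tree construction itself. The sketch assumes missing entries are represented as `None`, and the helper names are hypothetical.

```python
from collections import Counter
from statistics import median


def impute_median(values):
    """Replace None in a numeric column with the median of the observed values."""
    fill = median(v for v in values if v is not None)
    return [fill if v is None else v for v in values]


def impute_mode(values):
    """Replace None in a categorical column with its most frequent observed value."""
    fill = Counter(v for v in values if v is not None).most_common(1)[0][0]
    return [fill if v is None else v for v in values]


print(impute_median([1.0, None, 3.0, 10.0]))       # [1.0, 3.0, 3.0, 10.0]
print(impute_mode(["red", None, "blue", "red"]))   # ['red', 'red', 'blue', 'red']
```

The median is used here rather than the mean because it is less affected by extreme values such as the `10.0` in the example.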