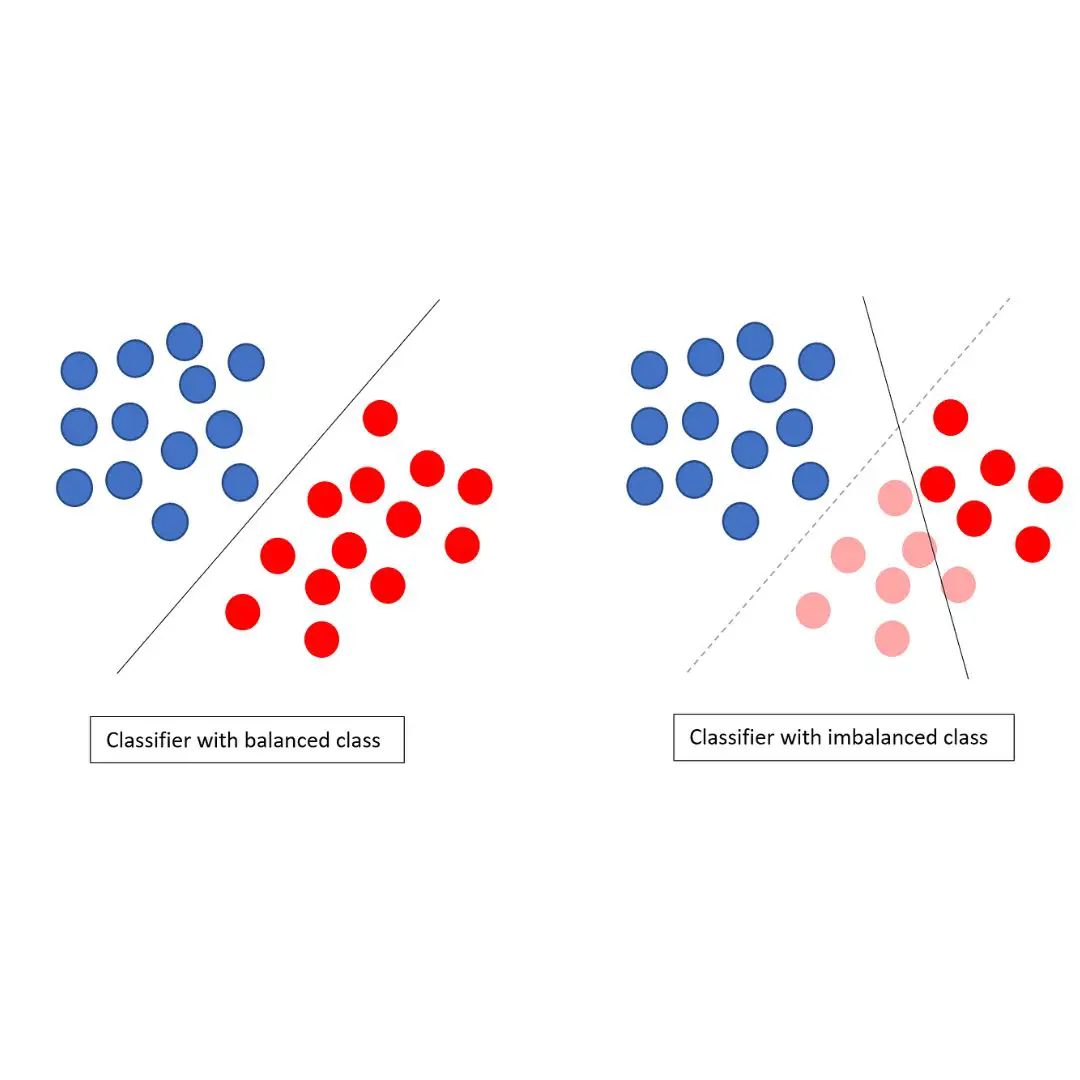

Class imbalance is a common challenge in machine learning: one class has far fewer examples than the others. It arises in many real-world applications, such as fraud detection, medical diagnosis, and text classification. In such scenarios, a classifier tends to be biased towards the majority class and performs poorly on the minority class.

Ensemble techniques have been proposed as a promising solution to the class imbalance problem. Ensemble learning combines multiple base classifiers to improve overall performance. Ensemble methods for imbalanced data can be roughly categorized into bagging-style, boosting-based, and hybrid methods. Bagging-style methods reduce variance by training base classifiers on multiple bootstrap samples of the original dataset and aggregating their predictions. Boosting-based methods train base classifiers sequentially, reweighting the training examples at each iteration so that later classifiers focus on previously misclassified ones. Hybrid ensemble methods combine bagging and boosting to achieve better performance.

Class Imbalance Problem

The class imbalance problem is a common challenge in machine learning, particularly in medical data. It refers to the situation where the number of instances in one class is significantly lower than in the other class(es). This leads to biased models that perform poorly in predicting the minority class, which is often the class of interest.

The class imbalance problem is prevalent in many real-world scenarios. For example, in fraud detection, the number of fraudulent transactions is much lower than legitimate transactions. In medical diagnosis, the number of patients with a rare disease is much lower than those without the disease.

There are two main strategies for handling the class imbalance problem: data-level and algorithm-level techniques. Data-level techniques involve modifying the dataset to balance the classes, while algorithm-level techniques modify the learning algorithm to handle the imbalance.

Data-level techniques include undersampling, oversampling, and hybrid approaches. Undersampling removes instances from the majority class, typically at random, to balance the dataset. Oversampling adds minority-class instances, either by replicating existing ones or by generating synthetic ones (as SMOTE does). Hybrid approaches combine undersampling and oversampling.
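
The two basic resampling strategies can be sketched in a few lines of plain Python. This is a minimal illustration, not a library implementation: the helper names `random_undersample` and `random_oversample` and the toy data are assumptions made for this example.

```python
import random
from collections import Counter

def _group_by_class(X, y):
    """Group feature rows by their class label."""
    groups = {}
    for row, label in zip(X, y):
        groups.setdefault(label, []).append(row)
    return groups

def random_undersample(X, y, seed=0):
    """Randomly drop rows so every class keeps as many rows as the smallest class."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    n_min = min(len(rows) for rows in groups.values())
    X_out, y_out = [], []
    for label, rows in groups.items():
        for row in rng.sample(rows, n_min):  # sampling without replacement
            X_out.append(row)
            y_out.append(label)
    return X_out, y_out

def random_oversample(X, y, seed=0):
    """Replicate rows at random until every class matches the largest class."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    n_max = max(len(rows) for rows in groups.values())
    X_out, y_out = [], []
    for label, rows in groups.items():
        extra = [rng.choice(rows) for _ in range(n_max - len(rows))]
        for row in rows + extra:
            X_out.append(row)
            y_out.append(label)
    return X_out, y_out

# Toy data: 95 majority rows (label 0) and 5 minority rows (label 1).
X = [[i] for i in range(100)]
y = [0] * 95 + [1] * 5

X_u, y_u = random_undersample(X, y)
X_o, y_o = random_oversample(X, y)
print(Counter(y_u))  # 5 rows per class
print(Counter(y_o))  # 95 rows per class
```

Undersampling discards 90 of the 95 majority rows here, which shows why it can lose information on small datasets, while oversampling keeps every row but repeats minority rows many times.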

Algorithm-level techniques include cost-sensitive learning, threshold-moving, and ensemble learning. Cost-sensitive learning involves assigning different misclassification costs to different classes. Threshold-moving involves adjusting the decision threshold to favor the minority class. Ensemble learning involves combining multiple classifiers to improve the overall performance.
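
Threshold-moving and cost-sensitive learning are closely related, and a small sketch may help. The example below assumes a model that already outputs a probability for the minority (positive) class; the function names, the probabilities, and the cost values are all made up for illustration. With a cost `cost_fp` for a false positive and `cost_fn` for a false negative (and no cost for correct predictions), predicting positive has the lower expected cost whenever the probability exceeds `cost_fp / (cost_fp + cost_fn)`.

```python
def predict_with_threshold(probs, threshold=0.5):
    """Label an instance positive (1) when its positive-class probability reaches the threshold."""
    return [1 if p >= threshold else 0 for p in probs]

def cost_based_threshold(cost_fp, cost_fn):
    """Threshold that minimises expected cost when correct predictions cost nothing."""
    return cost_fp / (cost_fp + cost_fn)

# Hypothetical positive-class probabilities from some classifier.
probs = [0.05, 0.25, 0.35, 0.60, 0.90]

default_labels = predict_with_threshold(probs)       # threshold 0.5
threshold = cost_based_threshold(cost_fp=1.0, cost_fn=4.0)
moved_labels = predict_with_threshold(probs, threshold)

print(default_labels)
print(threshold, moved_labels)
```

Making a missed minority instance four times as costly as a false alarm lowers the threshold to 0.2, so two more instances are flagged as positive.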

In summary, the class imbalance problem is a significant challenge in machine learning, particularly in medical data. There are various techniques to address this problem, including data-level and algorithm-level approaches. Ensemble learning, which combines multiple classifiers, is a promising technique for handling the class imbalance problem.

Ensemble Techniques for Handling Class Imbalance

Ensemble techniques are a popular approach for handling class imbalance. They combine multiple models to improve classification performance, and in class imbalance learning each base model is typically trained on a resampled or reweighted view of the data so that the minority class is not overwhelmed by the majority class.

Bagging

Bagging is a popular ensemble technique that trains multiple models on bootstrap samples of the training data and aggregates their predictions. In the context of class imbalance learning, each bootstrap sample is typically rebalanced, for example by undersampling the majority class or oversampling the minority class, so that every model sees a different, roughly balanced subset of the data. This can improve performance on the minority class.
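
One way to adapt bagging to imbalanced data is to balance every bootstrap sample before training a base model on it, in the spirit of UnderBagging. The sketch below is a simplified illustration under several assumptions: the data is one-dimensional, the base learner is a toy nearest-mean classifier (`NearestMean`, a name invented here), and predictions are combined by majority vote.

```python
import random

def balanced_bootstrap(X, y, rng):
    """Draw a bootstrap sample with the same number of rows from each class."""
    idx_by_class = {}
    for i, label in enumerate(y):
        idx_by_class.setdefault(label, []).append(i)
    n_min = min(len(idx) for idx in idx_by_class.values())
    chosen = []
    for idx in idx_by_class.values():
        chosen.extend(rng.choice(idx) for _ in range(n_min))  # with replacement
    return [X[i] for i in chosen], [y[i] for i in chosen]

class NearestMean:
    """Toy 1-D base learner: predicts the class whose training mean is closest."""
    def fit(self, X, y):
        sums, counts = {}, {}
        for x, label in zip(X, y):
            sums[label] = sums.get(label, 0.0) + x
            counts[label] = counts.get(label, 0) + 1
        self.means_ = {c: sums[c] / counts[c] for c in sums}
        return self

    def predict_one(self, x):
        return min(self.means_, key=lambda c: abs(x - self.means_[c]))

def fit_balanced_bagging(X, y, n_estimators=11, seed=0):
    """Train one base learner per balanced bootstrap sample."""
    rng = random.Random(seed)
    return [NearestMean().fit(*balanced_bootstrap(X, y, rng))
            for _ in range(n_estimators)]

def predict_vote(models, x):
    """Combine the base learners by majority vote."""
    votes = [m.predict_one(x) for m in models]
    return max(set(votes), key=votes.count)

# Toy data: 90 majority values in [0, 8.9] and 10 minority values in [12, 21].
X = [i * 0.1 for i in range(90)] + [12.0 + i for i in range(10)]
y = [0] * 90 + [1] * 10

models = fit_balanced_bagging(X, y)
print(predict_vote(models, 1.0), predict_vote(models, 20.0))
```

Each base learner sees 10 rows per class, so no single model is dominated by the majority class, and different models see different majority rows.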

One popular variant of bagging for class imbalance learning is SMOTEBagging, which combines the SMOTE oversampling technique with bagging to create multiple models that are trained on balanced subsets of the data.

Boosting

Boosting is another popular ensemble technique that involves combining multiple weak classifiers to create a strong classifier. In the context of class imbalance learning, boosting can be used to give more weight to misclassified instances of the minority class, which can help to improve the overall performance of the classifier.
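
The reweighting step is the core of boosting, so a single round is worth sketching. The example below follows the standard AdaBoost update for binary classification; the function name `adaboost_reweight` and the toy setup (ten instances, of which the last two are misclassified minority instances) are assumptions for illustration.

```python
import math

def adaboost_reweight(weights, is_wrong):
    """One AdaBoost-style round: return (alpha, normalised new weights)."""
    # Weighted error of the current weak classifier.
    err = sum(w for w, wrong in zip(weights, is_wrong) if wrong)
    # Classifier weight; this sketch assumes 0 < err < 0.5.
    alpha = 0.5 * math.log((1.0 - err) / err)
    # Increase the weight of mistakes, decrease the weight of correct predictions.
    scaled = [w * math.exp(alpha if wrong else -alpha)
              for w, wrong in zip(weights, is_wrong)]
    total = sum(scaled)
    return alpha, [w / total for w in scaled]

n = 10
weights = [1.0 / n] * n                  # start with uniform weights
is_wrong = [False] * 8 + [True] * 2      # the two minority instances were misclassified

alpha, new_weights = adaboost_reweight(weights, is_wrong)
print(round(alpha, 4))
print([round(w, 4) for w in new_weights])
```

After the update, the two misclassified instances together carry half of the total weight, so the next weak classifier is pushed to get them right.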

One popular variant of boosting for class imbalance learning is RUSBoost, which applies random undersampling to the majority class at each boosting iteration, so that every weak classifier is trained on a more balanced subset of the data.

Stacking

Stacking is a more advanced ensemble technique that combines multiple models using a meta-model. The base models, often of different types, each make predictions, and the meta-model is trained on those predictions (usually out-of-fold predictions, to avoid leakage) to produce the final output. In the context of class imbalance learning, the base models can be trained on differently resampled versions of the data before the meta-model combines them.

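EasyEnsemble is often mentioned alongside stacking, but it is better described as a hybrid of bagging and boosting: it draws several balanced subsets by undersampling the majority class, trains a boosted classifier (AdaBoost) on each subset, and combines their outputs directly rather than through a learned meta-model.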

Overall, ensemble techniques are a powerful approach for handling class imbalance in machine learning tasks. By combining multiple models, ensemble techniques can help to improve the overall performance of a classifier, particularly in the context of class imbalance learning.

Combining Strengths of Ensemble Techniques

Ensemble techniques are a powerful tool for handling class imbalance in machine learning. By combining multiple models, ensemble techniques can overcome the limitations of individual models and improve the overall performance of the system. In this section, we will explore the different ways in which ensemble techniques can be combined to create stronger models.

Bagging and Boosting

Bagging and boosting are two of the most popular ensemble techniques. Bagging involves training multiple models on different subsets of the data and combining their predictions. Boosting, on the other hand, involves training multiple models sequentially, with each subsequent model focusing on the errors made by the previous model.

Both bagging and boosting can be effective for handling class imbalance. Bagging can reduce the influence of the majority class when each bootstrap sample is rebalanced, while boosting increases the weight of misclassified instances, which are often minority-class instances. Hybrid methods combine the two, for example by training a boosted classifier on each of several balanced subsets, to create a stronger model that is better able to handle class imbalance.

Hybrid Sampling and Ensemble Learning

Hybrid sampling and ensemble learning are two other techniques that can be combined to create stronger models. Hybrid sampling involves using a combination of undersampling and oversampling techniques to balance the classes in the data. Ensemble learning involves training multiple models on the balanced data and combining their predictions.

By combining these techniques, we can create a model that is not only balanced but also takes advantage of the strengths of ensemble learning. This can lead to improved performance and better handling of class imbalance.

Dynamic Selection and Data Preprocessing

Dynamic selection and data preprocessing are two more techniques that can be combined to create stronger models. Dynamic selection chooses, for each instance to be classified, the base models that are most competent in the region around that instance, while data preprocessing transforms the training data, for example by resampling, before the models are trained.

By combining these techniques, we can create a model that is not only better able to handle class imbalance but also takes advantage of the strengths of dynamic selection and data preprocessing. This can lead to improved performance and better handling of complex datasets.

In conclusion, ensemble techniques are a powerful tool for handling class imbalance in machine learning. By combining different techniques, we can create stronger models that are better able to handle complex datasets and improve overall performance.

Evaluation Metrics

When evaluating the performance of ensemble techniques for handling class imbalance, it is important to use appropriate evaluation metrics. In this section, we will discuss some commonly used evaluation metrics for classification tasks.

Accuracy

Accuracy is the most commonly used metric for evaluating classification models. It measures the proportion of correct predictions made by the model. However, accuracy can be misleading in the case of imbalanced datasets, where the majority class may dominate the metric. Therefore, it is important to use additional evaluation metrics.
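
A small worked example shows how accuracy can mislead. The data below is invented: 990 negative and 10 positive instances, scored against a trivial "model" that always predicts the majority class.

```python
# Toy labels: 990 majority (0) and 10 minority (1) instances.
y_true = [0] * 990 + [1] * 10
# A trivial classifier that always predicts the majority class.
y_pred = [0] * 1000

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Fraction of actual minority instances that were detected.
minority_hits = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
minority_recall = minority_hits / y_true.count(1)

print(accuracy, minority_recall)
```

The classifier scores 99% accuracy while detecting none of the minority instances, which is why accuracy alone is not enough on imbalanced data.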

Precision

Precision measures the proportion of true positive predictions among all positive predictions made by the model. It is a useful metric when the cost of false positives is high. For example, in medical diagnosis, a false positive result can lead to unnecessary treatments or surgeries.

Recall

Recall measures the proportion of true positive predictions among all actual positive instances in the dataset. It is a useful metric when the cost of false negatives is high. For example, in fraud detection, a false negative result can lead to significant financial losses.

F1-Score

F1-Score is the harmonic mean of precision and recall. It provides a balance between precision and recall, making it a useful metric for imbalanced datasets. F1-Score is a better metric than accuracy in the case of imbalanced datasets because it considers both false positives and false negatives.
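
Precision, recall, and F1-Score can all be computed from three counts: true positives (TP), false positives (FP), and false negatives (FN). The sketch below uses invented labels and a helper named `precision_recall_f1` for illustration; returning 0.0 when a denominator is zero is a convention chosen here, not the only possible one.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall and F1 for the given positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

# Toy data: 10 positives and 90 negatives.
y_true = [1] * 10 + [0] * 90
# The model finds 6 of the 10 positives and raises 2 false alarms.
y_pred = [1] * 6 + [0] * 4 + [1] * 2 + [0] * 88

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(precision, recall, round(f1, 4), accuracy)
```

Here accuracy is 0.94, yet recall shows that 4 of the 10 positive instances were missed; F1 (about 0.67) summarizes that weaker minority-class performance in a single number.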

In conclusion, when evaluating the performance of ensemble techniques for handling class imbalance, it is important to use appropriate evaluation metrics such as precision, recall, and F1-Score. These metrics provide a better understanding of the model’s performance on imbalanced datasets than accuracy alone.

Conclusion

In conclusion, class imbalance is a common problem in many real-world applications. Ensemble techniques have been shown to be effective in handling class imbalance problems by combining the strengths of multiple classifiers. This article has provided an overview of several popular ensemble techniques, including bagging, boosting, and stacking, and described how each can be adapted to imbalanced data.

Bagging is a simple and effective technique that can reduce the variance of the base classifiers. Boosting can improve the performance of weak classifiers by focusing on misclassified instances. Stacking can combine the strengths of multiple base classifiers by training a meta-classifier on their outputs.

In addition, ensemble methods can be combined with the other techniques covered in this article, such as cost-sensitive learning, threshold-moving, hybrid sampling, and dynamic selection. These combinations can further improve the performance of ensemble methods in handling class imbalance problems.

Overall, ensemble techniques are a valuable tool for handling class imbalance problems. However, it is important to carefully select the base classifiers and the ensemble method, and to evaluate the ensemble with metrics suited to imbalanced data. By combining the strengths of multiple classifiers, ensemble techniques can help improve the minority-class performance and robustness of machine learning models in real-world applications.

Frequently Asked Questions

What are some ensemble methods for handling class imbalance?

Ensemble methods are a set of machine learning algorithms that combine multiple models to improve predictive performance. Some popular ensemble methods for handling class imbalance include Bagging-style methods, Boosting-based methods, and Hybrid ensemble methods. Examples of these methods include UnderBagging, OverBagging, SMOTEBagging, SMOTEBoost, RUSBoost, DataBoost-IM, EasyEnsemble, and BalanceCascade.

How does ensemble learning help address the class imbalance problem?

Ensemble learning helps address the class imbalance problem by combining multiple models to improve predictive performance. Ensemble methods can help improve the minority class’s representation by oversampling the minority class or undersampling the majority class. Ensemble methods can also help improve the model’s generalization by reducing overfitting.

What are the benefits of using ensemble techniques for imbalanced datasets?

The benefits of using ensemble techniques for imbalanced datasets include improved predictive performance, better generalization, and reduced overfitting. Ensemble methods can help improve the minority class’s representation, which is often underrepresented in imbalanced datasets.

Which classification model is best suited for imbalanced data?

There is no one-size-fits-all answer to this question as it depends on the specific dataset and problem at hand. However, some classification models that often perform well on imbalanced datasets include Random Forest, Gradient Boosting, and Support Vector Machines, usually in combination with class weighting or resampling.

What are some methods to handle imbalanced datasets in machine learning?

Some methods to handle imbalanced datasets in machine learning include oversampling the minority class, undersampling the majority class, using cost-sensitive learning, and using ensemble methods. Oversampling methods include Synthetic Minority Over-sampling Technique (SMOTE), Adaptive Synthetic Sampling (ADASYN), and Borderline-SMOTE. Undersampling methods include Random Under-Sampling (RUS), Tomek Links, and Cluster Centroids.
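
The core idea behind SMOTE is to create a synthetic minority instance by interpolating between a minority instance and one of its nearest minority-class neighbours. The sketch below is a simplified illustration of that idea, not the reference algorithm or a library API: the function name `smote_like`, the choice of `k`, and the toy points are assumptions for this example.

```python
import random

def smote_like(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic points by interpolating between minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Other minority points, sorted by squared Euclidean distance to the base point.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])   # one of the k nearest neighbours
        gap = rng.random()                   # interpolation factor in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(base, neighbour)])
    return synthetic

# Toy 2-D minority class with four points.
minority = [[1.0, 1.0], [1.5, 2.0], [2.0, 1.0], [2.5, 2.5]]
new_points = smote_like(minority, n_new=6)
for point in new_points:
    print([round(v, 3) for v in point])
```

Every synthetic point lies on a line segment between two existing minority points, so the new points add variety without leaving the region the minority class already occupies.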

How can class imbalance be dealt with in multi-class classification?

Class imbalance in multi-class classification can be dealt with by decomposing the problem into binary sub-problems using techniques such as One-vs-Rest (OvR), One-vs-One (OvO), and Error-Correcting Output Codes (ECOC), and then applying the binary imbalance techniques above to each sub-problem. OvR trains a separate classifier for each class, treating all other classes as negative. OvO trains a separate classifier for each pair of classes. ECOC assigns each class a binary codeword and trains a separate classifier for each bit position of the code.
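
To see what these decompositions do to the class distribution, the sketch below builds the OvR and OvO sub-problems for an invented three-class label vector. No classifier is trained; the example only counts how many instances each binary sub-problem would contain.

```python
from itertools import combinations

# Toy multi-class labels: 70 of class "a", 25 of class "b", 5 of class "c".
y = ["a"] * 70 + ["b"] * 25 + ["c"] * 5
classes = sorted(set(y))

# One-vs-Rest: one binary label vector per class (1 = this class, 0 = all others).
ovr_labels = {c: [1 if label == c else 0 for label in y] for c in classes}
ovr_positive_counts = {c: sum(labels) for c, labels in ovr_labels.items()}

# One-vs-One: one sub-problem per pair of classes, using only those two classes.
ovo_pairs = list(combinations(classes, 2))
ovo_sizes = {pair: sum(1 for label in y if label in pair) for pair in ovo_pairs}

print(ovr_positive_counts)
print(ovo_sizes)
```

The OvR sub-problem for class "c" has 5 positives against 95 negatives, so it is more imbalanced than the original problem, which is one reason resampling or cost-sensitive learning may still be needed inside each binary sub-problem.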