Machine learning is a rapidly growing field that has revolutionized the way we approach data analysis. One of the most powerful techniques in machine learning is ensemble learning, which involves combining the predictions of multiple models to produce a more accurate overall prediction. Two popular methods of ensemble learning are bagging and boosting.

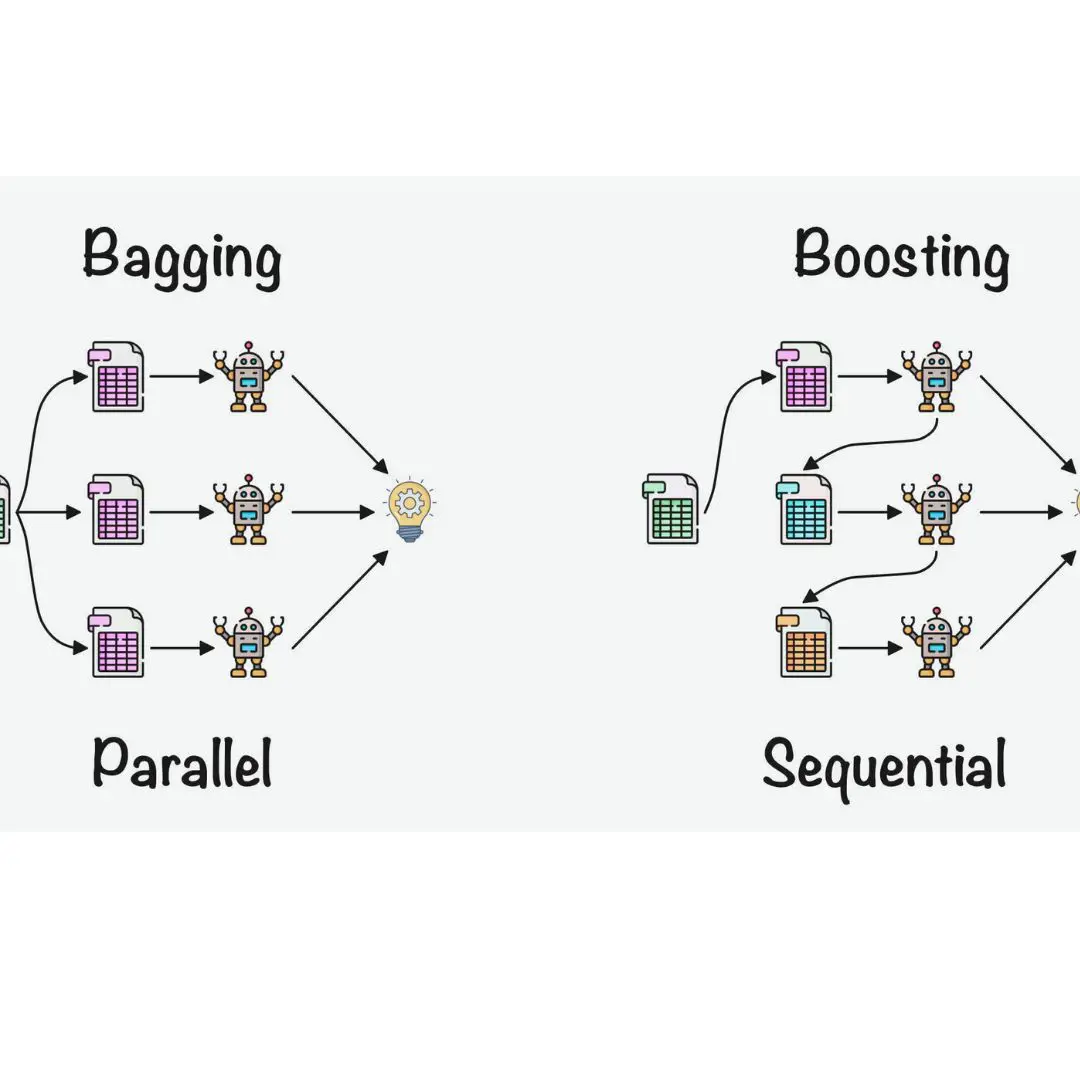

Bagging, or bootstrap aggregating, trains multiple models on bootstrapped samples of the training data and combines them by averaging their predictions (for regression) or taking a majority vote (for classification). Bagging is particularly effective with high-variance models and noisy data, because averaging reduces the variance of the individual models' predictions. Boosting, on the other hand, trains models sequentially on the same dataset, with each new model focusing on the examples that the previous models got wrong. Boosting is particularly effective when the individual models are too simple and underfit, because each round reduces the bias of the combined prediction.

Ensemble Methods

Ensemble methods are a set of machine learning techniques that combine the decisions of several base models to create a more accurate and robust predictive model. The idea behind ensemble methods is that by combining the predictions of multiple models, we can reduce the risk of overfitting and improve the overall accuracy of the model.

Bagging

Bagging, also known as bootstrap aggregating, is an ensemble learning method that reduces the variance of models trained on noisy data. In bagging, random samples are drawn from the training set with replacement, meaning that an individual data point can be chosen more than once within a sample. Each sample is used to train one base model, and the predictions of these models are then combined to form the final ensemble prediction.
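The sampling and aggregation steps can be sketched in a few lines of plain Python. This is only an illustration: the helper names `bootstrap_sample`, `majority_vote`, and `average` are made up for this example, and the list of integers stands in for real training rows.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    n = len(data)
    return [data[rng.randrange(n)] for _ in range(n)]

def majority_vote(predictions):
    """Aggregate class labels from several models by plurality vote."""
    return Counter(predictions).most_common(1)[0][0]

def average(predictions):
    """Aggregate numeric predictions from several models by their mean."""
    return sum(predictions) / len(predictions)

rng = random.Random(0)       # fixed seed so the run is repeatable
data = list(range(1000))     # stand-in for 1000 training rows
samples = [bootstrap_sample(data, rng) for _ in range(5)]

# Each bootstrap sample has the original size but repeats some rows and
# omits others, so only part of the distinct rows appear in any one sample.
unique_fractions = [len(set(s)) / len(data) for s in samples]
print(unique_fractions)

print(majority_vote(["spam", "ham", "spam"]))  # aggregation for classification
print(average([2.0, 3.0, 4.0]))                # aggregation for regression
```

For large datasets, roughly 63% of the distinct rows are expected to appear in each bootstrap sample, so every base model sees a somewhat different view of the data.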

Bagging is particularly effective when the base models are unstable or prone to overfitting. By training multiple models on different subsets of the data, bagging can help reduce the impact of outliers and noise in the data.
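One way to see why averaging helps is a standard idealization that is not part of the discussion above: suppose the $B$ base models $f_1, \dots, f_B$ each have prediction variance $\sigma^2$ at a point $x$ and every pair has correlation $\rho$. Under those assumptions, the variance of the averaged prediction is:

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} f_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

The second term shrinks as more models are added, while the first term remains. Under this simplified view, bagging helps most when the base models are individually noisy and not strongly correlated with one another.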

Boosting

Boosting is another ensemble learning method that is used to improve the accuracy of a predictive model. Unlike bagging, which trains each base model independently, boosting trains each model sequentially, with each subsequent model attempting to correct the errors of the previous model.

Boosting is particularly effective when the base models are weak or underfitting. By sequentially training models to correct the errors of the previous model, boosting can help improve the accuracy of the final ensemble model.

In summary, ensemble methods are a powerful set of machine learning techniques that can help improve the accuracy and robustness of predictive models. Bagging and boosting are two popular ensemble learning methods that can be used to reduce variance and improve accuracy respectively. By combining the predictions of multiple models, ensemble methods can help reduce the risk of overfitting and improve the overall accuracy of the model.

Random Forest is a widely used algorithm based on bagging. It combines multiple decision trees, each trained on a different bootstrap sample and restricted to a random subset of features at each split, and aggregates their predictions through voting (classification) or averaging (regression). Random Forest’s strengths include handling high-dimensional data and providing estimates of feature importance, although the forest as a whole is harder to interpret than a single decision tree.

Boosting focuses on iteratively improving the performance of a weak base model by sequentially training new models that address the shortcomings of their predecessors. Classic boosting algorithms such as AdaBoost assign a weight to each training instance, initially giving all instances equal importance. A base model is trained on the weighted data, and the weights are then updated based on that model's errors: misclassified instances gain weight, so the next model concentrates on them. The final prediction combines the predictions of all the models, typically through weighted voting.
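The weighting scheme described above corresponds closely to AdaBoost. The following is a minimal sketch of it in plain Python, assuming binary labels of +1 and -1, a single numeric feature, and one-split decision stumps as the weak learners. All function names and the toy dataset are illustrative choices, not taken from any library.

```python
import math

def stump_predict(stump, x):
    """A stump predicts `polarity` below its threshold and `-polarity` above."""
    threshold, polarity = stump
    return polarity if x < threshold else -polarity

def fit_stump(xs, ys, weights):
    """Return the stump with the lowest weighted error, plus that error."""
    values = sorted(set(xs))
    thresholds = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    best_stump, best_error = None, float("inf")
    for threshold in thresholds:
        for polarity in (1, -1):
            stump = (threshold, polarity)
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(stump, x) != y)
            if error < best_error:
                best_stump, best_error = stump, error
    return best_stump, best_error

def adaboost(xs, ys, rounds):
    """Train `rounds` stumps sequentially, reweighting instances each round."""
    n = len(xs)
    weights = [1.0 / n] * n                        # start with equal importance
    ensemble = []
    for _ in range(rounds):
        stump, error = fit_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's vote weight
        ensemble.append((alpha, stump))
        # Misclassified points (y * prediction == -1) are scaled up,
        # correctly classified points are scaled down.
        weights = [w * math.exp(-alpha * y * stump_predict(stump, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]     # renormalize to sum to 1
    return ensemble

def ensemble_predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

def accuracy(ensemble, xs, ys):
    return sum(ensemble_predict(ensemble, x) == y for x, y in zip(xs, ys)) / len(xs)

# Toy data that no single threshold can separate.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

for rounds in (1, 3):
    print(rounds, accuracy(adaboost(xs, ys, rounds), xs, ys))
```

On this toy data a single stump cannot separate the classes, while three boosted stumps fit the training set. Training accuracy alone says nothing about generalization, so a real evaluation would use held-out data.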

Gradient Boosting Machines (GBMs) are a popular family of boosting algorithms. GBMs iteratively build decision trees in a forward stage-wise manner, where each new tree is fitted to the residuals (or errors) of the previous ensemble. By gradually reducing the residuals, GBMs effectively minimize the overall prediction error and can handle complex relationships in the data.
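The residual-fitting loop can be sketched as follows. This is a simplified illustration, assuming squared-error loss, a single numeric feature, and one-split regression stumps in place of full decision trees. The `learning_rate` of 0.5 and all function names are arbitrary choices made for this example.

```python
def fit_stump(xs, targets):
    """Fit a one-split regression tree: each side predicts its mean target."""
    values = sorted(set(xs))
    best = None
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        left = [t for x, t in zip(xs, targets) if x < threshold]
        right = [t for x, t in zip(xs, targets) if x >= threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left_mean, right_mean)

def stump_predict(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x < threshold else right_mean

def gradient_boost(xs, ys, n_trees, learning_rate=0.5):
    """Stage-wise fitting: each stump is trained on the current residuals."""
    base = sum(ys) / len(ys)              # initial prediction: the mean target
    predictions = [base] * len(xs)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump_predict(stump, x)
                       for x, p in zip(xs, predictions)]
    return base, learning_rate, stumps

def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, x) for s in stumps)

def training_mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy regression problem: y = x^2 on 20 evenly spaced points.
xs = [i / 10 for i in range(20)]
ys = [x * x for x in xs]

for n_trees in (0, 1, 10, 50):
    model = gradient_boost(xs, ys, n_trees)
    print(n_trees, round(training_mse(model, xs, ys), 5))
```

The training error falls as stumps are added, because each stump removes part of the remaining residual. Production libraries add refinements such as deeper trees, regularization, and subsampling that this sketch leaves out.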

Bagging vs. Boosting

Bagging

Bagging stands for Bootstrap Aggregating and is a parallel ensemble learning method. In bagging, multiple models are trained on different bootstrap samples of the training data, and the final prediction is made by averaging the predictions of all models (for regression) or taking a majority vote (for classification). Bagging reduces the variance of the model and helps prevent overfitting. It is commonly used with decision trees as base learners.

The following table summarizes the main characteristics of bagging:

| Characteristic | Description |
|---|---|
| Type of ensemble | Parallel |
| Learning method | Aggregating |
| Weak learners | Trained independently |
| Data sampling | Random sampling with replacement |
| Voting method | Averaging |

Boosting

Boosting is a sequential ensemble learning method that trains a series of weak learners in a specific order. Each new model focuses on the samples that the previous models misclassified, for example by increasing their weights. Boosting reduces bias and improves the accuracy of the model. It is commonly used with shallow decision trees as weak learners; AdaBoost and Gradient Boosting are two well-known boosting algorithms.

The following table summarizes the main characteristics of boosting:

| Characteristic | Description |
|---|---|
| Type of ensemble | Sequential |
| Learning method | Boosting |
| Weak learners | Trained sequentially |
| Data sampling | Weighted sampling |
| Voting method | Weighted sum |

Bagging and boosting are both ensemble methods that combine multiple models to outperform any single model. Bagging is best suited for reducing variance by averaging diverse models, while boosting is best suited for reducing bias by iteratively improving on weak models. The choice between them depends on the specific problem and dataset.

Bagging is more suitable when the base model tends to overfit, as it helps to smooth out the individual model’s predictions. Boosting, on the other hand, excels when the base model is weak and can be improved by iteratively emphasizing the misclassified instances.

Applications of Ensemble Methods

Ensemble methods have been successfully applied in various fields, including finance, healthcare, and image recognition. Here are some examples:

Finance

In finance, ensemble methods have been used to predict stock prices, identify fraudulent transactions, and evaluate credit risk. By combining the predictions of multiple models, ensemble methods can improve the accuracy and robustness of financial predictions.

Healthcare

In healthcare, ensemble methods have been used to diagnose diseases, predict patient outcomes, and identify risk factors. By combining the predictions of multiple models, ensemble methods can improve the accuracy and reliability of medical diagnoses and treatments.

Image Recognition

In image recognition, ensemble methods have been used to classify objects in images, recognize faces, and detect anomalies. By combining the predictions of multiple models, ensemble methods can improve the accuracy and robustness of image recognition tasks, usually at the cost of extra computation.

Other Applications

Ensemble methods have also been applied in other fields, such as natural language processing, fraud detection, and recommendation systems. By combining the predictions of multiple models, ensemble methods can improve the performance of various machine learning tasks.

Overall, ensemble methods are a powerful technique that can improve the accuracy, reliability, and robustness of machine learning models in various applications.

Challenges and Limitations of Ensemble Methods

While ensemble methods have proven to be effective in improving the accuracy of machine learning models, they also come with several challenges and limitations. Here are some of the most significant ones:

Overfitting

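One of the main challenges of ensemble methods is that they do not eliminate the risk of overfitting. When the base models are too complex, they can capture the noise in the data and produce an ensemble that performs well on the training set but poorly on the test set. Boosting is especially exposed to this, because later models keep concentrating on the hardest examples, which may simply be noisy. To limit overfitting, it helps to constrain the complexity of the base models and to validate the ensemble on held-out data.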

Computational Complexity

Ensemble methods require a significant amount of computational resources, especially when dealing with large datasets. Building multiple models and combining their predictions can be time-consuming and resource-intensive. Additionally, some ensemble methods, such as boosting, must train the base models sequentially, which limits parallelism and can lengthen training time, whereas the independent models in bagging can be trained in parallel.

Lack of Transparency

Ensemble methods can be challenging to interpret and explain, especially when using complex models. Unlike single models, where the decision-making process is clear, ensemble models combine the decisions of multiple models, making it harder to understand how the final decision was reached. This can be a problem in industries where transparency is crucial, such as healthcare and finance.

Imbalanced Data

Ensemble methods can struggle with imbalanced datasets, where the distribution of classes is uneven. If the majority class dominates the dataset, the ensemble model may favor it, leading to poor performance on the minority class. To address this issue, techniques such as data resampling and weighting can be used to balance the dataset.
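As a small illustration of the weighting approach, the sketch below computes inverse-frequency class weights so that each class contributes the same total weight during training. The helper name `balanced_class_weights` and the example labels are hypothetical. The formula is the same inverse-frequency heuristic that scikit-learn documents for its `class_weight="balanced"` option.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Hypothetical dataset: 90 legitimate transactions and 10 fraudulent ones.
labels = ["ok"] * 90 + ["fraud"] * 10
weights = balanced_class_weights(labels)
print(weights)

# With these weights, both classes carry the same total weight.
totals = {cls: weights[cls] * count for cls, count in Counter(labels).items()}
print(totals)
```

Weights like these can be passed to base learners that accept per-class or per-sample weights. Whether that works better than resampling depends on the dataset and the model.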

In conclusion, while ensemble methods can improve the accuracy of machine learning models, they also come with several challenges and limitations that must be considered. By understanding these limitations, we can use ensemble methods more effectively and avoid potential pitfalls.

Frequently Asked Questions

What is the difference between bagging and boosting in ensemble learning?

Bagging and boosting are two popular ensemble methods in machine learning. Bagging trains multiple models independently on different bootstrap samples of the training data and combines their predictions, while boosting trains models sequentially, with each new model focusing on the data points that the previous models misclassified.

What is the general principle of ensemble methods in machine learning?

The general principle of ensemble methods in machine learning is to combine the predictions of multiple models to improve the overall accuracy and robustness of the prediction. Ensemble methods can help to reduce the variance and bias of the model and can lead to better generalization performance.

What are examples of bagging and boosting in machine learning?

An example of bagging is the random forest algorithm, which combines multiple decision trees trained on different bootstrap samples of the training data. An example of boosting is the AdaBoost algorithm, which trains a sequence of weak learners, increasing the weights of misclassified data points after each round so that later learners concentrate on them.

What characterizes boosting compared to bagging in ensemble methods?

Boosting tends to focus on the misclassified data points and can lead to better performance on the training data, but may be more prone to overfitting. Bagging, on the other hand, tends to reduce the variance of the model and can lead to better generalization performance.

What are the most commonly used ensemble methods in machine learning?

The most commonly used ensemble methods in machine learning include bagging, boosting, random forests, and gradient boosting.

How does gradient boosting differ from AdaBoost in ensemble learning?

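Both are boosting algorithms that build an additive model from weak learners. AdaBoost reweights the training instances after each round so that the next learner concentrates on misclassified points, which corresponds to minimizing an exponential loss. Gradient boosting instead fits each new learner to the negative gradient of a chosen differentiable loss function (for squared error, the residuals), a form of gradient descent in function space. Gradient boosting is therefore more flexible and can handle a wider range of loss functions, but may be more computationally expensive.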