
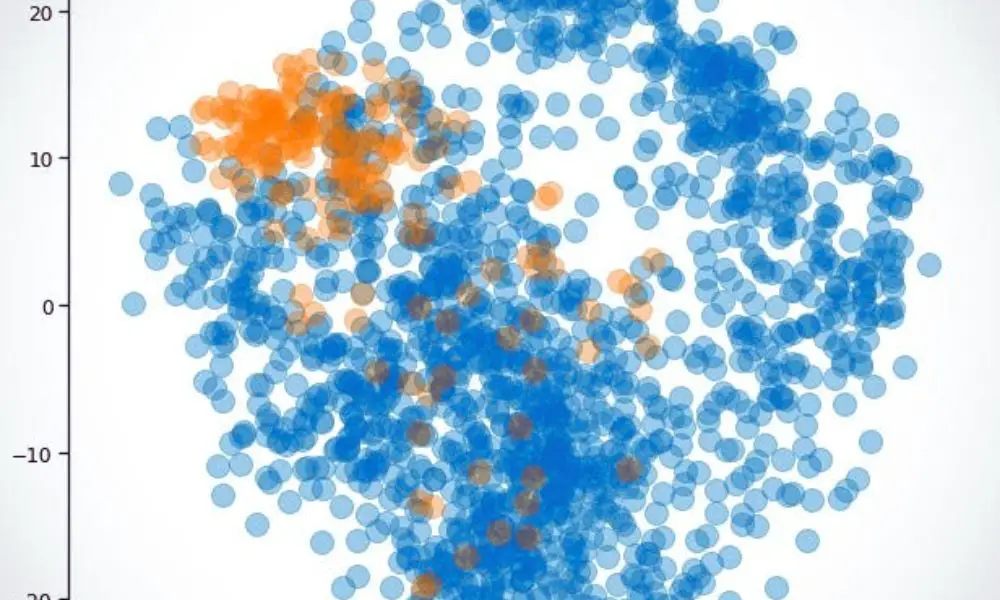

SMOTE (Synthetic Minority Over-sampling Technique) is a powerful tool for handling imbalanced data in machine learning. In many real-world scenarios, datasets are often imbalanced, meaning that one class may have significantly fewer observations than others. This can lead to biased models that perform poorly on the minority class. SMOTE is a technique that helps to address this issue by generating synthetic samples of the minority class, thereby balancing the dataset.

The SMOTE algorithm was first introduced in a 2002 paper by Nitesh V. Chawla, Kevin W. Bowyer, Lawrence O. Hall, and W. Philip Kegelmeyer. Since then, it has become a widely used technique for handling imbalanced data in machine learning. SMOTE works by randomly selecting a minority class observation and generating new synthetic observations that are similar to it. This is done by selecting one or more of its nearest neighbors and creating new observations along the line segments that connect them. The result is a larger, more balanced dataset that can be used to train machine learning models that are less biased towards the majority class.

What is SMOTE?

SMOTE, which stands for Synthetic Minority Over-sampling Technique, is a technique used in machine learning to address the problem of imbalanced datasets. An imbalanced dataset is one in which one class is significantly more represented than the others. For example, in a fraud detection dataset, the number of fraudulent transactions is typically far smaller than the number of legitimate transactions.

Traditional classification algorithms often struggle to learn from imbalanced data because they tend to prioritize the majority class and fail to capture the underlying patterns of the minority class.

SMOTE is a type of oversampling technique that creates synthetic samples of the minority class. It generates new examples by interpolating between existing minority class examples. The technique works by selecting a random minority class sample and finding its k nearest neighbors within the minority class. A synthetic sample is then generated by randomly selecting one of the k neighbors and placing a new sample at a random point on the line segment between the original sample and the selected neighbor.
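The interpolation step can be illustrated with a minimal sketch in plain Python. This is not the reference implementation: the function name `smote_sketch`, its parameters, and the toy data are hypothetical, and the neighbor search is a brute-force Euclidean search chosen for readability.

```python
import math
import random


def smote_sketch(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic points from a list of minority-class feature vectors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        # Pick a random minority sample as the base point.
        i = rng.randrange(len(minority))
        base = minority[i]
        # Find its k nearest minority neighbors (brute force, Euclidean distance).
        others = [p for j, p in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(base, p))[:k]
        # Pick one neighbor and a random position along the connecting segment.
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
    return synthetic


# Hypothetical toy minority class with two features.
minority = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3], [0.9, 0.8]]
new_points = smote_sketch(minority, n_new=10, k=3)
```

Because every synthetic point lies on a segment between two real minority samples, the new points stay inside the region the minority class already occupies rather than duplicating existing rows.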

SMOTE is a popular technique because it can improve the performance of a classifier on imbalanced datasets without requiring additional data collection. It can be used with a variety of classifiers, including decision trees, Naive Bayes, and support vector machines.

In summary, SMOTE is an oversampling technique that generates synthetic samples of the minority class by interpolating between existing minority class examples. It is a powerful tool for addressing the problem of imbalanced data sets and can improve the performance of a classifier without requiring additional data collection.

How to use SMOTE?

To use SMOTE for balancing imbalanced datasets, follow these simple steps:

- Identify the minority class in your dataset. This is the class that has fewer samples than the majority class.

- Split your dataset into training and testing sets before any resampling. It is important to ensure that the minority class is represented in both sets, for example by using a stratified split.

- Apply random undersampling to the majority class in the training set to reduce the number of examples in that class. This will help to balance the class distribution.

- Apply SMOTE to the minority class in the training set to oversample it. This will create synthetic examples of the minority class to balance the class distribution.

- Combine the under-sampled majority class with the oversampled minority class to create a balanced training set.

- Train your machine learning model on the balanced dataset.

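It is important to note that SMOTE should be applied only to the training set, after the split, and never to the testing set. The testing set must keep the original class distribution so that evaluation reflects real-world performance. If synthetic samples are generated before the split or added to the testing set, information from training points leaks into the evaluation and the reported scores become overly optimistic.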

In addition, it is recommended to experiment with different values of the SMOTE parameters, such as the number of nearest neighbors to use, to find the optimal balance between oversampling and avoiding overfitting.

Overall, SMOTE is a powerful technique for dealing with imbalanced datasets, but it should be used with caution and in conjunction with other techniques, such as random undersampling, to achieve the best results.

Frequently Asked Questions

What is the purpose of using SMOTE for imbalanced data?

SMOTE is a popular oversampling technique used to address the problem of imbalanced data. The technique generates synthetic minority class samples by interpolating between existing minority class samples. This helps to balance the class distribution and improve the performance of machine learning models trained on imbalanced data.

How does SMOTE algorithm work?

The SMOTE algorithm works by identifying minority class samples that are close to one another and then creating synthetic samples along the line segments that connect them. For each selected minority class sample, one of its k nearest minority class neighbors is chosen at random, and a new synthetic sample is placed at a random point on the line segment between the two by linear interpolation.

What are the advantages of using SMOTE over other oversampling techniques?

One of the main advantages of using SMOTE over simpler oversampling techniques, such as random oversampling, is that it creates new samples instead of duplicating existing ones. Exact duplicates encourage a model to memorize individual minority class points, whereas interpolated samples fill in the region between them. This helps to reduce the risk of overfitting and improve the generalization performance of machine learning models trained on imbalanced data. Additionally, SMOTE is a simple and easy-to-implement technique that can be applied to a wide range of machine-learning problems.

Can SMOTE be used for multiclass imbalance problems?

Yes, SMOTE can be used for multiclass imbalance problems. In this case, the technique is applied independently to each minority class in the dataset. Synthetic samples are generated for each minority class by interpolating between existing samples of the same class.

How do you implement SMOTE in Python using imblearn or sklearn?

SMOTE can be used in Python through the imbalanced-learn library, which is imported as imblearn. scikit-learn (sklearn) itself does not include SMOTE, but imbalanced-learn is designed to work with scikit-learn estimators. The implementation involves creating a SMOTE object, calling its fit_resample method on the training data, and then training a machine-learning model on the resampled data.

Are there any limitations or drawbacks to using SMOTE for imbalanced data?

One of the main limitations of SMOTE is that it can generate noisy samples if the minority class is highly overlapping with the majority class. Additionally, SMOTE can lead to overfitting if the synthetic samples are too similar to the original minority class samples. Finally, SMOTE does not address the problem of imbalanced data at the feature level, which can also affect the performance of machine learning models trained on imbalanced data.