XGBoost and LightGBM are two of the most popular and powerful boosting algorithms used in machine learning. These algorithms are designed to improve the performance of models by combining the predictions of multiple weak models. Boosting algorithms work by iteratively training weak models on the residuals of the previous models until the desired level of accuracy is achieved.

XGBoost and LightGBM are both gradient boosting implementations built on decision trees. Each new tree is fit to the gradient of the loss function with respect to the current predictions, which amounts to gradient descent in function space. XGBoost adds a regularized objective function to prevent overfitting. LightGBM builds its trees from feature histograms and introduces a technique called Gradient-based One-Side Sampling (GOSS), which reduces the number of data points used to evaluate splits with little loss of accuracy.

Both XGBoost and LightGBM often outperform other popular algorithms like Random Forest and Neural Networks in real-world applications, particularly on tabular data. They are widely used in various domains, including finance, healthcare, and e-commerce, where accuracy and speed are critical. In this article, we will explore the key features of XGBoost and LightGBM and how they can be used to achieve strong performance in machine learning.

Overview of Boosted Gradient Algorithms

What are Boosted Gradient Algorithms?

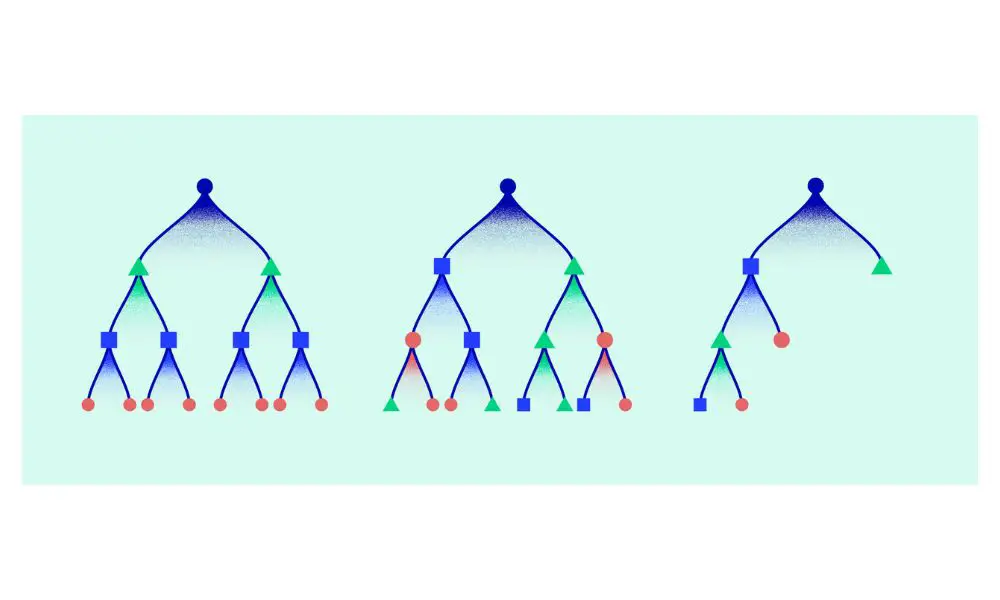

Boosted Gradient Algorithms are a type of ensemble learning method that combines multiple weak models to create a strong model. The weak models are usually shallow decision trees trained sequentially, where each new tree is trained to correct the errors of the trees before it. The term “gradient” refers to the gradient of the loss function with respect to the current predictions: each new tree is fit to the negative gradient, which for squared-error loss is simply the residual between the actual and predicted values.
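The sequential training described above can be sketched in plain Python. This is a minimal illustration, not how XGBoost or LightGBM are implemented: it assumes a single numeric feature, squared-error loss, and one-split trees (stumps), and the function names are hypothetical.

```python
def fit_stump(xs, targets):
    """Fit a one-split regression tree on a single feature.

    Assumes xs contains at least two distinct values.
    """
    best = None
    # Try a split after every distinct feature value except the largest.
    for threshold in sorted(set(xs))[:-1]:
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((t - left_mean) ** 2 for t in left)
        error += sum((t - right_mean) ** 2 for t in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def fit_gradient_boosting(xs, ys, n_rounds=50, learning_rate=0.3):
    """Boost stumps on residuals, the negative gradient of squared error."""
    base = sum(ys) / len(ys)  # constant initial prediction
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Shrink each tree's contribution by the learning rate.
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return {"base": base, "learning_rate": learning_rate, "stumps": stumps}


def predict(model, x):
    correction = sum(predict_stump(s, x) for s in model["stumps"])
    return model["base"] + model["learning_rate"] * correction


def mean_squared_error(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)
```

Each round fits a new stump to what the current ensemble still gets wrong, so the training error shrinks as rounds are added. The learning rate trades off how quickly the error falls against how many trees are needed.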

Why are Boosted Gradient Algorithms Important?

Boosted Gradient Algorithms are important because they often provide strong performance compared to other machine learning algorithms, particularly on tabular data. They are especially useful for complex datasets with a large number of features and observations. These algorithms are also highly flexible, allowing for the optimization of various parameters to achieve the best results.

The two most popular Boosted Gradient Algorithms are XGBoost and LightGBM. XGBoost is known for its scalability and speed, while LightGBM is known for its efficiency and accuracy. Both algorithms have been widely used in various fields, including finance, healthcare, and e-commerce.

In summary, Boosted Gradient Algorithms are a powerful tool for machine learning that can provide superior performance compared to other algorithms. They are highly flexible and can be optimized to achieve the best results. XGBoost and LightGBM are two of the most popular Boosted Gradient Algorithms, each with their own unique strengths.

XGBoost Algorithm

What is XGBoost?

XGBoost (eXtreme Gradient Boosting) is a popular machine learning algorithm that is used for predictive modeling problems, such as classification and regression on tabular data. It was introduced by Tianqi Chen and is currently a part of a wider toolkit by DMLC (Distributed Machine Learning Community).

How does XGBoost Work?

XGBoost works by building an ensemble of decision trees. The algorithm starts from a constant initial prediction and then iteratively adds new trees to the ensemble, each built to reduce the errors left by the trees already in it.

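One of the key features of XGBoost is how it applies gradient boosting to optimize the ensemble. Rather than reweighting the samples in the training set, as AdaBoost does, gradient boosting fits each new tree to the gradient of the loss function evaluated at the current predictions. XGBoost uses both the first and second derivatives of the loss, together with a regularization penalty on the leaf weights and the number of leaves. Samples with large errors produce large gradients and therefore have the most influence on the next tree, which leads to better overall performance.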
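XGBoost's regularized objective can be made concrete with the leaf-weight and split-gain formulas published in the XGBoost paper. The sketch below is a simplified illustration rather than library code: it assumes squared-error loss (gradient = prediction minus target, Hessian = 1), and the function names are hypothetical. The arguments `reg_lambda` and `gamma` stand for the L2 penalty on leaf weights and the per-leaf complexity penalty.

```python
def leaf_weight(grads, hessians, reg_lambda=1.0):
    """Optimal leaf value: w* = -G / (H + lambda)."""
    return -sum(grads) / (sum(hessians) + reg_lambda)


def structure_score(grads, hessians, reg_lambda):
    """G^2 / (H + lambda), the quality term for a single leaf."""
    g_sum = sum(grads)
    return g_sum * g_sum / (sum(hessians) + reg_lambda)


def split_gain(left_g, left_h, right_g, right_h, reg_lambda=1.0, gamma=0.0):
    """Loss reduction from splitting one leaf into a left and right child.

    A split is only worthwhile when the gain is positive, so a larger
    gamma prunes more aggressively.
    """
    parent = structure_score(left_g + right_g, left_h + right_h, reg_lambda)
    children = (structure_score(left_g, left_h, reg_lambda)
                + structure_score(right_g, right_h, reg_lambda))
    return 0.5 * (children - parent) - gamma


# Squared-error example: targets [1, 1, 3, 3], current prediction 2 everywhere.
targets = [1.0, 1.0, 3.0, 3.0]
predictions = [2.0, 2.0, 2.0, 2.0]
grads = [p - t for p, t in zip(predictions, targets)]
hessians = [1.0] * len(targets)
```

With `reg_lambda` set to zero the leaf weight is the plain mean residual. Increasing it shrinks leaf values toward zero, which is one way the regularized objective limits overfitting.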

What are the Benefits of XGBoost?

XGBoost has several benefits that make it a popular choice for machine learning practitioners. Some of these benefits include:

- Speed: XGBoost is designed to be computationally efficient, which makes it ideal for large datasets and real-time applications.

- Scalability: XGBoost can be easily parallelized across multiple CPUs and GPUs, which allows it to scale to very large datasets.

- Accuracy: XGBoost is known for its high accuracy and has been used in many winning solutions to machine learning competitions, such as those on Kaggle.

- Flexibility: XGBoost can handle numerical features, missing values, and categorical features (natively in recent versions, or through encoding). Text data must first be converted into numerical features.

- Interpretability: XGBoost provides feature importance scores, and its models can be examined with general-purpose tools such as partial dependence plots.

Overall, XGBoost is a powerful algorithm that can be used to solve a wide range of machine learning problems.

LightGBM Algorithm

What is LightGBM?

LightGBM, or Light Gradient Boosting Machine, is a gradient boosting decision tree algorithm created by Microsoft. It is designed to be fast, efficient, and scalable, making it well-suited for large-scale machine learning tasks. LightGBM uses a histogram-based algorithm that bins continuous feature values into discrete bins, which speeds up the training process and reduces memory usage.
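The histogram idea can be sketched in a few lines of plain Python. This is an illustration under simplifying assumptions, not LightGBM's actual implementation: it uses equal-width bins, a single feature, and squared-error loss (so every Hessian is 1 and the sample count stands in for the Hessian sum). All function names are hypothetical.

```python
from bisect import bisect_right


def make_bin_edges(values, n_bins):
    """Equal-width bin boundaries between the smallest and largest value."""
    low, high = min(values), max(values)
    width = (high - low) / n_bins
    return [low + width * i for i in range(1, n_bins)]


def build_histogram(values, grads, edges):
    """Accumulate gradient sums and sample counts per bin in one pass."""
    grad_sums = [0.0] * (len(edges) + 1)
    counts = [0] * (len(edges) + 1)
    for value, grad in zip(values, grads):
        b = bisect_right(edges, value)
        grad_sums[b] += grad
        counts[b] += 1
    return grad_sums, counts


def best_split_from_histogram(grad_sums, counts, edges):
    """Scan bin boundaries instead of every distinct feature value."""
    total_grad, total_count = sum(grad_sums), sum(counts)
    best_score, best_threshold = None, None
    left_grad, left_count = 0.0, 0
    for b, edge in enumerate(edges):
        left_grad += grad_sums[b]
        left_count += counts[b]
        right_grad = total_grad - left_grad
        right_count = total_count - left_count
        if left_count == 0 or right_count == 0:
            continue
        # Higher is better: G_L^2 / n_L + G_R^2 / n_R.
        score = left_grad ** 2 / left_count + right_grad ** 2 / right_count
        if best_score is None or score > best_score:
            best_score, best_threshold = score, edge
    return best_threshold
```

Once the histogram is built, the cost of finding a split depends on the number of bins rather than the number of rows, which is where the speed and memory savings come from.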

How does LightGBM Work?

LightGBM works by iteratively building decision trees that predict the residual errors of the previous iteration. It uses the gradients of the loss function to evaluate candidate splits for each node in the tree. LightGBM also grows trees leaf-wise: at each step it splits whichever leaf yields the largest loss reduction, instead of expanding the tree one full level at a time. For the same number of leaves, this usually reaches a lower loss than level-wise growth, but it can produce deep trees that overfit small datasets, so the number of leaves and the maximum depth typically need to be constrained.
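Leaf-wise growth can be sketched as a best-first search over leaves. The code below is a toy illustration, not LightGBM's implementation: it assumes one numeric feature and squared-error loss, and the function names are hypothetical. A heap keeps the splittable leaf with the largest gain on top, and growth stops once a leaf budget (`max_leaves` here) is reached.

```python
import heapq
from itertools import count


def sse(ys):
    """Sum of squared errors around the mean."""
    if not ys:
        return 0.0
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def best_split(points):
    """Return (gain, index) of the best split of x-sorted (x, y) points."""
    parent = sse([y for _, y in points])
    best = None
    for i in range(1, len(points)):
        if points[i - 1][0] == points[i][0]:
            continue  # cannot split between identical feature values
        left = sse([y for _, y in points[:i]])
        right = sse([y for _, y in points[i:]])
        gain = parent - left - right
        if best is None or gain > best[0]:
            best = (gain, i)
    return best


def grow_leaf_wise(xs, ys, max_leaves):
    """Repeatedly split the leaf with the largest gain."""
    tie_breaker = count()  # keeps heap entries comparable on equal gains
    heap = []              # splittable leaves, largest gain first
    finished = []          # leaves with no useful split left

    def add_leaf(points):
        split = best_split(points)
        if split is None or split[0] <= 0:
            finished.append(points)
        else:
            gain, index = split
            heapq.heappush(heap, (-gain, next(tie_breaker), index, points))

    add_leaf(sorted(zip(xs, ys)))
    while heap and len(heap) + len(finished) < max_leaves:
        _, _, index, points = heapq.heappop(heap)
        add_leaf(points[:index])
        add_leaf(points[index:])
    return sorted(finished + [entry[3] for entry in heap])
```

Because the next split always goes to the most promising leaf, the tree can become deep on one side while other branches stay shallow. That is why a cap on the number of leaves matters more here than in level-wise growth.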

What are the Benefits of LightGBM?

There are several benefits to using LightGBM for machine learning tasks. One of the main advantages is its speed and efficiency. Because it uses a histogram-based algorithm and a leaf-wise approach to tree building, it can often train models faster than gradient boosting implementations that rely on exact, pre-sorted split finding. It also has lower memory usage, which makes it well-suited for large-scale datasets.

Another benefit of LightGBM is its accuracy. Because leaf-wise growth always splits the leaf with the largest loss reduction, it tends to reach a lower training loss than level-wise growth for the same number of leaves. LightGBM also has built-in support for handling categorical features, which many other algorithms can only use after encoding.

Overall, LightGBM is a powerful algorithm that can be used for a wide range of machine learning tasks. Its speed, efficiency, and accuracy make it a popular choice for large-scale datasets and time-sensitive applications.

Comparison of XGBoost and LightGBM

Performance Comparison

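XGBoost and LightGBM are both popular and highly effective gradient boosting algorithms. However, they differ in how they grow trees and optimize performance. XGBoost traditionally grows trees level-wise and originally relied on an exact, pre-sorted split-finding algorithm, although it now also offers a histogram-based method. LightGBM uses a histogram-based approach with leaf-wise growth and can additionally apply Gradient-based One-Side Sampling (GOSS).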

In terms of performance, both algorithms often outperform other popular algorithms like Random Forest and SVM on tabular data. However, there have been some studies that suggest LightGBM may perform slightly better in certain scenarios, especially when dealing with large datasets with many features.

Ease of Use Comparison

When it comes to ease of use, XGBoost is often considered easier to get started with. It has a more established community and a larger number of available resources and tutorials. On the other hand, LightGBM can be more challenging to tune: because its trees grow leaf-wise, settings such as the number of leaves need care to avoid overfitting, which calls for more advanced knowledge of gradient boosting algorithms.

Scalability Comparison

Both XGBoost and LightGBM are highly scalable and can handle large datasets with millions of observations and thousands of features. However, LightGBM has been shown to be more efficient in terms of memory usage and training time, especially when dealing with high-dimensional data.

Overall, both XGBoost and LightGBM are powerful and effective gradient boosting algorithms that can be used in a wide range of applications. The choice between the two will depend on the specific needs of the project, including the size of the dataset, the complexity of the problem, and the level of expertise of the user.

| Metric | XGBoost | LightGBM |
|---|---|---|
| Performance | Highly effective | Highly effective (may perform slightly better in some scenarios) |
| Ease of Use | More user-friendly | More advanced knowledge required |
| Scalability | Highly scalable | More efficient in terms of memory usage and training time (especially for high-dimensional data) |

Frequently Asked Questions

What are the differences between XGBoost and LightGBM in terms of performance?

XGBoost and LightGBM are both gradient boosting algorithms that can be used for classification and regression problems. However, LightGBM is known to be faster and more memory-efficient than XGBoost. In terms of accuracy, both algorithms are comparable, but LightGBM may perform better on large datasets.

How does LightGBM achieve faster performance compared to XGBoost?

LightGBM achieves faster performance mainly through its histogram-based algorithm for computing the best split, which is faster than the exact, pre-sorted algorithm that XGBoost originally used (XGBoost now also offers a histogram-based method). LightGBM also introduced a technique called Gradient-based One-Side Sampling (GOSS). When finding a split value, GOSS keeps the data instances with large gradients and randomly samples from those with small gradients, which reduces the amount of computation needed.
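The sampling step of GOSS, as described in the LightGBM paper, can be sketched as follows. This is a simplified illustration rather than LightGBM's code: the function name and the `top_rate`, `other_rate`, and `seed` arguments are chosen here for illustration. Instances with the largest absolute gradients are always kept, a random subset of the rest is kept, and the sampled small-gradient instances are up-weighted by (1 - top_rate) / other_rate so that gradient sums stay approximately unbiased.

```python
import random


def goss_sample(grads, top_rate=0.2, other_rate=0.1, seed=0):
    """Return {row_index: weight} for the rows kept by GOSS."""
    n = len(grads)
    # Rank rows by absolute gradient, largest first.
    order = sorted(range(n), key=lambda i: abs(grads[i]), reverse=True)
    n_top = int(n * top_rate)
    n_other = int(n * other_rate)

    top_rows = order[:n_top]   # always kept: the poorly fit rows
    rest = order[n_top:]       # well-fit rows, sampled at random
    rng = random.Random(seed)
    sampled_rows = rng.sample(rest, min(n_other, len(rest)))

    # Compensate for having dropped most of the small-gradient rows.
    amplify = (1.0 - top_rate) / other_rate
    weights = {i: 1.0 for i in top_rows}
    weights.update({i: amplify for i in sampled_rows})
    return weights
```

With the rates shown, only 30% of the rows take part in split finding, yet the rows the model currently fits worst are all still present.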

What are the advantages and disadvantages of using XGBoost and LightGBM?

One advantage of using XGBoost and LightGBM is that they are both highly accurate and can handle complex datasets. However, XGBoost may be more suitable for smaller datasets, while LightGBM may be better for larger datasets due to its faster performance. One disadvantage of using these algorithms is that they can be difficult to tune and require a lot of computational resources.

What are the key features of gradient boosting algorithms?

Gradient boosting algorithms are a type of ensemble machine learning algorithm that combines multiple weak learners to create a stronger model. They work by iteratively adding new models that correct the errors of the previous models. Gradient boosting algorithms are known for their high accuracy and ability to handle complex datasets.

How do XGBoost and LightGBM compare to other popular algorithms like Random Forest and CatBoost?

XGBoost and LightGBM are both gradient boosting algorithms. Random Forest is also built from decision trees, but it trains them independently on bootstrap samples and averages their predictions (bagging) instead of training them sequentially. CatBoost is a gradient boosting algorithm that is optimized for categorical features. In terms of accuracy, XGBoost and LightGBM are comparable to Random Forest and CatBoost, but may perform better on certain types of datasets.

Which algorithm is recommended for gradient boosting based on performance?

The choice between XGBoost and LightGBM depends on the specific needs of the project. If speed and memory efficiency are important factors, LightGBM may be the better choice. If accuracy is the primary concern and the dataset is not too large, XGBoost may be a good option. Ultimately, it is recommended to try both algorithms and compare their performance on the specific dataset.
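A side-by-side comparison of this kind can be sketched with a small harness that times training and scores each model on held-out data. The harness below is a hypothetical helper, not part of either library. It assumes only that each model exposes `fit(X, y)` and `predict(X)`, and it uses two trivial stand-in models so that it runs without third-party packages.

```python
import math
import time


class MeanRegressor:
    """Stand-in model: always predicts the training mean."""

    def fit(self, X, y):
        self.mean_ = sum(y) / len(y)
        return self

    def predict(self, X):
        return [self.mean_ for _ in X]


class NearestNeighborRegressor:
    """Stand-in model: predicts the target of the closest training row."""

    def fit(self, X, y):
        self.rows_ = list(zip(X, y))
        return self

    def predict(self, X):
        def distance(a, b):
            return sum((p - q) ** 2 for p, q in zip(a, b))
        return [min(self.rows_, key=lambda row: distance(row[0], x))[1]
                for x in X]


def compare_models(models, X_train, y_train, X_test, y_test):
    """Fit each model, then report test RMSE and training time."""
    results = {}
    for name, model in models.items():
        start = time.perf_counter()
        model.fit(X_train, y_train)
        fit_seconds = time.perf_counter() - start
        preds = model.predict(X_test)
        squared = [(p - t) ** 2 for p, t in zip(preds, y_test)]
        results[name] = {
            "rmse": math.sqrt(sum(squared) / len(squared)),
            "fit_seconds": fit_seconds,
        }
    return results
```

Assuming the `xgboost` and `lightgbm` packages are installed, their scikit-learn-style estimators (for example `xgboost.XGBRegressor` and `lightgbm.LGBMRegressor`) also expose `fit` and `predict`, so they could be passed in place of the stand-ins, with the data converted to the array format those libraries expect. Timings from a single run are noisy, so repeating the comparison over several splits gives a more reliable picture.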