Voting classifiers and regressors are ensemble learning techniques that combine the predictions of multiple individual models to make a final prediction. The base models are usually different algorithms trained on the same data, although they can also be trained on different subsets of it. By harnessing the collective wisdom of diverse models, a voting ensemble can often make more accurate predictions than any individual model achieves on its own.

One of the key advantages of voting classifiers and regressors is that they can help to mitigate the risk of overfitting. By aggregating the predictions of several diverse models, we can reduce the impact of any individual model’s biases or errors, provided those errors are not all made on the same examples. This can lead to more robust and reliable predictions, even in complex and noisy datasets.

Voting is one of several ensemble approaches, each with its own strengths and weaknesses. Related methods include bagging (of which random forests are a well-known example), boosting, and stacking. Each of these methods has its own approach to building and combining multiple models, and may be more or less suitable depending on the specific problem at hand. By understanding the strengths and weaknesses of each approach, we can select the most appropriate method for our particular use case.

Voting Classifiers

Voting classifiers are a type of ensemble learning method that combines multiple machine learning models to achieve better predictive performance. The idea behind voting classifiers is to harness collective wisdom by aggregating the predictions of multiple models. In this section, we will discuss the definition, types, advantages, and disadvantages of voting classifiers.

Definition

A voting classifier is a machine learning model that combines the predictions of multiple models to make a final prediction. The models used in a voting classifier are often different in terms of the algorithms, hyperparameters, and training data used to train them. The predictions of the models can be combined using different methods, such as majority voting, weighted voting, and soft voting.

Types

There are two main types of voting classifiers: hard voting and soft voting. In hard voting, each model predicts a class label and the final prediction is the majority vote; in other words, the class with the most votes is chosen. In soft voting, each model outputs a probability for every class, these probabilities are averaged across models (optionally with a weight per model), and the class with the highest average probability is chosen. Soft voting therefore requires base models that can produce class probabilities.
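The difference between the two rules is easiest to see when they disagree. The following is a minimal sketch in plain Python, not a library implementation; the function names and the three sets of class probabilities are made up for illustration.

```python
from collections import Counter

def hard_vote(labels):
    """Return the label predicted by the most models (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]

def soft_vote(probas, weights=None):
    """Average per-class probabilities across models; return (winning class index, averages)."""
    if weights is None:
        weights = [1.0] * len(probas)
    total = sum(weights)
    n_classes = len(probas[0])
    avg = [
        sum(w * p[c] for w, p in zip(weights, probas)) / total
        for c in range(n_classes)
    ]
    return max(range(n_classes), key=avg.__getitem__), avg

# Hypothetical outputs [P(class 0), P(class 1)] from three models for one sample.
probas = [[0.55, 0.45], [0.60, 0.40], [0.10, 0.90]]

# Each model's own label is the class it considers most likely.
labels = [max(range(2), key=p.__getitem__) for p in probas]

hard_label = hard_vote(labels)              # two of three models pick class 0
soft_label, avg_proba = soft_vote(probas)   # one very confident model tips the average
```

Here two lukewarm votes for class 0 win the hard vote, while the single confident vote for class 1 wins the soft vote, which is the sense in which soft voting takes model confidence into account.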

Advantages

Voting classifiers have several advantages over single models. First, they can improve the predictive performance of models by combining the strengths of multiple models. Second, they can reduce the risk of overfitting by using multiple models with different biases. Third, they can be more robust to noisy data by averaging out the errors of individual models.

Disadvantages

Voting classifiers also have some disadvantages. First, they can be computationally expensive, especially when using a large number of models. Second, they can be less interpretable than single models, as the final prediction is based on the predictions of multiple models. Finally, they can be less effective when the models used in the voting classifier are highly correlated, as the predictions of the models may be too similar to provide any additional benefit.

Voting Regressors

Definition

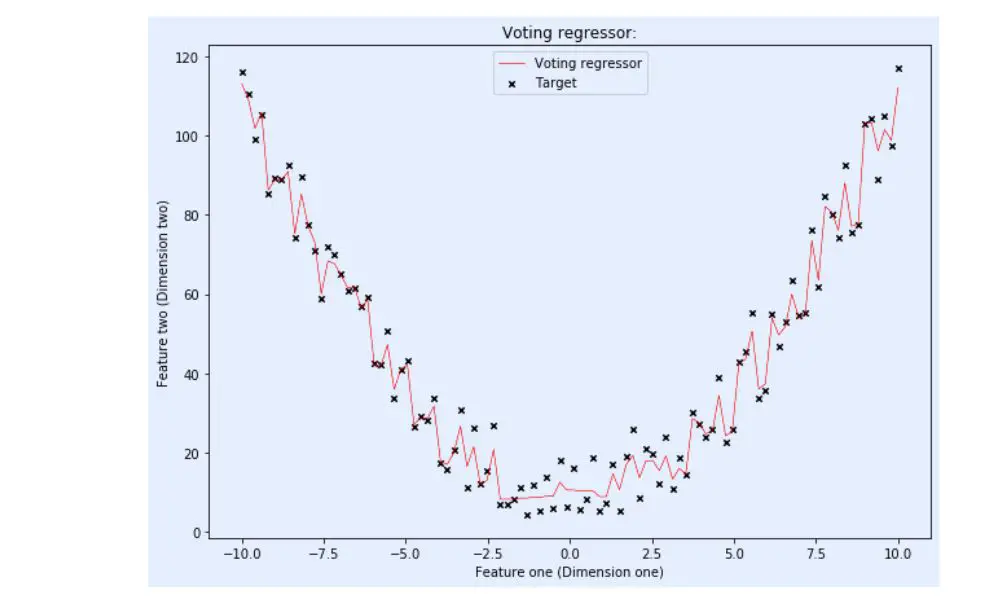

A voting regressor is an ensemble meta-estimator that combines the predictions of several base regressors to form a final prediction. It fits each base regressor on the entire dataset and then averages their individual predictions. The goal is to leverage the collective wisdom of multiple models to improve the accuracy and robustness of the final prediction.
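As a rough illustration of the averaging step only (the fitting of the base regressors is left out), the sketch below combines three made-up predictions for a single sample. The function name is hypothetical, and the optional weights correspond to the weighted variant described later in this article.

```python
def voting_regressor_predict(predictions, weights=None):
    """Combine base-regressor predictions for one sample by (weighted) averaging."""
    if weights is None:
        weights = [1.0] * len(predictions)
    if len(weights) != len(predictions):
        raise ValueError("need exactly one weight per base regressor")
    return sum(w * p for w, p in zip(weights, predictions)) / sum(weights)

# Hypothetical predictions from three base regressors for the same input.
base_predictions = [10.0, 12.0, 17.0]

plain = voting_regressor_predict(base_predictions)
weighted = voting_regressor_predict(base_predictions, weights=[2.0, 1.0, 1.0])
```

With equal weights the result is the ordinary mean; giving the first regressor twice the weight pulls the combined prediction toward its value.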

Types

Unlike voting classifiers, voting regressors do not have hard and soft variants, because regressors predict continuous values rather than class labels or class probabilities. Instead, the two options are a simple average, in which every base regressor contributes equally, and a weighted average, in which each base regressor's prediction is multiplied by a weight before averaging. Weights are useful when some base regressors are known to be more reliable than others.

Advantages

The main advantage of voting regressors is that they can improve the accuracy and robustness of the final prediction compared to a single regressor. By combining the predictions of multiple models, voting regressors can reduce the impact of individual model biases and errors, and capture a wider range of patterns and relationships in the data.

Disadvantages

One potential disadvantage of voting regressors is that they can be computationally expensive and require more memory than a single regressor. This is because every base regressor must be trained, kept in memory, and evaluated at prediction time. Additionally, if the base regressors are highly correlated or have similar biases, the voting regressor may not improve the accuracy of the final prediction.

Ensemble Methods

Ensemble methods are a powerful technique in machine learning that involves combining the predictions of multiple models to improve overall performance. The idea behind ensemble methods is that by combining the predictions of multiple models, we can reduce the impact of individual model errors and improve overall accuracy. There are three main types of ensemble methods: bagging, boosting, and stacking.

Bagging

Bagging, or bootstrap aggregating, is a type of ensemble method where multiple models are trained on bootstrap samples of the training data, that is, random samples drawn with replacement. The idea behind bagging is that models trained on different samples make partly different errors, so combining them reduces variance and overfitting and improves the overall accuracy of the model. The predictions of the individual models are then combined, by voting for classification or averaging for regression, to make a final prediction.
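The resample-train-combine loop can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions: the "model" is a stand-in that simply predicts the mean of its training targets, and the data, function names, and number of models are all hypothetical.

```python
import random
from statistics import mean

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]

def fit_constant_model(sample):
    """Toy base learner: it always predicts the mean of its training targets."""
    return mean(sample)

def bagged_prediction(targets, n_models=25, seed=0):
    """Train one toy model per bootstrap sample and average their predictions."""
    rng = random.Random(seed)
    model_predictions = [
        fit_constant_model(bootstrap_sample(targets, rng)) for _ in range(n_models)
    ]
    return mean(model_predictions), model_predictions

# Hypothetical training targets.
targets = [3.0, 5.0, 4.0, 8.0, 6.0, 7.0]
ensemble_prediction, individual_predictions = bagged_prediction(targets)
```

Each individual prediction varies with the bootstrap sample it was trained on, while the averaged prediction is more stable. A real bagging ensemble would replace the toy learner with, for example, a decision tree.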

One popular example of bagging is the random forest algorithm, which combines multiple decision trees to make a final prediction. Random forests are a powerful and flexible algorithm that can be used for both classification and regression tasks.

Boosting

Boosting is another type of ensemble method where multiple models are trained sequentially, with each subsequent model focusing on the errors of the previous model. The idea behind boosting is that by training multiple models in sequence, we can gradually improve the overall accuracy of the model.

One popular example of boosting is the AdaBoost algorithm, which combines multiple weak classifiers to make a final prediction. AdaBoost is a powerful algorithm that can be used for both classification and regression tasks.
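To make "focusing on the errors of the previous model" concrete, here is a sketch of a single round of the classic discrete AdaBoost update for binary labels in {-1, +1}. The function name and the five-sample example are made up, and the weak learner's predictions are given rather than trained.

```python
import math

def adaboost_round(weights, y_true, y_pred):
    """One discrete-AdaBoost round for labels in {-1, +1}.

    Returns the weak learner's vote weight (alpha) and the renormalised sample weights.
    """
    err = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p) / sum(weights)
    if not 0.0 < err < 0.5:
        raise ValueError("this sketch assumes a weak learner with 0 < error < 0.5")
    alpha = 0.5 * math.log((1.0 - err) / err)
    # Correct samples (t * p == +1) shrink; misclassified samples (t * p == -1) grow.
    updated = [w * math.exp(-alpha * t * p) for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(updated)
    return alpha, [w / total for w in updated]

# Hypothetical round: the weak learner misclassifies only the last sample.
y_true = [1, 1, -1, -1, 1]
y_pred = [1, 1, -1, -1, -1]
weights = [0.2] * 5

alpha, new_weights = adaboost_round(weights, y_true, y_pred)
```

After the update, the single misclassified sample carries half of the total weight, so the next weak learner is pushed to get it right.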

Stacking

Stacking is a type of ensemble method where multiple models are trained and their predictions are used as input to a meta-model, which makes the final prediction. The idea behind stacking is that the meta-model can learn how much to trust each base model, rather than combining them with a fixed rule, which can improve the overall accuracy of the ensemble.
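A minimal sketch of the meta-model step, assuming two base regressors and a linear meta-model fitted by least squares. The held-out predictions and targets below are made up; in practice the meta-model is usually trained on out-of-fold or held-out predictions so that it does not simply reward base models that overfit.

```python
def fit_linear_meta_model(pred_a, pred_b, targets):
    """Least-squares weights (w_a, w_b) so that w_a*pred_a + w_b*pred_b approximates targets."""
    s_aa = sum(a * a for a in pred_a)
    s_ab = sum(a * b for a, b in zip(pred_a, pred_b))
    s_bb = sum(b * b for b in pred_b)
    s_at = sum(a * t for a, t in zip(pred_a, targets))
    s_bt = sum(b * t for b, t in zip(pred_b, targets))
    # Solve the 2x2 normal equations with Cramer's rule.
    det = s_aa * s_bb - s_ab * s_ab
    if abs(det) < 1e-12:
        raise ValueError("base predictions are collinear; meta-weights are not unique")
    w_a = (s_at * s_bb - s_ab * s_bt) / det
    w_b = (s_aa * s_bt - s_ab * s_at) / det
    return w_a, w_b

def stacked_predict(w_a, w_b, a, b):
    """Final prediction: the meta-model applied to the two base predictions."""
    return w_a * a + w_b * b

# Hypothetical held-out predictions from two base regressors, plus the true targets.
pred_a = [1.0, 2.0, 3.0, 4.0]
pred_b = [2.0, 1.0, 4.0, 3.0]
targets = [1.75, 1.25, 3.75, 3.25]

w_a, w_b = fit_linear_meta_model(pred_a, pred_b, targets)
```

In this constructed example the targets were generated as 0.25 times the first model's prediction plus 0.75 times the second's, and the meta-model recovers those weights from the data.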

Stacking is sometimes confused with voting, but the two differ: a voting ensemble combines predictions with a fixed rule, such as a majority vote or an average, whereas stacking trains a meta-model to learn how to combine them. Like voting, stacking can be used for both classification and regression tasks.

In summary, ensemble methods are a powerful technique in machine learning that can be used to improve the overall accuracy of a model. Bagging, boosting, and stacking are three popular types of ensemble methods that can be used for both classification and regression tasks. By combining the predictions of multiple models, we can reduce overfitting and improve the overall accuracy of the model.

Applications

Voting classifiers and regressors are widely used in machine learning applications to harness collective wisdom. The ensemble technique relies on the idea that aggregation of many classifiers and regressors will lead to a better prediction. In this section, we will explore some of the applications of voting classifiers and regressors.

Classification

Voting classifiers are commonly used in classification problems. The idea behind a voting classifier is to combine the predictions of multiple classifiers to improve the accuracy of the final prediction. The classifiers can be trained on the same dataset using different algorithms or on different subsets of the data. The most common voting schemes are:

- Majority Voting: The class with the most votes is selected as the final prediction.

- Weighted Voting: Each classifier is assigned a weight and the class with the highest weighted votes is selected as the final prediction.

- Soft Voting: The probabilities of each class are averaged across all classifiers and the class with the highest probability is selected as the final prediction.
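Weighted voting can overturn a plain majority. The sketch below uses made-up labels and weights, and the function name is hypothetical; in practice the weights are often chosen from each classifier's validation performance.

```python
from collections import defaultdict

def weighted_vote(labels, weights):
    """Sum the weights behind each label; return (winning label, per-label totals)."""
    totals = defaultdict(float)
    for label, weight in zip(labels, weights):
        totals[label] += weight
    return max(totals, key=totals.get), dict(totals)

# Hypothetical votes from three classifiers, the first trusted most.
labels = ["spam", "ham", "ham"]
weights = [0.6, 0.25, 0.15]

winner, totals = weighted_vote(labels, weights)
```

A simple majority vote would pick "ham" (two votes to one), but the heavily weighted first classifier makes "spam" the weighted winner.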

Voting classifiers have been used in a variety of applications such as image classification, sentiment analysis, and fraud detection.

Regression

Voting regressors are used in regression problems where the goal is to predict a continuous value. The idea behind a voting regressor is similar to that of a voting classifier: the predictions of multiple regressors are combined to improve the accuracy of the final prediction. The most common ways to combine them are:

- Mean Voting: The mean of the predicted values is selected as the final prediction.

- Weighted Voting: Each regressor is assigned a weight and the weighted average of the predicted values is selected as the final prediction.

Voting regressors have been used in a variety of applications such as stock price prediction, weather forecasting, and traffic prediction.

Anomaly Detection

Voting classifiers can also be used for anomaly detection. Anomaly detection is the process of identifying data points that are significantly different from the rest of the data. Voting classifiers can be trained on a labeled dataset that contains both normal and anomalous data points. The classifiers can then be used to predict whether a new data point is normal or anomalous. The most common voting schemes for anomaly detection are:

- Majority Voting: If the majority of the classifiers predict that the data point is anomalous, it is classified as anomalous.

- Weighted Voting: Each classifier is assigned a weight and the weighted votes are used to classify the data point.

Voting classifiers have been used in a variety of anomaly detection applications such as credit card fraud detection, network intrusion detection, and medical diagnosis.

In conclusion, voting classifiers and regressors harness the collective wisdom of multiple individual models, enabling improved accuracy and robustness in both classification and regression tasks. By understanding the concepts, advantages, and practical considerations discussed in this guide, you can effectively leverage voting-based ensembles to enhance your machine learning models.

Frequently Asked Questions

What is stacking in ensemble-based classifiers?

Stacking is an ensemble-based method that involves training multiple base classifiers on a dataset and then using a meta-classifier to combine their predictions. The meta-classifier is trained on the predictions made by the base classifiers, and it learns to make the final prediction based on the collective output of the base classifiers.

How does a meta-classifier work in stacking?

A meta-classifier is a classifier that is trained on the predictions made by the base classifiers in an ensemble. It learns to combine the predictions of the base classifiers to make the final prediction. The meta-classifier can be any classifier, including decision trees, logistic regression, or neural networks.

What are some techniques for hyperparameter tuning in stacking classifiers?

Some techniques for hyperparameter tuning in stacking classifiers include grid search, random search, and Bayesian optimization. Grid search involves defining a grid of hyperparameters and testing each combination of hyperparameters. Random search involves randomly sampling hyperparameters from a distribution and testing them. Bayesian optimization involves using a probabilistic model to predict the performance of different hyperparameters and selecting the best ones.
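Grid search and random search can be sketched in plain Python as follows. Everything here is illustrative: `toy_score` stands in for a cross-validated score of a stacking ensemble, and the parameter names `meta_C` and `n_trees` are hypothetical. Bayesian optimization is omitted because it needs a surrogate model.

```python
import itertools
import random

def grid_search(score, grid):
    """Evaluate every combination in the grid; return (best_params, best_score)."""
    names = list(grid)
    candidates = (
        dict(zip(names, values)) for values in itertools.product(*grid.values())
    )
    best = max(candidates, key=lambda params: score(**params))
    return best, score(**best)

def random_search(score, grid, n_iter, seed=0):
    """Evaluate n_iter randomly sampled combinations; return (best_params, best_score)."""
    rng = random.Random(seed)
    candidates = [
        {name: rng.choice(values) for name, values in grid.items()}
        for _ in range(n_iter)
    ]
    best = max(candidates, key=lambda params: score(**params))
    return best, score(**best)

def toy_score(meta_C, n_trees):
    """Hypothetical stand-in for a validation score; it peaks at meta_C=1.0, n_trees=100."""
    return -((meta_C - 1.0) ** 2) - ((n_trees - 100) / 100) ** 2

grid = {"meta_C": [0.1, 1.0, 10.0], "n_trees": [50, 100, 200]}

grid_best, grid_score = grid_search(toy_score, grid)
rand_best, rand_score = random_search(toy_score, grid, n_iter=4)
```

Grid search is exhaustive, so it always finds the best point on the grid at the cost of evaluating all nine combinations here. Random search evaluates only four and may or may not hit the best one.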

Can you explain the concept of collective wisdom in ensemble methods?

The concept of collective wisdom in ensemble methods refers to the idea that multiple models can outperform a single model by combining their predictions. Ensemble methods use multiple models to make predictions, and they combine the predictions using various techniques, such as averaging, voting, or stacking. The collective output of the models can be more accurate and robust than the output of a single model.

How do you combine two classifiers in Python?

You can combine two classifiers in Python using various techniques, such as averaging, voting, or stacking. Averaging involves taking the average of the class probabilities predicted by the two classifiers. Voting involves taking the majority vote of the predicted labels; with only two classifiers a majority vote ties whenever they disagree, so averaging probabilities or adding a third classifier is usually the better choice. Stacking involves training a meta-classifier on the predictions made by the two classifiers.
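One way to do this is with scikit-learn's `VotingClassifier`. The sketch below assumes scikit-learn is installed; the synthetic dataset, the choice of logistic regression and a decision tree, and the hyperparameters are arbitrary choices for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data, used purely for illustration.
X, y = make_classification(n_samples=300, n_features=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Soft voting averages the two classifiers' predicted class probabilities.
ensemble = VotingClassifier(
    estimators=[
        ("logreg", LogisticRegression(max_iter=1000)),
        ("tree", DecisionTreeClassifier(max_depth=4, random_state=0)),
    ],
    voting="soft",
)
ensemble.fit(X_train, y_train)

predictions = ensemble.predict(X_test)
probabilities = ensemble.predict_proba(X_test)
```

Soft voting is used here because, with only two classifiers, hard voting would produce a tie whenever they disagree.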

What is the voting method in ensemble learning?

The voting method in ensemble learning involves combining the predictions made by multiple models using a voting rule. For classification, the voting rule can be either hard or soft. In hard voting, the final prediction is based on the majority vote of the models. In soft voting, the final prediction is based on the average of the predicted probabilities of the models. For regression there are no votes as such; the final prediction is the average, optionally weighted, of the models' predicted values.