A confusion matrix is a powerful tool used to evaluate the performance of classification models. It provides a clear and concise summary of how well the model is performing, allowing you to identify areas for improvement. The matrix is a tabular format that shows predicted values against their actual values. This allows you to understand whether the model is making correct predictions or not.

Confusion matrices can be used to calculate a variety of performance metrics for classification models. These include accuracy, precision, recall, and F1 score, among others. Accuracy is the most common metric and is calculated by dividing the number of true positive and true negative cases by the total number of cases. However, accuracy alone can be misleading if the classes contain unequal numbers of observations or if the dataset has more than two classes, because a single number hides which classes the model gets wrong. This is where confusion matrices come in handy, as they allow you to see the performance of the model for each class separately.

What is a Confusion Matrix

Definition

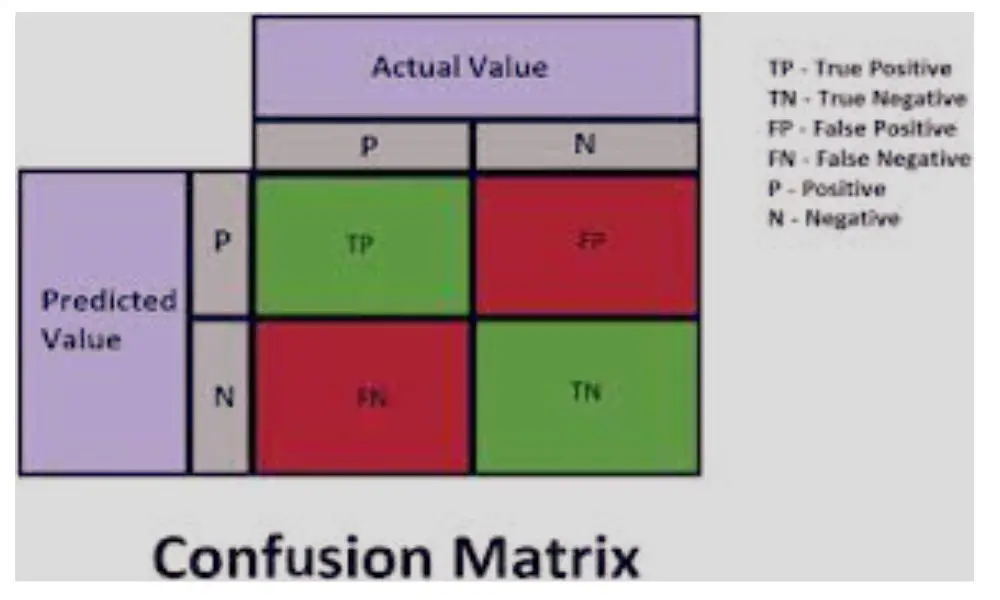

A confusion matrix is a table that summarizes the performance of a classification model by comparing the predicted and actual values of a test dataset. It is a useful tool for evaluating the accuracy of a model’s predictions and identifying where it may be making errors. The matrix provides a detailed breakdown of the number of true positives, true negatives, false positives, and false negatives.

Components

A confusion matrix consists of four components:

- True Positives (TP): the number of correctly predicted positive values.

- True Negatives (TN): the number of correctly predicted negative values.

- False Positives (FP): the number of incorrectly predicted positive values.

- False Negatives (FN): the number of incorrectly predicted negative values.

These components can be used to calculate various performance metrics, such as accuracy, precision, recall, and F1 score.

The confusion matrix is often presented in a table format, with the predicted values along the top row and the actual values along the left column. An example of a confusion matrix is shown below:

| Actual / Predicted | Positive | Negative |
|---|---|---|
| Positive | TP | FN |
| Negative | FP | TN |

As a concrete example, suppose the model correctly predicted 80 positive cases (TP) and 20 negative cases (TN), but incorrectly predicted 10 negative cases as positive (FP) and 5 positive cases as negative (FN). These four counts fill the corresponding cells of the table above.
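To make the link between the four counts and the metrics concrete, here is a minimal sketch that plugs in the counts from the example above (80 TP, 20 TN, 10 FP, 5 FN). The variable names are illustrative only.

```python
# Counts taken from the example above.
tp, tn, fp, fn = 80, 20, 10, 5

total = tp + tn + fp + fn

# Share of all predictions that were correct.
accuracy = (tp + tn) / total
# Share of positive predictions that were actually positive.
precision = tp / (tp + fp)
# Share of actual positives that the model found.
recall = tp / (tp + fn)
# Harmonic mean of precision and recall.
f1 = 2 * precision * recall / (precision + recall)

print(f"accuracy:  {accuracy:.3f}")   # accuracy:  0.870
print(f"precision: {precision:.3f}")  # precision: 0.889
print(f"recall:    {recall:.3f}")     # recall:    0.941
print(f"f1:        {f1:.3f}")         # f1:        0.914
```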

Overall, a confusion matrix provides a useful way to visualize the performance of a classification model and identify areas for improvement.

Why is a Confusion Matrix Important?

A confusion matrix is an essential tool in evaluating the performance of a classification model. It provides a clear and concise representation of the model’s performance by summarizing the number of correct and incorrect predictions in a tabular format.

Evaluating Model Performance

The confusion matrix allows us to calculate various metrics such as accuracy, precision, recall, and F1-score. These metrics help us to evaluate the model’s performance and identify areas that require improvement. For instance, accuracy can be a misleading metric when dealing with imbalanced datasets, where the number of observations in one class is significantly higher than the other. In such cases, precision, recall, and F1-score provide a more accurate representation of the model’s performance.

Identifying Errors

The confusion matrix allows us to identify the types of errors the model is making. For instance, false positives occur when the model predicts a positive outcome when the actual outcome is negative. False negatives occur when the model predicts a negative outcome when the actual outcome is positive. Identifying these errors can help us to fine-tune the model and improve its performance.

In conclusion, a confusion matrix is an important tool in evaluating the performance of a classification model. It provides a clear and concise representation of the model’s performance and allows us to identify areas that require improvement. By using the confusion matrix, we can fine-tune the model and improve its accuracy, precision, recall, and F1-score.

How to Read a Confusion Matrix

A confusion matrix is a table that summarizes the performance of a classification model by comparing the predicted labels to the true labels. It is a powerful tool that provides insights into the types of errors a model makes. In this section, we will discuss how to read a confusion matrix and interpret its results.

True Positives and True Negatives

True positives (TP) are the number of positive instances that are correctly classified by the model. True negatives (TN) are the number of negative instances that are correctly classified by the model. In other words, TP and TN are the instances that the model got right.

False Positives and False Negatives

False positives (FP) are the number of negative instances that are incorrectly classified as positive by the model. False negatives (FN) are the number of positive instances that are incorrectly classified as negative by the model. In other words, FP and FN are the instances that the model got wrong.
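The four definitions above can be sketched as a simple counting loop. This is an illustration under stated assumptions rather than a reference implementation: the function name `confusion_counts`, the label encoding (1 for positive, 0 for negative), and the sample labels are all made up for the example.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, tn, fp, fn) for a binary classification problem."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, tn, fp, fn


# Hypothetical labels, for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(tp, tn, fp, fn)  # 3 3 1 1
```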

A confusion matrix can be presented in different ways, but the most common format is a 2×2 table with the predicted labels on one axis and the true labels on the other axis. Here is an example of a confusion matrix for a binary classification problem:

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | 100 | 20 |
| Actual Negative | 10 | 500 |

From this confusion matrix, we can see that the model correctly classified 100 positive instances and 500 negative instances, which are the true positives and true negatives, respectively. The model incorrectly classified 20 positive instances as negative (false negatives) and 10 negative instances as positive (false positives).

In conclusion, reading a confusion matrix can provide valuable insights into the performance of a classification model. By understanding the true positives, true negatives, false positives, and false negatives, we can evaluate the strengths and weaknesses of our model and make informed decisions about how to improve it.

Interpreting a Confusion Matrix

When it comes to evaluating the performance of a classification model, a confusion matrix is a powerful tool. It provides a clear and concise way to visualize how well a model is performing and where it is making errors. In this section, we will discuss how to interpret a confusion matrix and the key metrics derived from it.

Accuracy

Accuracy is a commonly used metric in classification problems. It measures the proportion of correctly classified instances out of the total number of instances. In other words, it tells us how often the model is correct. However, accuracy alone can be misleading, especially when the classes are imbalanced. For instance, if we have 95% of the instances in one class and only 5% in the other, a model that always predicts the majority class will have a high accuracy, but it will not be useful.
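The imbalance problem is easy to reproduce. The sketch below assumes a made-up dataset of 1,000 instances with the 95% / 5% split described above and a "model" that always predicts the majority class. It then compares accuracy with recall on the minority class.

```python
# Hypothetical data: 950 negatives (0) and 50 positives (1).
y_true = [0] * 950 + [1] * 50

# A "model" that always predicts the majority (negative) class.
y_pred = [0] * len(y_true)

correct = sum(a == p for a, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# Recall on the positive class: how many actual positives were found.
tp = sum(a == 1 and p == 1 for a, p in zip(y_true, y_pred))
fn = sum(a == 1 and p == 0 for a, p in zip(y_true, y_pred))
recall = tp / (tp + fn)

print(accuracy)  # 0.95
print(recall)    # 0.0
```

The accuracy looks strong, yet the model never identifies a single positive instance, which is exactly what the confusion matrix would reveal.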

Precision

Precision is a metric that measures the proportion of true positives (TP) out of all positive predictions (TP + false positives (FP)). It tells us how often the model is correct when it predicts a positive class. A high precision means that the model is good at avoiding false positives.

Recall

Recall is a metric that measures the proportion of true positives (TP) out of all actual positive instances (TP + false negatives (FN)). It tells us how often the model predicts a positive class when it should. A high recall means that the model is good at avoiding false negatives.

F1 Score

The F1 score is the harmonic mean of precision and recall. It provides a balance between the two metrics: because the harmonic mean is pulled toward the smaller value, the F1 score is high only when both precision and recall are high. It is a useful metric when we want to optimize both precision and recall simultaneously.
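A short sketch shows why the harmonic mean is used instead of a simple average. The helper `f1_score` below is a hypothetical name written for this example, and returning 0.0 when both inputs are zero is a convention assumed here to avoid division by zero.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# A model with perfect precision but very poor recall.
precision, recall = 1.0, 0.1

arithmetic_mean = (precision + recall) / 2
f1 = f1_score(precision, recall)

print(f"{arithmetic_mean:.2f}")  # 0.55
print(f"{f1:.2f}")               # 0.18
```

The arithmetic mean suggests a mediocre model, while the F1 score stays close to the weaker of the two metrics.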

In conclusion, a confusion matrix is a valuable tool for evaluating the performance of a classification model. By interpreting the key metrics derived from it, we can gain insights into how well the model is performing and where it needs improvement.

Frequently Asked Questions

How can the confusion matrix be used to evaluate the performance of a classification model?

A confusion matrix is a table that summarizes the performance of a classification model by comparing the predicted and actual class labels of a set of test data. It is a powerful tool that can be used to evaluate the performance of a classification model and identify areas where it needs improvement.

What are the performance metrics that can be derived from a confusion matrix?

The most common performance metrics derived from a confusion matrix are accuracy, precision, recall, and F1 score. Accuracy measures the overall correctness of the classification model, while precision measures the proportion of positive predictions that are actually positive. Recall measures the proportion of actual positive instances that are correctly predicted, and F1 score is the harmonic mean of precision and recall.

What is the significance of a confusion matrix in visualizing model performance?

A confusion matrix provides a visual representation of the performance of a classification model. It allows us to identify the strengths and weaknesses of the model, and to make informed decisions about how to improve its performance.

How can we visualize a confusion matrix in Python using Scikit-learn?

Scikit-learn is a popular Python library for machine learning. Its confusion_matrix() function computes a confusion matrix: it takes the actual and predicted class labels as inputs and returns the matrix as a NumPy array, with rows corresponding to actual classes and columns to predicted classes. To draw the matrix as a plot, the library also provides the ConfusionMatrixDisplay class, which relies on Matplotlib.
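As a minimal sketch, assuming scikit-learn is installed and using made-up labels (1 for positive, 0 for negative), the function can be called like this:

```python
from sklearn.metrics import confusion_matrix

# Hypothetical labels, for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]

# Rows are actual classes, columns are predicted classes,
# with labels in sorted order (0 first, then 1).
cm = confusion_matrix(y_true, y_pred)
print(cm)

# For a binary problem with labels 0 and 1, flattening the
# matrix yields the four counts in this order.
tn, fp, fn, tp = cm.ravel()
print(tn, fp, fn, tp)  # 2 2 1 3
```

Note that with 0/1 labels the negative class comes first in scikit-learn's output, so the layout differs from tables that list the positive class first.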

What is the accuracy of a multi-class classification model and how can it be calculated using a confusion matrix?

The accuracy of a multi-class classification model is the proportion of correctly classified instances out of all the instances in the test data. It can be calculated using a confusion matrix by summing the diagonal elements (true positives) and dividing by the total number of instances.
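The diagonal-sum calculation can be sketched with a plain nested list. The 3-class matrix below is invented for illustration, with rows as actual classes and columns as predicted classes.

```python
# Hypothetical 3-class confusion matrix:
# rows are actual classes, columns are predicted classes.
cm = [
    [50, 2, 3],
    [4, 40, 6],
    [1, 5, 39],
]

# Diagonal cells hold the correctly classified instances of each class.
correct = sum(cm[i][i] for i in range(len(cm)))
total = sum(sum(row) for row in cm)

accuracy = correct / total
print(f"{accuracy:.3f}")  # 0.860
```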

What is the difference between sensitivity and specificity in multi-class classification and how can they be calculated using a confusion matrix?

Sensitivity and specificity are two performance metrics that are commonly used in binary classification. In multi-class classification, they can be calculated by treating each class in turn as the positive class and all other classes together as the negative class (a one-vs-rest view). Sensitivity measures the proportion of actual positive instances that are correctly predicted, while specificity measures the proportion of actual negative instances that are correctly predicted. Using the confusion matrix, sensitivity for a class is TP / (TP + FN), the diagonal cell divided by the total of that class's row of actual instances, and specificity is TN / (TN + FP), where TN counts every instance that neither belongs to the class nor was predicted as it.
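A minimal sketch of the one-vs-rest calculation follows, taking sensitivity as TP / (TP + FN) and specificity as TN / (TN + FP). The function name `one_vs_rest` and the 3-class matrix are assumptions made for this example, with rows as actual classes and columns as predicted classes.

```python
def one_vs_rest(cm, k):
    """Return (sensitivity, specificity) for class index k."""
    n = len(cm)
    total = sum(sum(row) for row in cm)

    tp = cm[k][k]
    # Actual class k, predicted as some other class.
    fn = sum(cm[k]) - tp
    # Predicted as class k, actually some other class.
    fp = sum(cm[i][k] for i in range(n)) - tp
    # Everything that is neither actual k nor predicted k.
    tn = total - tp - fn - fp

    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical 3-class confusion matrix, for illustration only.
cm = [
    [50, 2, 3],
    [4, 40, 6],
    [1, 5, 39],
]

for k in range(len(cm)):
    sens, spec = one_vs_rest(cm, k)
    print(f"class {k}: sensitivity={sens:.3f}, specificity={spec:.3f}")
```

This sketch assumes every class has at least one actual instance and at least one instance outside it; otherwise the divisions would need a guard.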