
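R-squared score is a statistical measure used to determine the goodness of fit of a regression model. It is a widely used metric that helps researchers and data scientists evaluate how well their models describe the data. For a least squares model with an intercept, evaluated on the data it was fitted to, the R-squared score ranges from 0 to 1, with higher values indicating a better fit between the model and the data. On new data, or for models without an intercept, it can be negative.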

In simple terms, the R-squared score measures the proportion of variability in the dependent variable that is explained by the independent variables in the model. This means that the closer the R-squared score is to 1, the more of the observed variation in the dependent variable the model accounts for. However, a high R-squared score does not necessarily mean that the model is perfect, as other factors that affect the dependent variable may not be accounted for in the model.

Evaluating the R-squared score is an essential step in regression analysis, as it helps researchers to determine whether their model is suitable for the data at hand. In this article, we will explore the importance of R-squared score in evaluating regression model fit, and provide insights into how to interpret and use this measure to improve the accuracy of your models.

What is R-squared Score?

Definition

R-squared score, also known as the coefficient of determination, is a statistical measure that evaluates the goodness of fit of a regression model. For a model fitted by least squares with an intercept, it is a value between 0 and 1 that represents the proportion of variance in the dependent variable that is explained by the independent variable(s) in the model.

In other words, R-squared score measures how well the regression model fits the observed data. A higher R-squared score indicates a better fit of the model to the data, while a lower R-squared score indicates a weaker fit.

Interpretation

The interpretation of R-squared score depends on the context of the regression model. In general, a high R-squared score does not necessarily mean that the model is perfect or that it can predict future outcomes accurately. It only means that the model explains a large proportion of the variance in the dependent variable.

When interpreting R-squared score, it is important to consider other factors such as the sample size, the number of independent variables, and the nature of the data. For example, a high R-squared score may be achieved by overfitting the model to the data, which can lead to poor performance on new data.

In summary, R-squared score is a useful metric for evaluating the fit of a regression model to the data. However, it should be used in conjunction with other measures and careful consideration of the context and nature of the data.

How to Calculate R-squared Score?

Formula

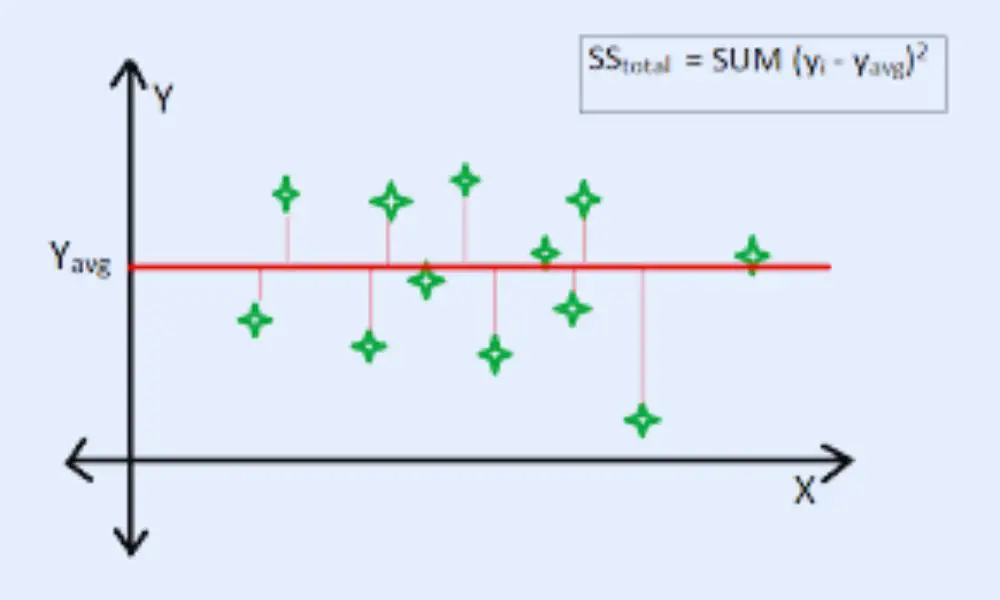

The R-squared score is a statistical measure that represents the proportion of the variance in the dependent variable that is predictable from the independent variable(s). It is calculated as the ratio of the explained variance to the total variance. The formula for calculating R-squared is:

R-squared = 1 - (SSres / SStot)

Where:

- SSres: Sum of squared residuals (also known as the sum of squared errors)

- SStot: Total sum of squares
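The formula above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a reference implementation; the function name `r_squared` and its argument names are chosen here for the example.

```python
def r_squared(y_true, y_pred):
    """Return 1 - SSres / SStot for two equal-length sequences of numbers."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    mean_y = sum(y_true) / len(y_true)

    # SSres: squared differences between observed and predicted values.
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))

    # SStot: squared differences between observed values and their mean.
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)

    if ss_tot == 0:
        raise ValueError("R-squared is undefined when y_true is constant")

    return 1 - ss_res / ss_tot
```

Predictions that match the observations exactly give a score of 1, and predictions that are all equal to the mean of the observations give a score of 0.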

Example

Let’s take an example to understand how to calculate the R-squared score. Suppose we have a dataset with the following observations:

| X | Y |
|---|---|
| 1 | 2 |
| 2 | 4 |
| 3 | 6 |
| 4 | 8 |
| 5 | 10 |
| 6 | 12 |
| 7 | 14 |
| 8 | 16 |

We want to fit a linear regression model to predict Y based on X. The regression equation is:

Y = β0 + β1*X

where β0 is the intercept and β1 is the slope of the line.

We can use the least squares method to estimate the values of β0 and β1 that minimize the sum of squared residuals. The regression line for this example is:

Y = 2*X

The R-squared score for this model can be calculated as follows:

SSres = Σ(yi - ŷi)^2 = Σ(yi - 2*xi)^2 = 0

SStot = Σ(yi - ȳ)^2 = Σ(yi - 9)^2 = 168

R-squared = 1 - (SSres / SStot) = 1 - (0 / 168) = 1

The R-squared score of 1 indicates that the regression model explains 100% of the variance in the dependent variable.
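The worked example can be checked numerically. The sketch below uses the closed-form least squares estimates for a single predictor and then applies the R-squared formula. The variable names are illustrative only.

```python
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [2, 4, 6, 8, 10, 12, 14, 16]

n = len(xs)
mean_x = sum(xs) / n
mean_y = sum(ys) / n

# Closed-form least squares estimates for one predictor.
sxx = sum((x - mean_x) ** 2 for x in xs)
sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
slope = sxy / sxx
intercept = mean_y - slope * mean_x

# Apply R-squared = 1 - SSres / SStot to the fitted line.
preds = [intercept + slope * x for x in xs]
ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
ss_tot = sum((y - mean_y) ** 2 for y in ys)
r2 = 1 - ss_res / ss_tot

print(f"slope={slope}, intercept={intercept}")
print(f"mean_y={mean_y}, SSres={ss_res}, SStot={ss_tot}, R-squared={r2}")
```

Because every point lies exactly on the line, the residuals are all zero and the score does not depend on the size of SStot.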

Limitations of R-squared Score

When evaluating regression model fit, the R-squared score is a commonly used metric. However, it is important to understand the limitations of this score in order to accurately interpret model performance.

Outliers

One limitation of the R-squared score is that it is sensitive to outliers in the data. Because it is built from squared deviations, a single extreme point can have a disproportionate impact on the score. A high-leverage point that happens to lie on the trend can inflate the score, while a point far from the trend can depress it. Therefore, it is important to identify and address outliers in the data before relying solely on the R-squared score to evaluate model fit.
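To make the sensitivity concrete, the sketch below fits a simple linear regression to a small made-up dataset, then refits after adding one extra point. The data values and the helper name `fit_r_squared` are invented for illustration.

```python
def fit_r_squared(xs, ys):
    """Fit y = b0 + b1*x by least squares and return the in-sample R-squared."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Noisy but roughly linear toy data (hypothetical values).
xs = [1, 2, 3, 4, 5, 6]
ys = [2, 1, 4, 3, 6, 5]

base = fit_r_squared(xs, ys)
# One far-away point that lies on the general trend.
on_trend = fit_r_squared(xs + [20], ys + [20])
# One point that sits far below the general trend.
off_trend = fit_r_squared(xs + [7], ys + [-10])

print(f"base={base:.3f}, on_trend={on_trend:.3f}, off_trend={off_trend:.3f}")
```

In this toy setup the single on-trend point raises the score and the single off-trend point lowers it, even though the other six observations are unchanged.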

Multicollinearity

Another limitation of the R-squared score is that it does not reveal multicollinearity, or the presence of high correlations between predictor variables. Multicollinearity does not by itself change the R-squared score, but a model can have a high score while its individual coefficient estimates are unstable, because the score cannot distinguish between the unique contributions of each predictor variable. Therefore, it is important to assess multicollinearity separately and to consider complementary metrics, such as adjusted R-squared, when evaluating model fit.

Overall, while the R-squared score can provide valuable insights into the performance of a regression model, it should be used in conjunction with other metrics and considerations to ensure a comprehensive evaluation of model fit.

Alternatives to R-squared Score

When evaluating the fit of a regression model, R-squared is a commonly used metric. However, there are other metrics that can be used to evaluate the performance of a regression model. In this section, we will discuss two alternatives to R-squared: Adjusted R-squared and Mean Squared Error.

Adjusted R-squared Score

One limitation of R-squared is that, for a least squares model evaluated on its training data, it never decreases as more predictors are added to the model, even if those predictors do not meaningfully improve the model's fit. Adjusted R-squared addresses this issue by penalizing models that include unnecessary predictors. It takes into account the number of predictors in the model relative to the sample size and adjusts the R-squared value accordingly.

The formula for Adjusted R-squared is as follows:

Adjusted R-squared = 1 - [(1 - R-squared) * (n - 1) / (n - k - 1)]

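Where n is the sample size and k is the number of predictors in the model. Adjusted R-squared is never larger than R-squared, and it can be negative when the model explains very little variance relative to the number of predictors. Higher values indicate a better fit.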
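As a minimal sketch, the adjustment can be written as a small helper that takes an already computed R-squared value. The function name `adjusted_r_squared` and the sample numbers below are illustrative assumptions.

```python
def adjusted_r_squared(r2, n, k):
    """Adjust an R-squared value for sample size n and number of predictors k."""
    if n - k - 1 <= 0:
        raise ValueError("adjusted R-squared requires n > k + 1")
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Same raw R-squared and sample size, different numbers of predictors.
few_predictors = adjusted_r_squared(0.80, n=50, k=2)
many_predictors = adjusted_r_squared(0.80, n=50, k=10)

print(f"k=2:  {few_predictors:.4f}")
print(f"k=10: {many_predictors:.4f}")
```

With the raw score held fixed, the model that uses more predictors receives the lower adjusted score, which is the penalty described above.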

Mean Squared Error

Mean Squared Error (MSE) is another metric that can be used to evaluate the performance of a regression model. MSE measures the average squared difference between the predicted and actual values of the outcome variable. A smaller MSE indicates a better fit.

The formula for MSE is as follows:

MSE = (1/n) * Σ(y - ŷ)^2

Where y is the actual value of the outcome variable, ŷ is the predicted value of the outcome variable, and n is the sample size.
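The formula translates directly into code. The following is a small sketch in plain Python; the function name `mean_squared_error` is chosen for this example and the input values are made up.

```python
def mean_squared_error(y_true, y_pred):
    """Return the average squared difference between actual and predicted values."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    n = len(y_true)
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / n


actual = [1, 2, 3]
predicted = [2, 2, 2]

mse = mean_squared_error(actual, predicted)
print(f"MSE = {mse:.4f}")
```

Note that the result is in the squared units of the outcome variable, so an MSE computed on prices in dollars is measured in squared dollars.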

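One advantage of MSE is that it is simple to compute and directly reflects the size of the prediction errors. It is expressed in the squared units of the outcome variable, so it is useful for comparing models on the same dataset but harder to compare across datasets with different scales. Like R-squared, the MSE computed on the training data does not get worse when predictors are added to a least squares model, so it is most informative when evaluated on held-out data.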

In conclusion, while R-squared is a commonly used metric for evaluating the fit of a regression model, it is not the only metric available. Adjusted R-squared and Mean Squared Error are two alternatives that can provide additional insight into the performance of a model.

Frequently Asked Questions

What is a good R-squared value for a regression model?

A good R-squared value for a regression model depends on the specific context of the problem being solved. Generally, a higher R-squared value indicates a better fit of the model to the data. However, there is no fixed threshold for what constitutes a good R-squared value. It is important to consider the nature of the data, the complexity of the model, and the goals of the analysis when interpreting R-squared values.

How do you interpret R-squared in regression?

R-squared is a statistical measure that evaluates the scatter of the data points around the fitted regression line. It is also known as the coefficient of determination. For a least squares model with an intercept, in-sample R-squared values range from 0 to 1, with higher values indicating a better fit of the model to the data. However, R-squared does not tell you whether the coefficient estimates are unbiased or how large the prediction errors are in the units of the outcome.

Is R-squared a good measure of fit?

R-squared is a widely used measure of fit in regression analysis. However, it has some limitations. R-squared does not provide information about the accuracy or precision of the estimates or predictions. Additionally, R-squared is sensitive to the number of variables in the model and can be biased by outliers or influential observations.

How do I assess the goodness-of-fit using R-squared?

R-squared already compares your model to a null model. The null model includes only the intercept term and no predictor variables, so it predicts the mean of the response for every observation and has an R-squared value of 0 by construction. The R-squared value of your model is therefore itself an indication of how much of the variability in the response variable is explained by the predictor variables beyond that baseline.
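The role of the null model can be verified with a short sketch. The response values below are made up for illustration. The intercept-only model predicts their mean for every observation.

```python
ys = [3.0, 5.0, 4.0, 8.0]  # hypothetical response values

mean_y = sum(ys) / len(ys)

# The intercept-only (null) model predicts the mean everywhere.
null_preds = [mean_y] * len(ys)

ss_res_null = sum((y - p) ** 2 for y, p in zip(ys, null_preds))
ss_tot = sum((y - mean_y) ** 2 for y in ys)
r2_null = 1 - ss_res_null / ss_tot

print(f"R-squared of the null model: {r2_null}")
```

Because the null model's residual sum of squares equals the total sum of squares, its score is 0, and any model's R-squared can be read as its improvement over this baseline.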

What is the formula for calculating R-squared in regression?

The formula for calculating R-squared in regression is:

R-squared = 1 - (SSres / SStot)

where SSres is the sum of squares of the residuals (the squared differences between the observed values and the predicted values, added up) and SStot is the total sum of squares (the squared differences between the observed values and the mean of the observed values, added up).

How do you determine if a regression model is a good fit in R?

To determine if a regression model is a good fit in R, you can use various diagnostic plots and statistical tests. Some common diagnostic plots include residual plots, normal probability plots, and scatter plots of the predicted values versus the observed values. Some common statistical tests include the F-test for overall significance, the t-test for individual predictor variables, and the Durbin-Watson test for autocorrelation of the residuals.