Stacking models is a technique for building ensemble predictions in machine learning. It combines the predictions of multiple well-performing models to improve the accuracy and robustness of the final prediction. Stacking is popular because a carefully built stack often generalizes better than any of its individual models.

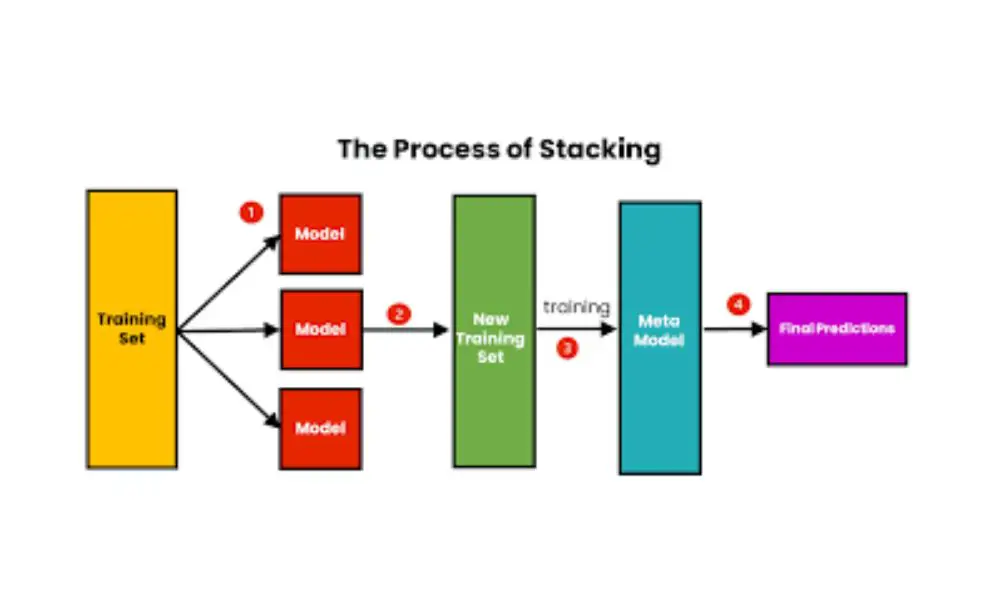

The basic idea behind stacking is to train several different models on the same data and then use their predictions as the input features of a final model, which makes the actual prediction. The key to making this work is to ensure that the base models are diverse and that they capture different aspects of the data. This can be achieved by using different algorithms, different hyperparameters, or different subsets of the data. Because the base models make different kinds of errors, the final model can learn when to trust each one and so make more accurate predictions.

What is Stacking Models?

Definition

Stacking models, also known as stacked generalization or stacking ensembles, is an ensemble machine learning algorithm that involves combining the predictions of multiple models to create a more accurate prediction. In stacking, a meta-model is trained on the predictions of base models, which are themselves trained on the original training data. The meta-model is then used to make the final prediction.

The base models can be any machine learning algorithm, such as decision trees, support vector machines, or neural networks. The meta-model can also be any algorithm, but it is typically a simple linear model, such as logistic regression or linear regression.

Benefits

Stacking models has several benefits over using a single model. First, it can improve the accuracy of predictions by combining the strengths of multiple models. Second, it can reduce the risk of overfitting, provided the meta-model is trained on predictions the base models made for data they were not fitted on (held-out or out-of-fold predictions). Training the meta-model on in-sample predictions tends to have the opposite effect. Third, it can handle complex relationships between variables that may be difficult for a single model to capture.

Stacking can also be used to create a more interpretable model. By using a linear meta-model, the importance of each base model can be quantified, allowing for a better understanding of the contribution of each model to the final prediction.
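As a rough illustration of reading base-model importance from a linear meta-model, the sketch below fits scikit-learn's StackingClassifier with a logistic regression meta-model and prints one coefficient per base model. The synthetic dataset, the three base models, and names such as `weights_by_model` are assumptions chosen for the example, not part of the original discussion.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data (illustrative only).
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Hypothetical choice of base models; any classifiers could be used.
base_models = [
    ("tree", DecisionTreeClassifier(max_depth=3, random_state=0)),
    ("knn", KNeighborsClassifier(n_neighbors=5)),
    ("forest", RandomForestClassifier(n_estimators=25, random_state=0)),
]

stack = StackingClassifier(
    estimators=base_models,
    final_estimator=LogisticRegression(),  # linear meta-model
    cv=5,
)
stack.fit(X, y)

# For a binary problem, each base model contributes one column of
# positive-class probabilities, so there is one coefficient per base model.
coefficients = stack.final_estimator_.coef_[0]
weights_by_model = dict(zip([name for name, _ in base_models], coefficients))

for name, weight in weights_by_model.items():
    print(f"{name}: {weight:+.3f}")
```

These coefficients should be read with some care: base-model predictions are usually correlated, so a small coefficient does not necessarily mean the model is useless on its own.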

In summary, stacking models is a powerful technique for improving the accuracy and interpretability of machine learning models. By combining the predictions of multiple models, it can create a more accurate and robust prediction while reducing the risk of overfitting.

How to Stack Models

This section walks through the steps involved in stacking models: preparing the data, selecting the base models, and combining their predictions.

Data Preparation

Before we can stack models, we need to prepare the data. This involves splitting the data into training and validation sets, and then training multiple base models on the training set. The base models then predict on the validation set, and those predictions become the input features used to train the meta-model.
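The data preparation steps described here can be sketched as follows. This is a minimal example under stated assumptions: a synthetic dataset, two arbitrary base models, a logistic regression meta-model, and a hypothetical helper named `build_meta_features`.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

# Synthetic data split into training and validation sets (illustrative only).
X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

base_models = [
    DecisionTreeClassifier(max_depth=3, random_state=0),
    KNeighborsClassifier(n_neighbors=5),
]

# Step 1: fit every base model on the training split only.
for model in base_models:
    model.fit(X_train, y_train)


def build_meta_features(models, features):
    """Stack each model's positive-class probability into one column."""
    return np.column_stack([m.predict_proba(features)[:, 1] for m in models])


# Step 2: base-model predictions on the validation split become meta-features.
meta_X_val = build_meta_features(base_models, X_val)

# Step 3: fit the meta-model on those validation-set predictions.
meta_model = LogisticRegression()
meta_model.fit(meta_X_val, y_val)

# Prediction time: run the base models first, then the meta-model.
final_predictions = meta_model.predict(build_meta_features(base_models, X_val[:5]))
```

The last line reuses validation rows only to show the prediction path. A fair performance estimate would need a separate test set that neither the base models nor the meta-model has seen.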

Model Selection

The selection of base models is critical to the success of the stacking ensemble. We want to choose models that are diverse and complementary in their predictions. This can be achieved by selecting models with different architectures, hyperparameters, and training algorithms.
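One possible way to check whether candidate base models are diverse is to look at how strongly their predictions correlate on the same rows. The sketch below is a heuristic rather than a standard procedure. The function name `prediction_correlation` and the probability values are made up for illustration.

```python
import numpy as np


def prediction_correlation(predictions):
    """Return model names and the pairwise Pearson correlation of their predictions."""
    names = list(predictions)
    matrix = np.corrcoef(np.vstack([predictions[name] for name in names]))
    return names, matrix


# Hypothetical predicted probabilities from three base models on the same six rows.
predictions = {
    "model_a": np.array([0.90, 0.80, 0.20, 0.10, 0.70, 0.30]),
    "model_b": np.array([0.85, 0.75, 0.25, 0.15, 0.65, 0.35]),
    "model_c": np.array([0.60, 0.90, 0.40, 0.30, 0.20, 0.50]),
}

names, corr = prediction_correlation(predictions)
for name, row in zip(names, corr):
    print(name, np.round(row, 2))
```

In this made-up data, model_a and model_b are almost perfectly correlated, so adding both gives the meta-model little new information, while model_c disagrees more often and is the more complementary candidate.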

Ensemble Methods

There are several methods for combining the predictions of base models. The first two use a fixed rule and need no meta-model, while the third learns the combination:

- Simple Average: The predictions of the base models are averaged to form the final prediction.

- Weighted Average: The predictions of the base models are averaged with weights set according to each model's performance on the validation set.

- Stacking: The predictions of the base models are used as input to a meta-model, which learns to combine them optimally.
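The two averaging methods in the list above can be sketched with plain NumPy. The probabilities and validation accuracies below are invented for the example, and making the weights proportional to accuracy is just one simple weighting scheme among several.

```python
import numpy as np

# Hypothetical positive-class probabilities:
# rows are samples, columns are base models.
base_probs = np.array([
    [0.9, 0.6, 0.8],
    [0.2, 0.4, 0.1],
    [0.6, 0.3, 0.7],
])

# Simple average: every base model gets the same weight.
simple_avg = base_probs.mean(axis=1)

# Weighted average: weights proportional to (hypothetical) validation accuracy,
# normalized so that they sum to 1.
val_accuracy = np.array([0.90, 0.70, 0.80])
weights = val_accuracy / val_accuracy.sum()
weighted_avg = base_probs @ weights

print("simple:  ", np.round(simple_avg, 3))
print("weighted:", np.round(weighted_avg, 3))
```

Stacking replaces these fixed weights with a meta-model that learns the combination from data.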

In conclusion, stacking can improve the accuracy and robustness of predictions when the data is split carefully, the base models are diverse and complementary, and their predictions are combined by a meta-model rather than a fixed rule.

Best Practices for Stacking Models

When it comes to stacking models, certain best practices can help you create strong ensemble predictions. This section covers three of them: avoiding overfitting, choosing appropriate models, and evaluating performance.

Avoiding Overfitting

Overfitting is a common issue in machine learning, and it can be particularly problematic when stacking models. To avoid overfitting, it is important to use a diverse set of base models. This means that you should choose models that have different strengths and weaknesses, rather than simply using multiple versions of the same model.

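Another way to avoid overfitting is to use cross-validation. This involves splitting your data into multiple folds, training your models on some of the folds, and predicting on the remaining fold. In stacking, these out-of-fold predictions are what the meta-model should be trained on, so that it never sees a prediction a base model made for a row it was fitted on. Cross-validation also gives you a more accurate sense of how your models will perform on new data.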
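A minimal sketch of using cross-validation inside a stack, assuming scikit-learn's `cross_val_predict` and a synthetic dataset: each base model's prediction for a row comes from a fold in which that row was held out, and the meta-model is trained on those predictions. The model choices and variable names are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_predict
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=0)

base_models = [
    DecisionTreeClassifier(max_depth=3, random_state=0),
    KNeighborsClassifier(n_neighbors=5),
]

# Each row's prediction comes from a model that did not see that row in training.
oof_columns = [
    cross_val_predict(model, X, y, cv=5, method="predict_proba")[:, 1]
    for model in base_models
]
meta_X = np.column_stack(oof_columns)

# The meta-model learns from out-of-fold predictions only.
meta_model = LogisticRegression().fit(meta_X, y)

# cross_val_predict fits clones, so refit the base models on all the data
# before using them to score new rows at prediction time.
for model in base_models:
    model.fit(X, y)
```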

Choosing Appropriate Models

When choosing models to use in your stack, it is important to consider their individual strengths and weaknesses. You should choose models that are good at different aspects of the problem you are trying to solve. For example, if you are working on a classification problem, you might choose one model that is good at handling categorical data and another that is good at handling numerical data.

It is also important to consider the complexity of the models you are using. More complex models may be more powerful, but they can also be more prone to overfitting. It is often a good idea to use a mix of simple and complex models in your stack.

Evaluating Performance

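Finally, it is important to evaluate the performance of your stacked models. One way to do this is to use a holdout set, which is a portion of your data that you set aside for testing and never use to fit either the base models or the meta-model. You can train your models on the rest of the data and then evaluate their performance on the holdout set. Comparing the stack against each base model on the same holdout set shows whether stacking actually helps.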

Another way to evaluate performance is to use cross-validation, as mentioned earlier. By doing this, you can get a more accurate sense of how your models will perform on new data.
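Both evaluation approaches can be sketched with scikit-learn. The example below scores two base models and a stack on the same holdout set, then cross-validates the whole stack. The dataset, model choices, and accuracy as the metric are assumptions made for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier

X, y = make_classification(n_samples=400, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

base_models = [
    ("knn", KNeighborsClassifier(n_neighbors=5)),
    ("forest", RandomForestClassifier(n_estimators=25, random_state=0)),
]
stack = StackingClassifier(
    estimators=base_models, final_estimator=LogisticRegression(), cv=5
)

# Holdout evaluation: score each base model and the stack on unseen rows.
holdout_scores = {}
for name, model in base_models + [("stack", stack)]:
    model.fit(X_train, y_train)
    holdout_scores[name] = accuracy_score(y_test, model.predict(X_test))

# Cross-validated evaluation of the whole stack (base models plus meta-model).
cv_scores = cross_val_score(stack, X, y, cv=5, scoring="accuracy")

for name, score in holdout_scores.items():
    print(f"holdout accuracy, {name}: {score:.3f}")
print(f"cross-validated accuracy, stack: {cv_scores.mean():.3f}")
```

If the stack does not beat its best base model on data it has not seen, the extra complexity may not be worth it.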

In summary, to create strong ensemble predictions using stacked models, it is important to avoid overfitting, choose appropriate models, and evaluate performance carefully. By following these best practices, you can create models that are often more accurate and robust than any individual model on its own.

Real-World Applications

Stacking models have been used in a variety of industries to improve the accuracy of predictions. In this section, we will explore some real-world applications of stacking models in finance, marketing, and healthcare.

Finance

In finance, stacking models have been used to predict stock prices, detect fraud, and assess credit risk. By combining the predictions of multiple models, stacking can help reduce the risk of false positives or false negatives. For example, a bank may use stacking to assess the creditworthiness of a loan applicant by combining the predictions of multiple models that analyze credit history, income, and other factors.

Marketing

In marketing, stacking models have been used to predict customer behavior, optimize advertising campaigns, and personalize recommendations. By combining the predictions of multiple models, stacking can help identify patterns and trends that may not be apparent from a single model. For example, an e-commerce website may use stacking to personalize product recommendations by combining the predictions of multiple models that analyze purchase history, browsing behavior, and demographic data.

Healthcare

In healthcare, stacking models have been used to diagnose diseases, predict treatment outcomes, and identify risk factors. By combining the predictions of multiple models, stacking can help improve the accuracy of diagnoses and reduce the risk of misdiagnosis. For example, a hospital may use stacking to diagnose cancer by combining the predictions of multiple models that analyze medical history, imaging data, and genetic information.

Overall, stacking models have proven to be a powerful tool for improving the accuracy of predictions in a variety of industries. By combining the predictions of multiple models, stacking can help reduce the risk of false positives or false negatives and identify patterns and trends that may not be apparent from a single model.

Frequently Asked Questions

What is model stacking and how does it differ from other ensemble learning methods?

Model stacking, also known as stacked generalization, is itself a type of ensemble learning, in which multiple models are combined to improve prediction accuracy. Ensemble methods such as voting and averaging combine model outputs with a fixed rule, and bagging and boosting typically combine many instances of the same kind of model. Stacking instead trains a meta-model that learns how to combine the predictions of base models, usually of different kinds, trained on the same dataset.

What are the benefits of using stacked ensemble models in machine learning?

Stacked ensemble models have several benefits in machine learning. They can improve prediction accuracy by combining the strengths of multiple models, reduce overfitting by using a meta-model to generalize the predictions of base models, and provide a more robust and reliable solution to complex problems.

How can stacking improve prediction accuracy compared to using a single model?

Stacking can improve prediction accuracy by combining the predictions of multiple models that are trained on the same dataset. This allows the strengths of each model to be utilized while minimizing their weaknesses. Additionally, the meta-model learns how much to trust each base model, which can compensate for the errors of individual models and improve the accuracy of the final predictions.

What are some popular algorithms used in stacking ensemble models?

There are several popular algorithms used in stacking ensemble models, including random forest, gradient boosting, support vector machines, and neural networks. These algorithms are often used as base models and combined using a meta-model such as logistic regression or a neural network.

What are the disadvantages of using stacking ensemble models?

One disadvantage of using stacking ensemble models is that they can be computationally expensive, requiring significant resources and time to train and optimize. Additionally, they can be more complex to implement and require more expertise in machine learning than traditional models.

How can you implement a stacking classifier in Python?

A stacking classifier can be implemented in Python using libraries such as scikit-learn and Keras. The process involves training multiple base models on the same dataset, using cross-validation to generate out-of-fold predictions, and training a meta-model on those predictions. scikit-learn packages these steps in the StackingClassifier class in sklearn.ensemble. The resulting stacked ensemble model can then be used for prediction.
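A minimal sketch with scikit-learn's `StackingClassifier` is shown below. The synthetic dataset, the three base models, and the logistic regression meta-model are assumptions chosen for illustration; real projects would substitute their own data and tuned models.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    GradientBoostingClassifier,
    RandomForestClassifier,
    StackingClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import LinearSVC

# Synthetic binary classification data (illustrative only).
X, y = make_classification(n_samples=400, n_features=12, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# Base models of different kinds; the SVM is scaled inside a pipeline.
estimators = [
    ("forest", RandomForestClassifier(n_estimators=50, random_state=42)),
    ("boosting", GradientBoostingClassifier(random_state=42)),
    ("svm", make_pipeline(StandardScaler(), LinearSVC(random_state=42))),
]

# cv=5 makes the meta-model train on 5-fold out-of-fold predictions.
clf = StackingClassifier(
    estimators=estimators,
    final_estimator=LogisticRegression(),
    cv=5,
)
clf.fit(X_train, y_train)

predictions = clf.predict(X_test)
test_accuracy = clf.score(X_test, y_test)
print(f"stacked test accuracy: {test_accuracy:.3f}")
```

With the default `stack_method="auto"`, each base model feeds the meta-model through `predict_proba` when it is available and through `decision_function` otherwise, which is why the linear SVM can be used without probability estimates.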