Introduction

Machine learning has changed the way we approach data analysis and prediction. Among ensemble learning methods, bagging and boosting are two of the most widely used. In this guide, we explain how each technique works, weigh their advantages and disadvantages, compare them, and survey the algorithms built on them. We also note the role played by Analytics Vidhya, a prominent platform in the machine learning community, in fostering knowledge exchange and empowering data enthusiasts.

I. Bagging in Machine Learning

1. Definition of Bagging

Bagging, short for bootstrap aggregating, is an ensemble learning technique that combines the predictions of multiple models to obtain a robust and accurate final prediction. It involves training multiple models on different subsets of the training data through a process called bootstrapping.

2. How does Bagging work?

Bagging works by creating multiple subsets of the original training data through random sampling with replacement (bootstrap samples). Each subset is used to train a separate model, typically a high-variance learner such as a decision tree. The final prediction is obtained by averaging the predictions of the individual models (for regression) or through a majority vote (for classification).
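The procedure above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the toy dataset, the one-feature threshold classifier used as the base model, and all function names are assumptions made for the example.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in data]

def fit_stump(sample):
    """Fit a threshold classifier that predicts 1 if x > threshold, else 0.

    Every feature value in the sample is tried as a threshold and the one
    with the fewest training errors is kept.
    """
    best_threshold, best_errors = None, None
    for threshold, _ in sample:
        errors = sum(int(x > threshold) != y for x, y in sample)
        if best_errors is None or errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold

def bagging_fit(data, n_models, seed=0):
    """Train one stump per bootstrap sample."""
    rng = random.Random(seed)
    return [fit_stump(bootstrap_sample(data, rng)) for _ in range(n_models)]

def bagging_predict(thresholds, x):
    """Combine the stumps by majority vote."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: (feature, label) pairs, label 1 for larger features.
data = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0),
        (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]
models = bagging_fit(data, n_models=25)
print(bagging_predict(models, 0.0), bagging_predict(models, 10.0))
```

Using an odd number of models avoids tied votes in the binary case. For regression, the vote would be replaced by the mean of the individual predictions.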

3. Advantages of Bagging

Bagging offers several advantages, including:

- Reduction of overfitting: By training models on different subsets of the data, bagging helps reduce overfitting and improves generalization.

- Increased stability: Bagging improves the stability of predictions by reducing the impact of outliers or noisy data points.

- Parallelization: The training of individual models in bagging can be easily parallelized, enabling faster processing.
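The variance-reduction effect behind the first two points can be illustrated with a small simulation. It rests on a deliberately idealized assumption: each "model" is just the true value plus independent Gaussian noise. Real bagged models are trained on overlapping samples and are therefore correlated, so the reduction in practice is smaller than what this sketch shows. All names and constants are illustrative.

```python
import random
import statistics

def noisy_prediction(rng, true_value=10.0, noise_sd=2.0):
    """Stand-in for one high-variance model: the true value plus noise."""
    return rng.gauss(true_value, noise_sd)

def averaged_prediction(rng, n_models):
    """Average the predictions of n_models independent noisy models."""
    return sum(noisy_prediction(rng) for _ in range(n_models)) / n_models

rng = random.Random(0)
n_trials = 2000

# Spread of a single model's prediction across many trials.
single = [noisy_prediction(rng) for _ in range(n_trials)]
# Spread of a 25-model average across the same number of trials.
averaged = [averaged_prediction(rng, n_models=25) for _ in range(n_trials)]

single_variance = statistics.pvariance(single)
averaged_variance = statistics.pvariance(averaged)
print(f"single model variance:   {single_variance:.3f}")
print(f"25-model average variance: {averaged_variance:.3f}")
```

With independent noise, the variance of the average shrinks roughly in proportion to the number of models, which is the best case that bagging approximates.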

4. Disadvantages of Bagging

Despite its merits, bagging has a few limitations, such as:

- Lack of interpretability: The final prediction obtained through bagging might lack interpretability, as it combines the outputs of multiple models.

- Increased computational complexity: Training multiple models and combining their predictions can be computationally expensive, especially for large datasets.

5. Examples of Bagging in Machine Learning

Bagging has been successfully applied in various domains. For instance:

- Random Forest: A popular bagging-based algorithm that trains many decision trees on bootstrap samples, considers only a random subset of features at each split, and combines the trees to tackle classification and regression problems.

- Ensemble of Neural Networks: Bagging can also be applied to neural networks, where each model is trained on a different subset of the data.

II. Boosting in Machine Learning

1. Definition of Boosting

Boosting is another ensemble learning technique that improves predictive performance by training weak learners sequentially and combining their outputs. Unlike bagging, each new learner concentrates on the examples its predecessors handled poorly, for instance by giving more weight to misclassified samples (as in AdaBoost) or by fitting the errors that remain (as in gradient boosting).

2. How does Boosting work?

Boosting works iteratively by training weak models, such as decision stumps or shallow decision trees, where each subsequent model tries to correct the mistakes made by the previous ones. In weight-based variants such as AdaBoost, misclassified instances receive higher weights after each round, forcing the subsequent models to pay more attention to those samples.
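The reweighting loop can be sketched as a small AdaBoost-style routine in plain Python. This is an illustrative sketch under stated assumptions: labels are -1 or +1, the weak learner is a one-feature decision stump, and the toy dataset and function names are made up for the example.

```python
import math

def stump_output(x, threshold, polarity):
    """A stump predicts polarity if x > threshold, otherwise -polarity."""
    return polarity if x > threshold else -polarity

def fit_weighted_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    best = None
    for threshold in xs:
        for polarity in (1, -1):
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_output(x, threshold, polarity) != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost_fit(xs, ys, n_rounds):
    """Train n_rounds stumps, reweighting the samples after each round."""
    n = len(xs)
    weights = [1.0 / n] * n  # start with uniform sample weights
    ensemble = []
    for _ in range(n_rounds):
        error, threshold, polarity = fit_weighted_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this stump's say in the vote
        ensemble.append((alpha, threshold, polarity))
        # Misclassified samples (y * output = -1) are scaled up by exp(alpha);
        # correctly classified ones are scaled down by exp(-alpha).
        weights = [w * math.exp(-alpha * y * stump_output(x, threshold, polarity))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted sum of stump outputs."""
    score = sum(alpha * stump_output(x, threshold, polarity)
                for alpha, threshold, polarity in ensemble)
    return 1 if score >= 0 else -1

# Hypothetical toy data that no single stump can classify perfectly.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(ensemble, x) for x in xs])
```

On this toy set the best single stump misclassifies three of the ten points, while the weighted combination of three stumps classifies every training point correctly.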

3. Advantages of Boosting

Boosting offers several advantages, including:

- Improved predictive accuracy: Boosting can significantly enhance the performance of weak learners, resulting in highly accurate predictions.

- Ability to handle imbalanced data: Boosting can help with imbalanced datasets, because minority class samples that are misclassified receive more attention in later rounds.

- Interpretability of weak models: The individual weak learners used in boosting, such as decision stumps, are simple enough to inspect, although the combined ensemble is still harder to interpret than a single model.

4. Disadvantages of Boosting

Despite its strengths, boosting has a few limitations, such as:

- Sensitivity to noise and outliers: Boosting can be sensitive to noisy or outlier data points, potentially leading to overfitting.

- Increased risk of overfitting: Overfitting can occur if boosting iterates for too long or if the weak learners are too complex.

5. Examples of Boosting in Machine Learning

Boosting has found successful applications in various domains. Some notable examples include:

- AdaBoost: A widely used boosting algorithm that combines weak learners, often decision stumps, to solve binary classification problems.

- Gradient Boosting Machines (GBM): GBM iteratively builds an ensemble of weak learners, optimizing a loss function through gradient descent, and has achieved state-of-the-art performance in many competitions.
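To make the gradient boosting idea concrete, here is a minimal sketch for regression with squared-error loss, where the negative gradient is simply the residual. The regression stump used as the weak learner, the toy data, and the default settings are assumptions for illustration; library implementations add many refinements.

```python
def fit_regression_stump(xs, targets):
    """Find the single split that minimizes squared error on targets.

    Returns (threshold, left_mean, right_mean): the stump predicts
    left_mean for x <= threshold and right_mean otherwise.
    """
    best = None
    for threshold in sorted(set(xs))[:-1]:  # both sides stay non-empty
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1], best[2], best[3]

def gradient_boost_fit(xs, ys, n_rounds=50, learning_rate=0.1):
    """Start from the mean, then repeatedly fit a stump to the residuals."""
    base = sum(ys) / len(ys)
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For squared-error loss, the negative gradient is the residual.
        residuals = [y - p for y, p in zip(ys, predictions)]
        threshold, left_mean, right_mean = fit_regression_stump(xs, residuals)
        stumps.append((threshold, left_mean, right_mean))
        predictions = [
            p + learning_rate * (left_mean if x <= threshold else right_mean)
            for x, p in zip(xs, predictions)
        ]
    return base, learning_rate, stumps

def gradient_boost_predict(model, x):
    """Base value plus the shrunken contribution of every stump."""
    base, learning_rate, stumps = model
    return base + sum(
        learning_rate * (left_mean if x <= threshold else right_mean)
        for threshold, left_mean, right_mean in stumps
    )

def mean_squared_error(ys, predictions):
    return sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys)

# Hypothetical toy data: y = x squared.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [x ** 2 for x in xs]
model = gradient_boost_fit(xs, ys)
baseline_mse = mean_squared_error(ys, [sum(ys) / len(ys)] * len(ys))
boosted_mse = mean_squared_error(ys, [gradient_boost_predict(model, x) for x in xs])
print(f"baseline MSE: {baseline_mse:.2f}, boosted MSE: {boosted_mse:.2f}")
```

The learning rate shrinks each stump's contribution, so more rounds are needed, but each step is less likely to overshoot. The comparison here is on training data only and says nothing about generalization.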

III. Differences Between Bagging and Boosting

1. Differences between Bagging and Boosting

Bagging and boosting differ in several aspects, including:

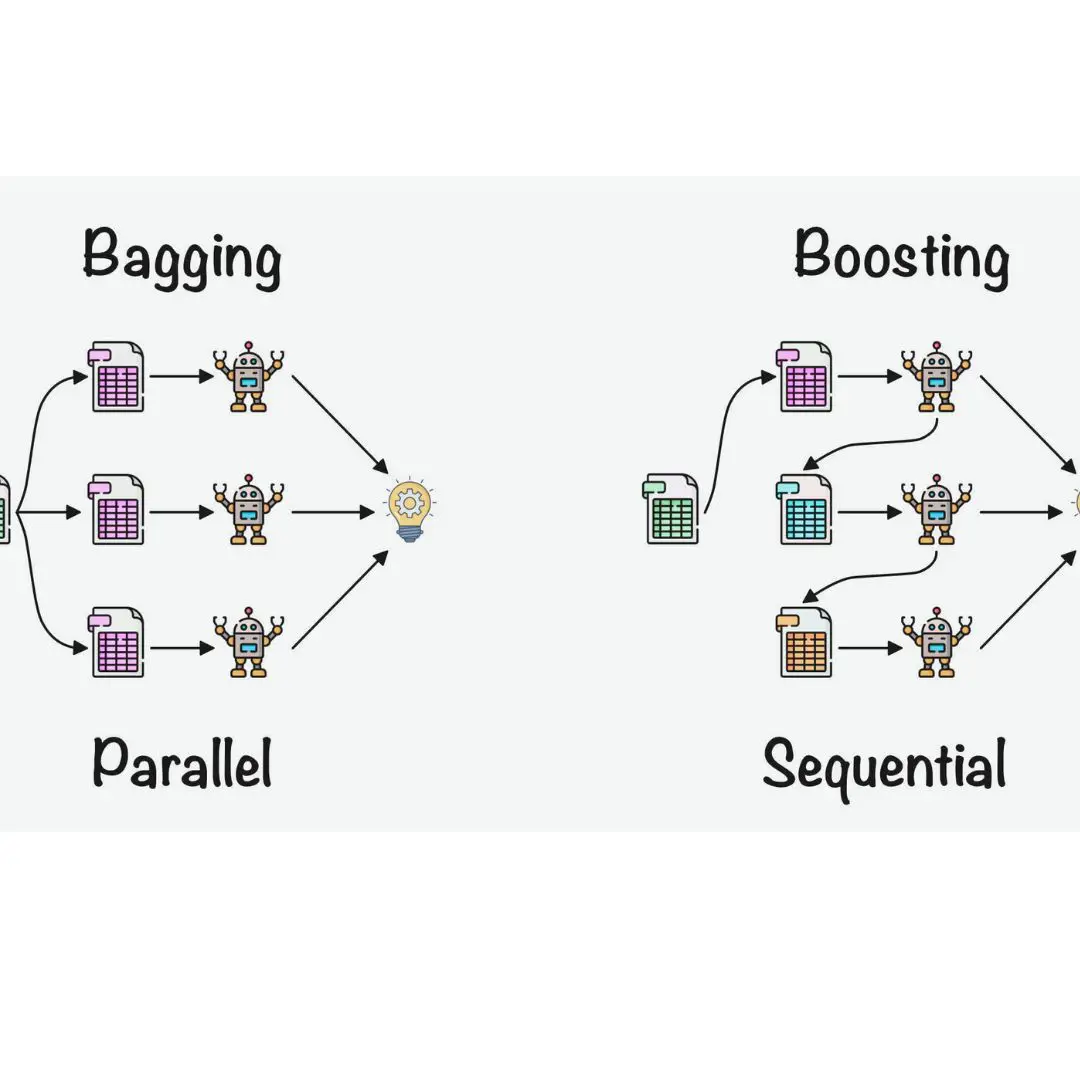

- Training approach: Bagging trains models independently, while boosting trains models sequentially, adjusting weights between iterations.

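- Handling of misclassified instances: Bagging gives every training instance the same chance of being sampled, whether or not earlier models misclassified it, whereas boosting directs later models toward misclassified samples, for example by assigning them higher weights.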

- Final prediction: Bagging combines predictions through averaging or voting, while boosting assigns weights to each model’s prediction and combines them accordingly.

2. When to use Bagging vs. Boosting

The choice between bagging and boosting depends on the problem at hand:

- Bagging is generally preferred when the goal is to reduce variance and improve stability. It works well with complex models and large datasets.

- Boosting is suitable when the focus is on improving accuracy by reducing bias, especially with weak learners. It can help with imbalanced data and can work well with smaller datasets, provided they are not too noisy.

IV. Bagging and Boosting Algorithms

1. Popular Bagging Algorithms

Some popular bagging algorithms include:

- Random Forest: A widely used bagging-based algorithm that combines multiple decision trees and addresses classification and regression tasks.

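- Bagging meta-estimators: Generic wrappers, such as scikit-learn's BaggingClassifier and BaggingRegressor, that apply bagging to any base model. Related tree ensembles like ExtraTrees go a step further than random forests by also randomizing the split thresholds, which can enhance the diversity of the models.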

2. Popular Boosting Algorithms

Notable boosting algorithms include:

- AdaBoost: A classic boosting algorithm that adjusts the weights of training instances to sequentially train weak learners.

- Gradient Boosting Machines (GBM): GBM iteratively constructs an ensemble of weak models, optimizing a loss function using gradient descent.

3. How these Algorithms Work

Detailed explanations of how popular bagging and boosting algorithms work will be covered in future articles.

V. Implementing Bagging and Boosting in Python

1. Introduction to Python for Machine Learning

Before diving into implementation, it is essential to have a basic understanding of Python for machine learning. We will cover the fundamentals of Python and key libraries such as scikit-learn.

2. Implementing Bagging in Python

A step-by-step guide on implementing bagging algorithms in Python, utilizing scikit-learn’s ensemble module, will be provided.
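As a preview, a minimal sketch with scikit-learn's ensemble module might look like the following. The synthetic dataset, the choice of 50 estimators, and the train/test split are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import BaggingClassifier, RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data, used only for illustration.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Bagging: 50 decision trees, each fit on a bootstrap sample of the
# training set. The base estimator is passed positionally because its
# keyword name differs between scikit-learn versions.
bagging = BaggingClassifier(DecisionTreeClassifier(), n_estimators=50, random_state=0)
bagging.fit(X_train, y_train)

# Random forest: bagged trees plus random feature subsets at each split.
forest = RandomForestClassifier(n_estimators=50, random_state=0)
forest.fit(X_train, y_train)

bagging_accuracy = bagging.score(X_test, y_test)
forest_accuracy = forest.score(X_test, y_test)
print(f"bagged trees:  {bagging_accuracy:.3f}")
print(f"random forest: {forest_accuracy:.3f}")
```

Both estimators accept an `n_jobs` argument to train the individual models in parallel, which reflects the independence of the models in bagging.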

3. Implementing Boosting in Python

Similar to bagging, we will provide a detailed guide on implementing boosting algorithms in Python using popular libraries such as XGBoost and LightGBM.
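Until then, here is a minimal sketch that uses scikit-learn's own boosting estimators instead of XGBoost or LightGBM, to avoid extra dependencies. Both of those libraries provide scikit-learn-style classifiers (`XGBClassifier` and `LGBMClassifier`) that follow the same fit and score pattern once installed. The dataset and hyperparameters below are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier, GradientBoostingClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data, used only for illustration.
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# AdaBoost with decision stumps (depth-1 trees) as weak learners.
# The base estimator is passed positionally because its keyword name
# differs between scikit-learn versions.
ada = AdaBoostClassifier(
    DecisionTreeClassifier(max_depth=1), n_estimators=100, random_state=0
)
ada.fit(X_train, y_train)

# Gradient boosting with shallow trees and a small learning rate.
gbm = GradientBoostingClassifier(
    n_estimators=100, learning_rate=0.1, max_depth=3, random_state=0
)
gbm.fit(X_train, y_train)

ada_accuracy = ada.score(X_test, y_test)
gbm_accuracy = gbm.score(X_test, y_test)
print(f"AdaBoost:          {ada_accuracy:.3f}")
print(f"gradient boosting: {gbm_accuracy:.3f}")
```

The number of estimators and the learning rate interact: a smaller learning rate usually needs more rounds, so the two are typically tuned together on validation data.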

VI. Conclusion

In conclusion, bagging and boosting are powerful ensemble learning techniques that contribute to improved predictive performance and model robustness in machine learning. Bagging focuses on reducing variance and improving stability, while boosting aims to enhance accuracy by iteratively training weak learners. The future of bagging and boosting lies in their continued integration with advanced machine learning algorithms, enabling the development of more accurate and robust models.

FAQs

Q: Can bagging and boosting be combined?

A: Yes, bagging and boosting can be combined, an approach sometimes described as “boosting with bagging.” For example, boosted models can themselves be bagged, or each boosting round can be trained on a random subsample of the data. Such combinations aim to pair the ability of boosting to improve weak learners with the stability of bagging, which helps reduce overfitting.

Q: Are bagging and boosting only applicable to classification problems?

A: No, bagging and boosting can be applied to both classification and regression problems. The underlying concept of combining multiple models holds for various types of predictive tasks.

Q: Do bagging and boosting require labelled data?

A: Yes, bagging and boosting algorithms typically require labelled data for training the models. The quality and availability of labelled data play a crucial role in the performance of these techniques.

Q: Are there any trade-offs between bagging and boosting?

A: Yes, there are trade-offs to consider. Bagging is easier to parallelize because its models are trained independently, while boosting, which trains its models one after another, often achieves higher predictive accuracy. The choice depends on the specific requirements of the problem.

Q: How do bagging and boosting handle missing data?

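A: This depends on the base learner and the implementation more than on bagging or boosting as such. Some implementations, such as XGBoost and LightGBM, handle missing values natively. With others, imputation techniques or specific modifications may be necessary to make good use of the available information.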

As machine learning continues to advance, bagging and boosting techniques remain fundamental tools for improving predictive performance and model robustness. Analytics Vidhya, with its vast array of educational resources and a thriving community, plays a pivotal role in fostering knowledge exchange and empowering data enthusiasts to explore the exciting world of machine learning.