
Support Vector Machines (SVMs) are a powerful family of supervised machine learning algorithms that can be used for both classification and regression tasks. They are particularly useful in classification problems, where the goal is to predict a categorical variable. SVMs work by finding the hyperplane that separates the data into different classes with the largest possible margin.

Despite their effectiveness, SVMs can be intimidating for beginners due to their mathematical complexity. However, with the right guidance, anyone can learn how to use SVMs to solve classification problems. In this article, we will demystify SVMs and provide a beginner-friendly guide to understanding and implementing them. We will cover the basic concepts behind SVMs, explain the different types of SVMs, and provide examples of how to use them in practice. By the end of this article, you will have a solid understanding of SVMs and be able to use them to solve classification problems on your own.

Understanding SVM

What is SVM?

Support Vector Machines (SVM) is a popular machine learning algorithm used for classification and regression problems. It is a supervised learning technique, meaning it learns from labeled examples. SVM is based on the idea of finding the hyperplane that best separates the data into different classes.

1 Understanding the Basics:

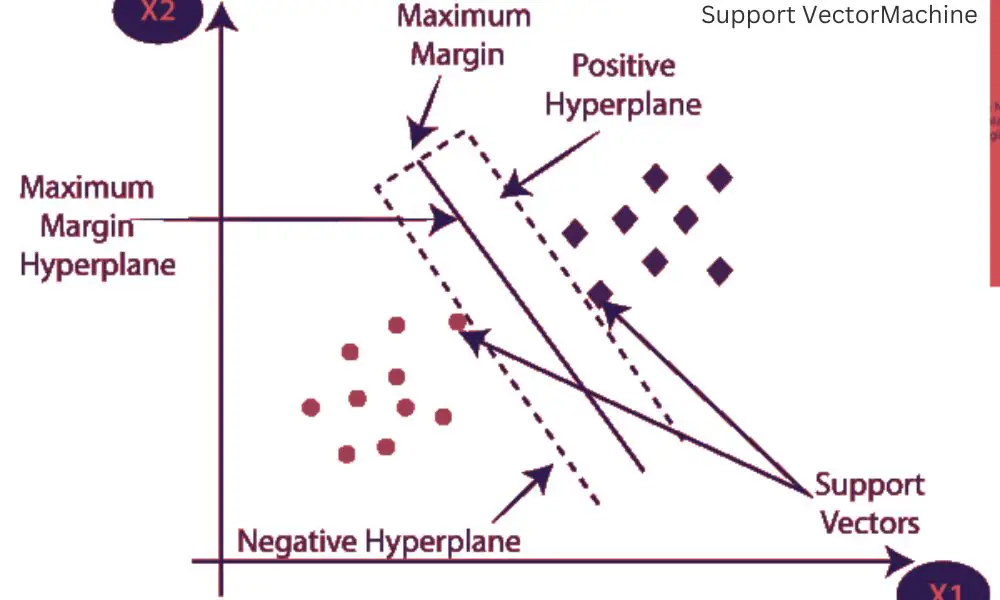

1.1. Linear Classification: In its basic form, SVM is a linear classifier that seeks an optimal hyperplane separating different classes of data points.

1.2. Margin Maximization: SVM maximizes the margin between the hyperplane and the nearest data points of each class, enhancing its generalization ability.

1.3. Support Vectors: These are the data points closest to the decision boundary and play a crucial role in defining the hyperplane.

2 Kernel Functions:

2.1. Linear Kernel: The linear kernel is a straightforward choice for linearly separable data.

2.2. Non-linear Kernel: SVM can handle non-linearly separable data using kernel functions, such as the polynomial, radial basis function (RBF), and sigmoid kernels.

3 Soft Margin Classification:

3.1. Handling Overlapping Classes: SVM introduces a soft margin approach to handle overlapping classes by allowing some misclassifications while still maintaining a reasonable margin.

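3.2. Penalty Parameter (C): The penalty parameter C controls the trade-off between maximizing the margin and allowing misclassifications. A small C tolerates more margin violations and produces a wider margin, while a large C penalizes violations heavily and fits the training data more tightly, which can lead to overfitting.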

4 Multi-Class Classification:

4.1. One-vs-One (OvO): SVM can be extended to multi-class classification by training one binary classifier for every pair of classes (k(k-1)/2 classifiers for k classes) and predicting the class that wins the most pairwise votes.

4.2. One-vs-All (OvA): Alternatively, the OvA strategy (also called one-vs-rest) trains k binary classifiers, each separating one class from all the others, and predicts the class whose classifier returns the highest score.

5 Dealing with Imbalanced Datasets:

5.1. Class Weights: SVM allows assigning different weights to different classes, so that errors on the minority class cost more during training.

5.2. Cost-Sensitive SVM: More generally, cost-sensitive SVM assigns different misclassification costs (for example, a separate penalty C per class) to counter class imbalance; class weighting is one common way to implement it.
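The ideas in this outline combine into the standard soft-margin training objective. The sketch below writes it with slack variables ξ_i (how far point i violates the margin) and an optional per-class weight c_{y_i}, which is our own notation here; setting every c_{y_i} = 1 gives the ordinary soft-margin SVM. Libraries such as LIBSVM implement class weights in the same spirit, by scaling C separately for each class.

```latex
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \;
  \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{n} c_{y_i}\, \xi_i
\quad \text{subject to} \quad
  y_i\left(\mathbf{w}^{\top}\mathbf{x}_i + b\right) \ge 1 - \xi_i,
  \qquad \xi_i \ge 0, \quad i = 1, \dots, n
```

Minimizing ‖w‖ widens the margin (its width is 2/‖w‖), the ξ_i terms let classes overlap, C sets the trade-off between the two, and a larger c for the minority class makes its errors more expensive.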

How does SVM work?

SVM works by finding the hyperplane that maximizes the margin between the two classes. The margin is the distance between the hyperplane and the closest data points from each class, and a wider margin generally leads to better generalization on unseen data.
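To make the margin concrete, here is a small pure-Python sketch on four hypothetical 2-D points. The hyperplane w·x + b = 0 is set by hand rather than learned; for these points it happens to be the maximum-margin one, because it is the perpendicular bisector of the closest opposite pair, (2, 2) and (0, 0). With the hyperplane scaled so that y(w·x + b) = 1 on the closest points, the margin width is 2/‖w‖.

```python
import math

# Hypothetical toy data: (point, label) with labels +1 / -1.
points = [((2.0, 2.0), 1), ((3.0, 3.0), 1), ((0.0, 0.0), -1), ((-1.0, -1.0), -1)]

# Hand-picked hyperplane w . x + b = 0 (not learned here).
w = (0.5, 0.5)
b = -1.0

def decision(x):
    """Signed score w . x + b; its sign is the predicted class."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

norm_w = math.sqrt(sum(wi * wi for wi in w))

for x, y in points:
    functional = y * decision(x)    # >= 1 means correct side and outside the margin
    distance = functional / norm_w  # geometric distance to the hyperplane
    print(x, y, round(functional, 3), round(distance, 3))

margin_width = 2 / norm_w  # distance between the two margin boundaries
# Support vectors are the points lying exactly on the margin boundaries.
support_vectors = [x for x, y in points if math.isclose(y * decision(x), 1.0)]
print("margin width:", round(margin_width, 3))
print("support vectors:", support_vectors)
```

The margin width comes out as 2√2 ≈ 2.828, exactly the distance between (2, 2) and (0, 0), and only those two points are support vectors; moving (3, 3) or (-1, -1) slightly would not change the hyperplane.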

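In cases where the classes are not linearly separable, SVM uses a technique called the kernel trick. Conceptually, the data is mapped into a higher-dimensional space where the classes can be separated by a hyperplane; the trick is that a kernel function computes inner products in that space directly, so the mapping never has to be carried out explicitly.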
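The kernel trick can be checked numerically. For 2-D inputs, the degree-2 polynomial kernel (x·z)² equals an ordinary dot product after the explicit mapping φ(x) = (x1², √2·x1·x2, x2²). The pure-Python sketch below computes both sides; the function names are illustrative.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def phi(x):
    """Explicit degree-2 feature map for 2-D input: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def poly2_kernel(x, z):
    """Degree-2 polynomial kernel (x . z)^2: same value, no explicit mapping."""
    return dot(x, z) ** 2

x, z = (1.0, 2.0), (3.0, 4.0)
explicit = dot(phi(x), phi(z))  # map to 3-D, then take the dot product
implicit = poly2_kernel(x, z)   # stay in 2-D and square the dot product
print(explicit, implicit)       # both 121, up to floating-point rounding
```

For the RBF kernel the corresponding feature space is infinite-dimensional, so computing the kernel value directly is the only practical option.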

Types of SVM

There are different types of SVM that can be used based on the requirements of the problem. Some of the popular types of SVM are:

- Linear SVM: This type of SVM is used when the data is linearly separable. It finds the best hyperplane that separates the data into different classes.

- Non-Linear SVM: This type of SVM is used when the data is not linearly separable. It uses a kernel function to map the data into a higher-dimensional space where the classes can be separated by a hyperplane.

- Multi-Class SVM: This type of SVM is used when there are more than two classes in the data. It combines several binary SVMs using a strategy such as one-vs-one or one-vs-all.

- Probabilistic SVM: This type of SVM is used when the probability of a data point belonging to a particular class is required. It uses a technique called Platt scaling, which fits a sigmoid function to the SVM's decision values to turn them into probability estimates.
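A minimal sketch of Platt scaling in pure Python follows. It assumes you already have SVM decision values for a set of labeled points (the numbers below are made up) and fits the two parameters A and B of P(y = 1 | f) = 1 / (1 + exp(A·f + B)) by plain gradient descent on the cross-entropy, using Platt's smoothed targets. Practical implementations use more robust optimizers, and the decision values should ideally come from data not used to train the SVM (for example, via cross-validation). The function names are hypothetical.

```python
import math

def fit_platt(scores, labels, lr=0.01, iters=5000):
    """Fit A, B in P(y=1 | f) = 1 / (1 + exp(A*f + B)).

    scores: SVM decision values f; labels: 1 for positive, 0 for negative.
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    # Platt's smoothed targets keep A and B finite even for separable scores.
    t_pos = (n_pos + 1) / (n_pos + 2)
    t_neg = 1 / (n_neg + 2)
    targets = [t_pos if y == 1 else t_neg for y in labels]

    A, B = 0.0, 0.0
    for _ in range(iters):
        grad_A = grad_B = 0.0
        for f, t in zip(scores, targets):
            p = 1.0 / (1.0 + math.exp(A * f + B))
            # Derivatives of the cross-entropy loss with respect to A and B.
            grad_A += (t - p) * f
            grad_B += t - p
        A -= lr * grad_A
        B -= lr * grad_B
    return A, B

def platt_probability(f, A, B):
    return 1.0 / (1.0 + math.exp(A * f + B))

# Hypothetical decision values from an already-trained SVM.
scores = [2.1, 1.5, 0.8, 1.2, -0.3, -1.8, -0.9, -1.2, 0.4, -2.5]
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
A, B = fit_platt(scores, labels)
for f in (-2.0, 0.0, 2.0):
    print(f, round(platt_probability(f, A, B), 3))
```

Because the scores are positively related to the labels, A comes out negative, so larger decision values map to higher probabilities.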

To summarize: SVM is a powerful machine learning algorithm that can be used for classification and regression problems. It works by finding the hyperplane that best separates the data into different classes, and the type of SVM to use depends on the requirements of the problem.

SVM in Classification

What is classification?

Classification is the process of categorizing data into different classes or groups based on their features or attributes. It is a supervised learning technique that involves training a model on a labeled dataset to predict the class of new, unseen data.

How is SVM used in classification?

Support Vector Machines (SVM) is a popular classification algorithm that works by finding the best hyperplane that separates the data into different classes. The hyperplane is chosen such that it maximizes the margin, which is the distance between the hyperplane and the closest data points from each class. SVM can be used for both binary and multiclass classification tasks.

To use SVM for classification, we first need to train the model on a labeled dataset. During training, the algorithm learns the optimal hyperplane that separates the data into different classes. Once the model is trained, we can use it to predict the class of new, unseen data points.
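The train-then-predict workflow can be sketched in pure Python for the linear case. This is an illustration under stated assumptions, not a library implementation: it uses a tiny hypothetical dataset with labels +1/-1 and minimizes the soft-margin objective 0.5·‖w‖² + C·Σ max(0, 1 - y_i(w·x_i + b)) by full-batch subgradient descent. The function names `train_linear_svm` and `predict` are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=2000):
    """Minimize 0.5*||w||^2 + C * sum(max(0, 1 - y_i*(w . x_i + b)))
    with full-batch subgradient descent. Labels y_i must be +1 or -1."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = list(w)  # gradient of the 0.5*||w||^2 term
        grad_b = 0.0
        for xi, yi in zip(X, y):
            # Points inside the margin or misclassified add hinge-loss subgradients.
            if yi * (dot(w, xi) + b) < 1:
                for j in range(n_features):
                    grad_w[j] -= C * yi * xi[j]
                grad_b -= C * yi
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """Class +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if dot(w, x) + b >= 0 else -1

# Hypothetical labeled training set.
X_train = [(2, 2), (3, 3), (2, 3), (3, 2), (0, 0), (-1, -1), (0, -1), (-1, 0)]
y_train = [1, 1, 1, 1, -1, -1, -1, -1]

w, b = train_linear_svm(X_train, y_train)
# Predict the class of new, unseen points.
print([predict(w, b, x) for x in [(4, 4), (-2, -2), (2.5, 1.5)]])
```

Real libraries solve the same optimization problem with dedicated solvers (an SMO-type method in LIBSVM, coordinate descent in LIBLINEAR) and support non-linear kernels; subgradient descent was chosen here only because it fits in a few readable lines.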

Advantages of SVM in classification

SVM has several advantages that make it a popular choice for classification tasks. Some of these advantages are:

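- Effective in high-dimensional spaces: SVM often remains effective even when the number of features exceeds the number of samples, although the choice of kernel and regularization then matters a great deal for avoiding overfitting.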

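- Robust to some noise: the soft margin lets SVM accept a few misclassified or mislabeled points instead of bending the decision boundary to fit them; the parameter C controls how much those points cost.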

- Versatile: different kernel functions let the same algorithm learn linear or non-linear decision boundaries, and it supports both binary and multiclass classification.

- Memory efficient: the trained model's decision function uses only the support vectors, a subset of the training points, so only those need to be stored.
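The memory-efficiency point follows from the dual form of the decision function, f(x) = Σ α_i y_i K(x_i, x) + b: training points with α_i = 0 drop out, so only the support vectors need to be kept. The pure-Python sketch below hard-codes the dual coefficients for a four-point toy problem with a linear kernel. The values were worked out by hand for this data (they satisfy Σ α_i y_i = 0 and give w = Σ α_i y_i x_i = (0.5, 0.5)); they are not produced by a library.

```python
def linear_kernel(x, z):
    return sum(a * c for a, c in zip(x, z))

# Hypothetical trained model on four toy points (dual coefficients worked out by hand).
train_X = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, -1.0)]
train_y = [1, 1, -1, -1]
alphas = [0.25, 0.0, 0.25, 0.0]
b = -1.0

# Only points with a non-zero dual coefficient (the support vectors) are kept.
support = [(x, y, a) for x, y, a in zip(train_X, train_y, alphas) if a > 0]

def decision(x):
    """f(x) = sum over support vectors of alpha_i * y_i * K(x_i, x), plus b."""
    return sum(a * y * linear_kernel(sv, x) for sv, y, a in support) + b

print(len(support), "of", len(train_X), "training points stored")
print(decision((2.0, 2.0)), decision((0.0, 0.0)), decision((3.0, 3.0)))
```

The two support vectors sit exactly on the margin (f = +1 and f = -1), while (3, 3) scores 2 even though it was discarded from the stored model.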

Limitations of SVM in classification

While SVM has several advantages, it also has some limitations that should be taken into consideration. Some of these limitations are:

- Computationally intensive: training time for a kernel SVM grows at least quadratically with the number of samples, so it becomes slow on large datasets.

- Sensitivity to kernel choice: The performance of SVM can be highly dependent on the choice of kernel function. Choosing the wrong kernel can lead to poor performance.

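- Limited interpretability: with a linear kernel, the learned weights give some indication of feature importance, but with non-linear kernels SVM provides little insight into the underlying data distribution or which features matter.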

In summary, SVM is a powerful classification algorithm that can be used for a variety of tasks. It has several advantages, including its effectiveness in high-dimensional spaces, robustness to noise, versatility, and memory efficiency. However, it also has some limitations, including its computational intensity, sensitivity to kernel choice, and limited interpretability.

Kernel Functions in SVM

What are kernel functions?

Kernel functions are a crucial component of Support Vector Machines (SVM). A kernel function measures the similarity between two data points by computing their inner product in a higher-dimensional feature space, without explicitly transforming the data into that space. This lets SVM find a hyperplane that separates the classes in the higher-dimensional space, which corresponds to a non-linear boundary in the original space. The choice of kernel function can significantly impact the performance of the SVM.

Types of kernel functions

There are several types of kernel functions that can be used with SVM. Some of the most commonly used kernel functions are:

- Linear Kernel: This kernel function is used for linearly separable data, and it simply computes the dot product between two data points.

- Polynomial Kernel: This kernel function is used for data that is not linearly separable. It computes (γ(x·z) + r)^d, which corresponds to a feature space of polynomial combinations of the original features up to degree d (when r > 0).

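- Radial Basis Function (RBF) Kernel: This kernel function is used for data that is not linearly separable. It computes exp(-γ‖x - z‖²), which corresponds to an infinite-dimensional feature space; the parameter γ controls the width of the Gaussian (a larger γ gives a narrower Gaussian).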
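The three kernels above can be written in a few lines of pure Python. The parameter names `gamma`, `degree` and `coef0` mirror the names scikit-learn uses, but the default values here are arbitrary choices for this sketch.

```python
import math

def dot(x, z):
    return sum(a * b for a, b in zip(x, z))

def linear_kernel(x, z):
    """Plain dot product."""
    return dot(x, z)

def polynomial_kernel(x, z, degree=3, gamma=1.0, coef0=1.0):
    """(gamma * x . z + coef0) ** degree."""
    return (gamma * dot(x, z) + coef0) ** degree

def rbf_kernel(x, z, gamma=1.0):
    """exp(-gamma * ||x - z||^2): 1 for identical points, near 0 for distant ones."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

x, z = (1.0, 2.0), (3.0, 4.0)
print(linear_kernel(x, z))                # 11.0
print(polynomial_kernel(x, z, degree=2))  # (11 + 1) ** 2 = 144.0
print(rbf_kernel(x, z), rbf_kernel(x, x))

# Larger gamma means a narrower Gaussian: similarity falls off faster with distance.
for g in (0.1, 1.0, 10.0):
    print(g, rbf_kernel((0.0, 0.0), (1.0, 0.0), gamma=g))
```

The last loop shows why γ is worth tuning: with a large γ each training point only influences a small neighborhood, which can produce very wiggly decision boundaries.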

Choosing the right kernel function

Choosing the right kernel function is crucial for achieving good performance with SVM. The choice of kernel function depends on the characteristics of the data and the problem at hand.

Linear kernel functions are typically used when the data is linearly separable, while polynomial and RBF kernel functions are used when the data is not linearly separable. The degree of the polynomial function and the width of the Gaussian function in the RBF kernel can also be tuned to improve performance.

Kernels also differ in computational cost. Linear SVMs can be trained with specialized solvers, such as LIBLINEAR, that scale to very large datasets, whereas non-linear kernels require kernel values between pairs of training points, which becomes expensive as the dataset grows. Consider this cost, not just accuracy, when choosing a kernel for a particular problem.
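One reason non-linear kernels get expensive: a kernel SVM works with the Gram matrix of kernel values between every pair of training points, which has n × n entries for n points. The pure-Python sketch below builds it for a tiny hypothetical dataset with an RBF kernel. At n = 100,000 the full matrix would hold 10^10 entries, about 80 GB as 8-byte floats; libraries such as LIBSVM therefore compute kernel values on demand and keep only a cache of them, but the amount of kernel computation still grows quickly with n.

```python
import math

def rbf_kernel(x, z, gamma=1.0):
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

def gram_matrix(X, kernel):
    """n x n matrix of pairwise kernel values (symmetric, so half could be skipped)."""
    return [[kernel(xi, xj) for xj in X] for xi in X]

# Hypothetical tiny dataset.
X = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (2.0, 2.0)]
K = gram_matrix(X, rbf_kernel)
for row in K:
    print([round(v, 3) for v in row])

# Memory needed to store the full matrix for a larger dataset.
n = 100_000
print(f"{n * n:,} entries, ~{n * n * 8 / 1e9:.0f} GB as 8-byte floats")
```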

Hyperparameters in SVM

What are hyperparameters?

Hyperparameters are parameters that are not learned from the data but are set prior to training the model. In SVM, hyperparameters play a crucial role in determining the effectiveness of the model. They can be used to control the margin of the decision boundary, the trade-off between bias and variance, and the kernel function used for mapping the input data to a high-dimensional feature space.

Types of hyperparameters

SVM hyperparameters fall into two main groups: regularization parameters and kernel parameters. The main regularization parameter is C, which controls the margin of the decision boundary and helps avoid overfitting. Kernel parameters, such as γ for the RBF kernel or the degree d for the polynomial kernel, determine the similarity measure between input data points in the high-dimensional feature space. The choice of kernel itself is also a hyperparameter.

Tuning hyperparameters

Tuning hyperparameters is an important step in building an effective SVM model. The process involves finding the set of hyperparameters that maximizes the performance of the model. The most commonly used technique is grid search: a range of values is specified for each hyperparameter, and the model is trained and evaluated for every combination, typically with k-fold cross-validation.
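Grid search with cross-validation can be sketched in pure Python. This sketch assumes a linear SVM, so C is the only hyperparameter searched; for an RBF kernel you would loop over (C, γ) pairs instead. The trainer is a simple full-batch subgradient-descent stand-in for a real SVM solver, the data is hypothetical, and all function names are illustrative.

```python
from statistics import mean

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def train_linear_svm(X, y, C, lr=0.01, epochs=500):
    """Linear soft-margin SVM via full-batch subgradient descent (labels +1/-1)."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = list(w), 0.0
        for xi, yi in zip(X, y):
            if yi * (dot(w, xi) + b) < 1:
                grad_w = [g - C * yi * xj for g, xj in zip(grad_w, xi)]
                grad_b -= C * yi
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def accuracy(w, b, X, y):
    correct = sum(1 for xi, yi in zip(X, y) if (1 if dot(w, xi) + b >= 0 else -1) == yi)
    return correct / len(y)

def cross_val_score(X, y, C, k=3):
    """Mean validation accuracy over k folds (sample i goes to fold i % k)."""
    scores = []
    for fold in range(k):
        train = [i for i in range(len(X)) if i % k != fold]
        val = [i for i in range(len(X)) if i % k == fold]
        w, b = train_linear_svm([X[i] for i in train], [y[i] for i in train], C)
        scores.append(accuracy(w, b, [X[i] for i in val], [y[i] for i in val]))
    return mean(scores)

def grid_search(X, y, C_grid, k=3):
    """Return the C with the best cross-validated accuracy (first one wins ties)."""
    results = {C: cross_val_score(X, y, C, k) for C in C_grid}
    best_C = max(C_grid, key=lambda C: results[C])
    return best_C, results

# Hypothetical data, interleaved so that every fold contains both classes.
X = [(2, 2), (0, 0), (3, 3), (-1, -1), (2, 3), (0, -1),
     (3, 2), (-1, 0), (4, 3), (-1, -2), (3, 4), (-2, -1)]
y = [1, -1] * 6

best_C, results = grid_search(X, y, C_grid=[0.01, 0.1, 1.0, 10.0])
print(results)
print("best C:", best_C)
```

In practice, tools such as scikit-learn's `GridSearchCV` automate this loop. Either way, a final test set should stay outside the search entirely.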

Hyperparameter tuning can be computationally expensive and time-consuming, especially for large datasets. It is also important not to overfit the hyperparameters: evaluate each combination on held-out data (for example, with cross-validation) rather than on the training data, and keep a separate test set for the final performance estimate.

In conclusion, understanding and tuning hyperparameters is essential for building an effective SVM model. Regularization parameters and kernel parameters play a crucial role in determining the performance of the model, and tuning them can help in avoiding overfitting and improving the generalization performance.

Frequently Asked Questions

How does SVM differ from other classification algorithms?

SVM is a supervised learning algorithm that aims to find the best hyperplane that separates data points of different classes. It handles high-dimensional feature spaces well and, through kernel functions, non-linear decision boundaries, although other algorithms such as neural networks and decision trees can also model non-linear boundaries. What sets SVM apart is its margin-based approach: it tries to maximize the distance between the decision boundary and the closest data points of each class.

What are the advantages of using SVM for classification?

SVM has several advantages in classification, including:

- Effective in high-dimensional spaces.

- Works well with small and medium-sized datasets.

- Can handle non-linear decision boundaries.

- Can handle imbalanced datasets through class weighting.

- Has a strong mathematical foundation.

What are the limitations of SVM in classification?

SVM also has some limitations in classification, including:

- Can be sensitive to the choice of kernel function and its parameters.

- Can be computationally expensive for large datasets.

- The resulting model can be difficult to interpret.

- Does not provide probability estimates by default.

Can SVM be used for both binary and multi-class classification?

Yes, SVM can be used for both binary and multi-class classification. For binary classification, SVM tries to find the best hyperplane that separates the two classes. For multi-class classification, SVM uses techniques such as one-vs-one or one-vs-all to combine multiple binary classifiers.
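The two combination strategies differ in how many binary classifiers they train and how they turn those outputs into one label. In the pure-Python sketch below, the "trained" binary classifiers are hypothetical stand-ins: simple 1-D scoring functions built around class centers at 0, 5 and 10, where a real system would use the decision functions of binary SVMs.

```python
from collections import Counter
from itertools import combinations

def ovo_predict(x, pairwise):
    """One-vs-one: each pairwise classifier votes; the most-voted class wins.
    pairwise maps (a, b) -> f, where f(x) > 0 means class a, otherwise class b."""
    votes = Counter()
    for (a, b), f in pairwise.items():
        votes[a if f(x) > 0 else b] += 1
    return votes.most_common(1)[0][0]

def ovr_predict(x, per_class):
    """One-vs-all: one classifier per class; the highest score wins."""
    return max(per_class, key=lambda c: per_class[c](x))

# Hypothetical stand-ins for trained binary SVMs on 1-D data.
centers = {"a": 0.0, "b": 5.0, "c": 10.0}

def make_pair_scorer(ca, cb):
    # Positive on ca's side of the midpoint between the two centers (assumes ca < cb).
    return lambda x: (ca + cb) / 2 - x

def make_class_scorer(c):
    # Higher score the closer x is to this class's center.
    return lambda x: -abs(x - c)

pairwise = {(a, b): make_pair_scorer(centers[a], centers[b])
            for a, b in combinations(centers, 2)}
per_class = {c: make_class_scorer(centers[c]) for c in centers}

print(len(pairwise), len(per_class))
print(ovo_predict(9.0, pairwise), ovr_predict(1.0, per_class))
print(len(list(combinations(range(10), 2))))  # 45 pairwise classifiers for 10 classes
```

With three classes both strategies need three classifiers, but one-vs-one grows as k(k-1)/2 (45 for ten classes versus 10 for one-vs-all). Each one-vs-one classifier, however, trains on only two classes' data.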

What are some common kernel functions used in SVM classification?

Some common kernel functions used in SVM classification include:

- Linear kernel: used for linearly separable data.

- Polynomial kernel: used for non-linear data with polynomial decision boundaries.

- Radial basis function (RBF) kernel: used for non-linear data with complex decision boundaries.

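- Sigmoid kernel: computes tanh(γ(x·z) + r), which resembles a neural-network activation; it is used less often, partly because it is not a valid (positive semi-definite) kernel for all parameter values.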

How does SVM handle imbalanced datasets in classification?

SVM can handle imbalanced datasets by adjusting the class weights, and the data can also be rebalanced before training with techniques such as oversampling or undersampling. By adjusting the class weights, SVM gives more importance to the minority class during training. Oversampling and undersampling balance the class distribution by either duplicating minority-class instances or removing some instances from the majority class.
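Both remedies are easy to sketch in pure Python. The class weight formula below, n_samples / (n_classes × count_c), is the one scikit-learn documents for `class_weight="balanced"`; in a weighted SVM each class's weight multiplies the penalty C for errors on that class. The random oversampler is a deliberately simple illustration, and the function names and data are hypothetical.

```python
import random
from collections import Counter

def balanced_class_weights(labels):
    """weight_c = n_samples / (n_classes * count_c): rarer classes get larger weights."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * count) for c, count in counts.items()}

def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen samples of each smaller class until every class
    has as many samples as the largest one."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for c, count in counts.items():
        members = [x for x, label in zip(X, y) if label == c]
        for _ in range(target - count):
            X_out.append(rng.choice(members))
            y_out.append(c)
    return X_out, y_out

# Hypothetical imbalanced data: 90 samples of class 0, 10 of class 1.
X = [(float(i),) for i in range(100)]
y = [0] * 90 + [1] * 10

print(balanced_class_weights(y))  # class 1 is weighted 9x more than class 0
X_bal, y_bal = random_oversample(X, y)
print(Counter(y_bal))
```

Resample only the training split, never the validation or test data, so that evaluation still reflects the real class balance.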