Naive Bayes is a simple yet effective classification algorithm used in a wide range of machine-learning applications. It is a probabilistic classifier based on Bayes’ theorem that assigns each observation to the class with the highest posterior probability, which is known as the Maximum A Posteriori (MAP) decision rule. Naive Bayes is popular because of its simplicity, its speed, and its ability to handle high-dimensional data with ease.

The algorithm is particularly useful for text classification, spam filtering, sentiment analysis, and recommendation systems. Naive Bayes is easy to understand and implement, which makes it a good starting point for beginners in machine learning. Despite its simplicity, Naive Bayes has been shown to achieve competitive accuracy in many real-world applications, which makes it a popular choice among data scientists and machine learning practitioners.

In this article, we will dive deeper into the Naive Bayes algorithm, how it works, and its applications. We will explore the different types of Naive Bayes classifiers, the advantages and disadvantages of using Naive Bayes, and how to implement Naive Bayes in Python. By the end of this article, you will have a clear understanding of the Naive Bayes algorithm and its potential uses in your own machine-learning projects.

Overview of Naive Bayes Algorithm

Naive Bayes is a simple yet powerful algorithm for classification tasks. It is based on conditional probability and counting, and it assumes that the features are conditionally independent of each other given the class. Despite its simplicity, Naive Bayes has been used successfully in a wide range of applications, including spam filtering, sentiment analysis, and document classification.

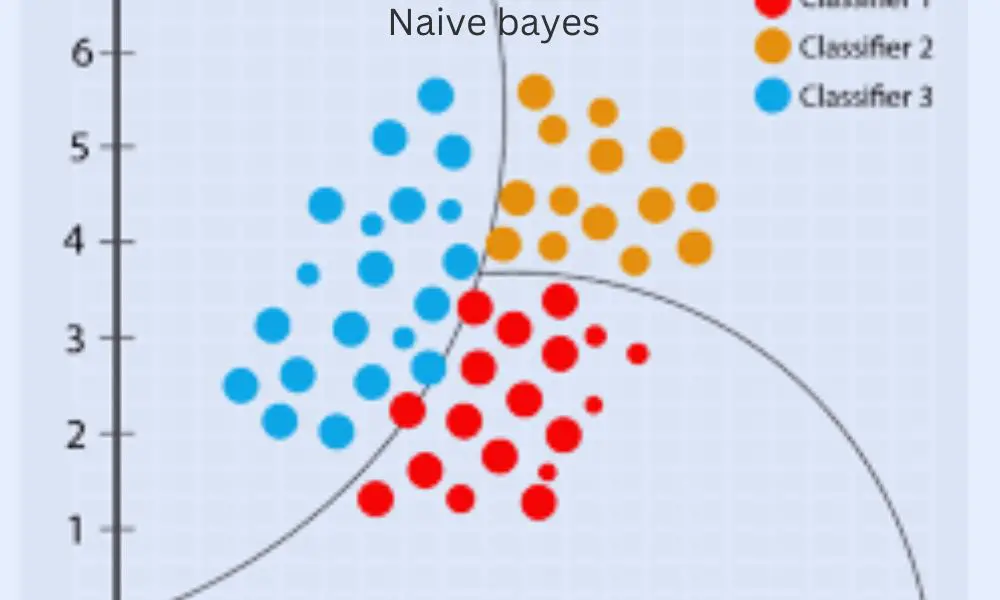

The Naive Bayes algorithm works by first building a probability table based on the training data. This table includes the probabilities of each class and the conditional probabilities of each feature given each class. To classify a new observation, the algorithm calculates the probability of each class given the features of the observation, and then selects the class with the highest probability.
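
To make the counting concrete, here is a minimal from-scratch sketch for categorical features. It assumes a small made-up weather dataset, and the `train` and `predict` helper names are hypothetical rather than part of any library. It builds the table of class priors and per-class value counts, then picks the class with the highest score.

```python
from collections import Counter, defaultdict

def train(rows, labels):
    """Build the probability table: class priors and per-class feature-value counts."""
    class_counts = Counter(labels)
    # value_counts[c][i][v] = number of class-c examples whose feature i equals v
    value_counts = defaultdict(lambda: defaultdict(Counter))
    for row, c in zip(rows, labels):
        for i, v in enumerate(row):
            value_counts[c][i][v] += 1
    n = len(labels)
    priors = {c: k / n for c, k in class_counts.items()}
    return priors, value_counts, class_counts

def predict(model, row):
    """Return the class with the highest (unnormalized) posterior for one observation."""
    priors, value_counts, class_counts = model
    scores = {}
    for c, prior in priors.items():
        score = prior
        for i, v in enumerate(row):
            # P(feature i = v | class c), estimated by counting (no smoothing here)
            score *= value_counts[c][i][v] / class_counts[c]
        scores[c] = score
    return max(scores, key=scores.get)

# Hypothetical data: (outlook, windy) -> decision
rows = [("sunny", "no"), ("sunny", "yes"), ("rainy", "yes"),
        ("rainy", "no"), ("overcast", "no"), ("overcast", "yes")]
labels = ["play", "stay", "stay", "stay", "play", "play"]

model = train(rows, labels)
print(predict(model, ("sunny", "no")))   # play
print(predict(model, ("rainy", "yes")))  # stay
```

Because this sketch does no smoothing, a feature value never seen with a class (here, "rainy" with "play") drives that class's score to zero. Practical implementations usually add a small pseudo-count, known as Laplace smoothing, to avoid this.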

One of the main advantages of Naive Bayes is its efficiency. It often needs relatively little training data, and because training amounts to counting, it is fast. Additionally, it can handle high-dimensional data and is relatively robust to irrelevant features.

However, Naive Bayes has some limitations as well. It assumes that the features are conditionally independent given the class, which is often not true in practice. It may also perform poorly when the classes overlap heavily or when the training data is imbalanced.

Overall, Naive Bayes is a simple yet effective classification algorithm that can be a good choice for many applications. Its simplicity and efficiency make it a popular choice in the machine learning community.

Types of Naive Bayes Algorithm

Naive Bayes is a simple yet effective classification algorithm that is widely used in machine learning. There are three main types of Naive Bayes algorithms: Gaussian Naive Bayes, Multinomial Naive Bayes, and Bernoulli Naive Bayes. In this section, we will discuss each of them briefly.

Gaussian Naive Bayes

Gaussian Naive Bayes is a classification algorithm that is used for continuous data. It assumes that, within each class, each feature follows a Gaussian (normal) distribution, and it estimates the mean and variance of each feature for each class. It then uses Bayes’ theorem to calculate the probability of each class for a given sample. It is a common choice when the features are real-valued measurements, such as sensor readings or physical dimensions.
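
A from-scratch NumPy sketch of these steps is shown below. It assumes a toy two-feature dataset invented for illustration, and the function names are hypothetical. scikit-learn's `GaussianNB` implements the same model with additional numerical safeguards.

```python
import numpy as np

def fit_gaussian_nb(X, y):
    """Estimate class priors and the per-class mean and variance of every feature."""
    classes = np.unique(y)
    priors = np.array([np.mean(y == c) for c in classes])
    means = np.array([X[y == c].mean(axis=0) for c in classes])
    # Tiny constant keeps variances positive for constant features (assumed value)
    variances = np.array([X[y == c].var(axis=0) for c in classes]) + 1e-9
    return classes, priors, means, variances

def predict_gaussian_nb(model, X):
    """Pick the class maximizing log prior + sum of per-feature Gaussian log-densities."""
    classes, priors, means, variances = model
    # Shape (n_samples, n_classes, n_features): log N(x_j | mean, variance)
    log_pdf = -0.5 * (np.log(2 * np.pi * variances)
                      + (X[:, None, :] - means) ** 2 / variances)
    # Naive assumption: per-feature log-likelihoods simply add up
    scores = np.log(priors) + log_pdf.sum(axis=2)
    return classes[np.argmax(scores, axis=1)]

# Toy data: two well-separated classes with two continuous features
X = np.array([[1.0, 2.0], [1.2, 1.8], [0.8, 2.2],
              [5.0, 6.0], [5.2, 5.8], [4.8, 6.2]])
y = np.array([0, 0, 0, 1, 1, 1])

model = fit_gaussian_nb(X, y)
pred = predict_gaussian_nb(model, np.array([[1.1, 2.1], [4.9, 5.9]]))
print(pred)
```

Working in log space turns the product of densities into a sum, which avoids numerical underflow when there are many features.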

Multinomial Naive Bayes

Multinomial Naive Bayes is a classification algorithm that is used for discrete count data, such as the number of times each word appears in a document. It assumes that the features are generated from a multinomial distribution and, for each class, estimates the probability of each feature (for example, each word) from its counts in that class. It then uses Bayes’ theorem to calculate the probability of each class for a given sample. It is commonly used in text classification, spam filtering, and sentiment analysis.
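
A short sketch using scikit-learn's `CountVectorizer` and `MultinomialNB`, assuming a tiny invented spam/ham corpus. Setting `alpha=1.0` applies add-one (Laplace) smoothing, which keeps words that never appeared in a class from zeroing out that class's probability.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Hypothetical toy corpus
docs = ["win cash prize now", "cheap prize win win",
        "meeting agenda for monday", "lunch meeting on monday"]
labels = ["spam", "spam", "ham", "ham"]

vectorizer = CountVectorizer()                   # word counts per document
X = vectorizer.fit_transform(docs)
clf = MultinomialNB(alpha=1.0).fit(X, labels)    # alpha=1.0: add-one (Laplace) smoothing

pred = clf.predict(vectorizer.transform(["win a cash prize", "monday meeting"]))
print(pred)
```

Only words in the vectorizer's vocabulary are counted, so words never seen during training are ignored at prediction time.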

Bernoulli Naive Bayes

Bernoulli Naive Bayes is a classification algorithm that is used for binary data. It assumes that the features are binary variables and calculates the probability of each feature for each class. It then uses Bayes’ theorem to calculate the probability of each class for a given sample. It is commonly used in text classification, where the presence or absence of a word is used as a feature.
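
A comparable sketch for the Bernoulli variant, again assuming a tiny invented corpus. `CountVectorizer(binary=True)` records only whether each word occurs, and `BernoulliNB` then models both presence and absence. As a result, a word that is missing from a document also counts as evidence, which the multinomial model ignores.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import BernoulliNB

# Hypothetical toy reviews
docs = ["great movie loved it", "loved the acting great fun",
        "boring movie hated it", "hated the plot boring"]
labels = ["pos", "pos", "neg", "neg"]

vectorizer = CountVectorizer(binary=True)   # 1 if the word occurs, 0 otherwise
X = vectorizer.fit_transform(docs)
clf = BernoulliNB(alpha=1.0).fit(X, labels)

pred = clf.predict(vectorizer.transform(["great fun", "boring plot"]))
print(pred)
```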

In summary, Naive Bayes is a powerful classification algorithm that is widely used in machine learning. The choice of the type of Naive Bayes algorithm depends on the type of data being used: Gaussian Naive Bayes is used for continuous data, Multinomial Naive Bayes for discrete count data, and Bernoulli Naive Bayes for binary data.

How Naive Bayes Algorithm Works

Naive Bayes is a probabilistic machine learning algorithm that can be used in a wide variety of classification tasks. Its simplicity and efficiency make it a popular choice for many applications, including filtering spam, classifying documents, sentiment prediction, and more.

At its core, Naive Bayes works by calculating the probability of a data point belonging to a certain class, given its features. This is done by applying Bayes’ theorem, which states that the probability of a hypothesis (in this case, a data point belonging to a certain class) given some observed evidence (the features of the data point) is proportional to the probability of the evidence given the hypothesis, multiplied by the prior probability of the hypothesis.
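
Written out, with $C_k$ for the $k$-th class and $x_1, \dots, x_n$ for the observed features (notation chosen here for illustration), Bayes’ theorem and the naive factorization give the following. The denominator is the same for every class, so it can be dropped when comparing classes. Implementations usually sum logarithms instead of multiplying many small probabilities, to avoid numerical underflow.

$$
P(C_k \mid x_1, \dots, x_n) = \frac{P(C_k)\, P(x_1, \dots, x_n \mid C_k)}{P(x_1, \dots, x_n)}
$$

$$
P(C_k \mid x_1, \dots, x_n) \propto P(C_k) \prod_{i=1}^{n} P(x_i \mid C_k),
\qquad
\hat{y} = \arg\max_{k} \Big[ \log P(C_k) + \sum_{i=1}^{n} \log P(x_i \mid C_k) \Big]
$$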

In other words, Naive Bayes scores each class by how often that class appears in the training data (its prior probability) and by how often the data point’s feature values appear among the training examples of that class. These conditional probabilities are estimated by counting: the number of training examples in a class that have a given feature value, divided by the total number of training examples in that class. For word-count features, the analogous estimate is the number of occurrences of a word in the documents of a class, divided by the total number of words in that class.
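
A worked version of the counting estimate as a small Python helper. Here P(value | class) is the number of training examples of the class that have the value, divided by the number of training examples of the class. The numbers are invented for illustration: suppose 40 training emails are labeled spam and 12 of them contain the word “free”. The `alpha` pseudo-count (Laplace smoothing when `alpha=1`) is a common refinement rather than part of the basic rule. It keeps a value that was never seen with a class from getting probability zero.

```python
from fractions import Fraction

def cond_prob(value_count_in_class, class_count, n_values, alpha=1):
    """Estimate P(feature = value | class) by counting, with add-alpha smoothing.

    value_count_in_class: training examples of the class that have this feature value
    class_count:          training examples of the class
    n_values:             number of distinct values the feature can take
    """
    return Fraction(value_count_in_class + alpha, class_count + alpha * n_values)

# Feature "contains 'free'" takes 2 values (present / absent)
print(cond_prob(12, 40, 2, alpha=0))  # raw estimate: 12/40 = 3/10
print(cond_prob(12, 40, 2))           # smoothed: 13/42
print(cond_prob(0, 40, 2, alpha=0))   # never seen with this class: 0
print(cond_prob(0, 40, 2))            # smoothed: 1/42
```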

One of the key assumptions of Naive Bayes is that the features of a data point are conditionally independent of each other given the class: once the class is known, the value of one feature is assumed to tell you nothing about the value of another. This is why it is called “naive”, since each feature contributes its own separate likelihood term to the final score. While this assumption rarely holds exactly in practice, Naive Bayes still performs surprisingly well on many real-world datasets, although the probabilities it outputs tend to be overconfident when features are correlated.
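
A small experiment illustrates one consequence of the naive assumption. It assumes scikit-learn's `GaussianNB` and randomly generated data. If the same feature is fed in twice (a perfectly correlated pair), Naive Bayes counts its evidence twice. With balanced classes, the log-odds exactly double, so the predicted probability becomes more extreme even though no new information was added.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

rng = np.random.default_rng(0)
# Hypothetical data: one informative feature, 50 samples per class
x = np.concatenate([rng.normal(0.0, 1.0, 50), rng.normal(2.0, 1.0, 50)])
y = np.array([0] * 50 + [1] * 50)

X_single = x.reshape(-1, 1)
X_dup = np.column_stack([x, x])       # the same feature twice: perfectly correlated

query = np.array([[1.5]])
p_single = GaussianNB().fit(X_single, y).predict_proba(query)[0, 1]
p_dup = GaussianNB().fit(X_dup, y).predict_proba(np.column_stack([query, query]))[0, 1]

def logit(p):
    return np.log(p / (1 - p))

print(round(p_single, 3), round(p_dup, 3))
print(logit(p_dup) / logit(p_single))  # 2.0 up to floating-point error
```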

Overall, Naive Bayes is a simple yet effective classification algorithm that can be used in a wide variety of applications. Its probabilistic nature and reliance on conditional probabilities make it a powerful tool for many machine learning tasks.

Advantages of Naive Bayes Algorithm

Naive Bayes is a popular classification algorithm that is widely used in machine learning and data science. Here are some of the key advantages of the Naive Bayes algorithm:

- Simple and easy to implement: The Naive Bayes classifier is simple to implement and needs little computation. Training essentially amounts to counting, or computing per-class means and variances, in a single pass over the data. It can be used for both binary and multiclass classification tasks.

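- Handles missing data reasonably well: Because each feature contributes its own term, a feature that is missing at prediction time can simply be left out of the product, which is equivalent to marginalizing it out. Common implementations such as scikit-learn’s do not accept missing values directly, though, so this usually has to be handled in your own code or through imputation.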

- Efficient on large datasets: Naive Bayes is efficient on large datasets because its time and space complexity are low. Training takes a single pass over the data, O(n*d) for n training examples, and classifying one query costs O(d*c), where d is the query vector’s dimension and c is the total number of classes.

- Performs well with high dimensional data: Because each feature is modeled separately, the number of parameters grows only linearly with the number of features, so the algorithm copes well with large feature spaces such as text vocabularies.

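- Works with categorical and numerical data: Different variants cover different feature types, for example Multinomial or Bernoulli Naive Bayes for discrete features and Gaussian Naive Bayes for continuous ones. Mixed data usually requires combining variants or discretizing the continuous features.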
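
To illustrate the per-query cost, the sketch below assumes scikit-learn’s `MultinomialNB` and random count data with made-up sizes. Once trained, the model is a c-by-d table of log-probabilities (`feature_log_prob_`) plus c log priors (`class_log_prior_`). Scoring a query is therefore one vector-matrix product, which is O(d*c) work.

```python
import numpy as np
from sklearn.naive_bayes import MultinomialNB

rng = np.random.default_rng(0)
n, d, c = 200, 1000, 5                  # hypothetical sizes: samples, features, classes
X = rng.poisson(0.1, size=(n, d))       # mostly-zero count features
y = rng.integers(0, c, size=n)

clf = MultinomialNB().fit(X, y)         # training: one pass over the counts

# Scoring a query: (1 x d) @ (d x c) plus c log priors -> O(d * c) operations
query = X[:1]
scores = query @ clf.feature_log_prob_.T + clf.class_log_prior_
manual = clf.classes_[np.argmax(scores, axis=1)]
print(manual, clf.predict(query))
```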

In summary, Naive Bayes is a simple yet effective classification algorithm with a number of advantages. It is easy to implement, can cope with missing features, is efficient on large datasets, performs well with high-dimensional data, and has variants for both categorical and numerical data.

Disadvantages of Naive Bayes Algorithm

While Naive Bayes is a simple and effective classification algorithm, it does have some disadvantages that should be considered when deciding whether to use it for a particular task.

1. Assumption of Independence

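One of the main assumptions of Naive Bayes is that all features are conditionally independent of each other given the class. This is often not the case in real-world scenarios, where features may be correlated or dependent on each other, and correlated evidence is then effectively counted more than once. This mainly makes the predicted probabilities overconfident, and it can also lead to inaccurate classifications.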

2. Sensitivity to Outliers

Naive Bayes can be sensitive to outliers, particularly the Gaussian variant, whose per-class mean and variance estimates can be pulled far off by a few extreme values, leading to incorrect classifications. It is worth inspecting the data and handling outliers, for example by removing, clipping, or transforming them, before applying Gaussian Naive Bayes.

3. Limited Expressive Power

Naive Bayes has limited expressive power compared to other classification algorithms such as decision trees or neural networks. It may not be able to capture complex relationships between features and target variables. This can lead to lower accuracy in some cases.
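
A minimal illustration, assuming scikit-learn’s `BernoulliNB`, uses XOR, the textbook example of a relationship that only exists between features: the label is 1 exactly when the two binary features differ. Each feature on its own is equally common in both classes. Naive Bayes only looks at features one at a time given the class, so it assigns every input a posterior of 0.5 and cannot separate the classes, whereas a decision tree can represent XOR with two levels of splits.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB

# XOR: the label is 1 exactly when the two binary features differ
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y = np.array([0, 1, 1, 0])

clf = BernoulliNB().fit(X, y)
# Each feature is "on" in half the examples of each class, so the per-feature
# likelihoods are identical for both classes and every posterior is 0.5
print(clf.predict_proba(X))
```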

4. Imbalanced Data

Naive Bayes does not require balanced classes, but it estimates the class priors from the training data, so the priors reflect whatever imbalance the data contains. If one class has significantly more samples than the others, the prior pushes predictions toward that majority class, and the likelihood estimates for rare classes rest on only a few examples, so minority classes may be predicted poorly.
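
The following sketch shows how the priors can be adjusted. It assumes scikit-learn’s `MultinomialNB` and invented count data. By default, `class_log_prior_` is estimated from the class frequencies, while `fit_prior=False` switches to uniform priors. The `class_prior` parameter accepts explicit values instead. Whether uniform priors are appropriate depends on the cost of each kind of error. Resampling and scikit-learn’s `ComplementNB`, which its documentation describes as suited to imbalanced data, are other options.

```python
import numpy as np
from sklearn.naive_bayes import MultinomialNB

# Hypothetical imbalanced data: 90 samples of class 0, 10 of class 1
rng = np.random.default_rng(0)
X = rng.poisson(1.0, size=(100, 20))
y = np.array([0] * 90 + [1] * 10)

learned = MultinomialNB().fit(X, y)                 # priors from class frequencies
uniform = MultinomialNB(fit_prior=False).fit(X, y)  # equal prior for every class

print(np.exp(learned.class_log_prior_))
print(np.exp(uniform.class_log_prior_))
```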

5. Handling of Continuous Variables

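The categorical and multinomial variants of Naive Bayes assume that features are discrete. Continuous variables must either be discretized into categories, which can lose information and accuracy, or be modeled with a parametric distribution as in Gaussian Naive Bayes, whose normality assumption may fit skewed or multimodal features poorly.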
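
The two routes for a continuous feature can be compared in a short sketch, assuming scikit-learn and a synthetic one-feature dataset. `KBinsDiscretizer` turns values into equal-width bin indices that `CategoricalNB` treats as unordered categories, so the ordering between bins is lost. `GaussianNB` instead models the raw values with a per-class normal distribution. The choice of 5 bins is arbitrary and only for illustration.

```python
import numpy as np
from sklearn.naive_bayes import CategoricalNB, GaussianNB
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import KBinsDiscretizer

rng = np.random.default_rng(0)
# Hypothetical continuous feature: class 0 centred at 0, class 1 centred at 3
X = np.concatenate([rng.normal(0, 1, (100, 1)), rng.normal(3, 1, (100, 1))])
y = np.array([0] * 100 + [1] * 100)

# Route 1: discretize into equal-width bins, then treat each bin index as a category
binned = make_pipeline(
    KBinsDiscretizer(n_bins=5, encode="ordinal", strategy="uniform"),
    CategoricalNB(),
).fit(X, y)

# Route 2: keep the values continuous and fit a per-class Gaussian
gaussian = GaussianNB().fit(X, y)

query = np.array([[-0.5], [3.5]])
print(binned.predict(query), gaussian.predict(query))
```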

Overall, while Naive Bayes is a simple and effective algorithm, it is important to consider its limitations and whether it is suitable for the specific task at hand.

Applications of Naive Bayes Algorithm

Naive Bayes is a simple yet effective classification algorithm that has a wide range of applications in various fields. In this section, we will discuss some of the popular applications of the Naive Bayes algorithm.

Email Spam Filtering

One of the most common applications of Naive Bayes is email spam filtering. With the increasing amount of spam emails, it has become essential to filter out the spam from the legitimate emails. Naive Bayes can be used to classify emails as spam or not spam based on the content of the email. The algorithm analyzes the words and phrases in the email and calculates the probability of the email being spam or not.

News Article Classification

Naive Bayes can also be used for news article classification. News articles can be classified into different categories such as sports, politics, entertainment, etc. Naive Bayes can be trained on a dataset of news articles and their respective categories. Once trained, the algorithm can classify new news articles into their respective categories based on the words and phrases used in the article.
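
A minimal sketch of that workflow, assuming scikit-learn and a six-sentence toy corpus invented for illustration. A real system would need far more data. `MultinomialNB` handles the three categories directly, computing a score for each class and picking the largest.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Hypothetical toy training set with three categories
articles = [
    "the team won the match in extra time",
    "the striker scored twice in the final",
    "parliament passed the new budget bill",
    "the minister announced an election date",
    "the film premiered at the festival",
    "the singer released a new album",
]
categories = ["sports", "sports", "politics", "politics",
              "entertainment", "entertainment"]

# Word counts feed directly into a multinomial Naive Bayes classifier
model = make_pipeline(CountVectorizer(), MultinomialNB())
model.fit(articles, categories)

pred = model.predict(["the striker won the final match", "a new election bill"])
print(pred)
```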

Sentiment Analysis

Sentiment analysis is another popular application of Naive Bayes. It involves analyzing the sentiment or emotion behind a piece of text such as a tweet or a product review. Naive Bayes can be trained on a dataset of text and their respective sentiments such as positive, negative, or neutral. Once trained, the algorithm can analyze new text and predict the sentiment behind it.

In conclusion, Naive Bayes is a versatile algorithm that can be used in various fields such as email spam filtering, news article classification, and sentiment analysis. It is a simple yet effective algorithm that can provide accurate results with minimal computational resources.

Frequently Asked Questions

What is Naive Bayes algorithm and how does it work?

Naive Bayes algorithm is a probabilistic classification algorithm that is based on Bayes’ theorem. It assumes that the features are conditionally independent of each other given the class label. It works by calculating the probability of each class given the input features and selecting the class with the highest probability as the predicted class.

How is Naive Bayes algorithm used in machine learning?

Naive Bayes algorithm is used in machine learning for classification tasks such as spam detection, sentiment analysis, and document classification. It is particularly useful for text classification tasks where the number of features is large and the data is sparse.

What are the advantages of using Naive Bayes algorithm?

Some of the advantages of using the Naive Bayes algorithm are its simplicity, efficiency, and ability to handle high-dimensional data. It often needs less training data than more flexible classification algorithms to reach reasonable accuracy, and it can be fairly tolerant of noisy or irrelevant features.

Can Naive Bayes algorithm handle multi-class classification?

Yes. Naive Bayes handles multi-class classification natively. It estimates a prior and class-conditional feature probabilities for every class, computes a posterior score for each one, and predicts the class with the highest score. Unlike inherently binary classifiers, it does not need a one-vs-all scheme of separate binary models.

What are some real-world applications of Naive Bayes algorithm?

Naive Bayes algorithm is widely used in various industries such as finance, healthcare, and e-commerce. Some of the applications include email spam detection, sentiment analysis of social media data, and product recommendation systems.

What are the assumptions made by Naive Bayes algorithm?

The main assumption made by Naive Bayes algorithm is the conditional independence assumption, which assumes that the features are conditionally independent of each other given the class label. This assumption may not hold true in some cases, but Naive Bayes algorithm can still perform well in practice.