The world of machine learning has witnessed remarkable advancements in generative models, and one of the most captivating innovations is Deep Generative Adversarial Networks (Deep GANs).

In this comprehensive guide, we will delve into the intricacies of Deep GANs, exploring their architecture, training process, and applications.

By the end of this article, you’ll have a solid understanding of this cutting-edge technology and its immense potential.

Understanding Deep Generative Adversarial Networks (Deep GANs)

What are Deep GANs?

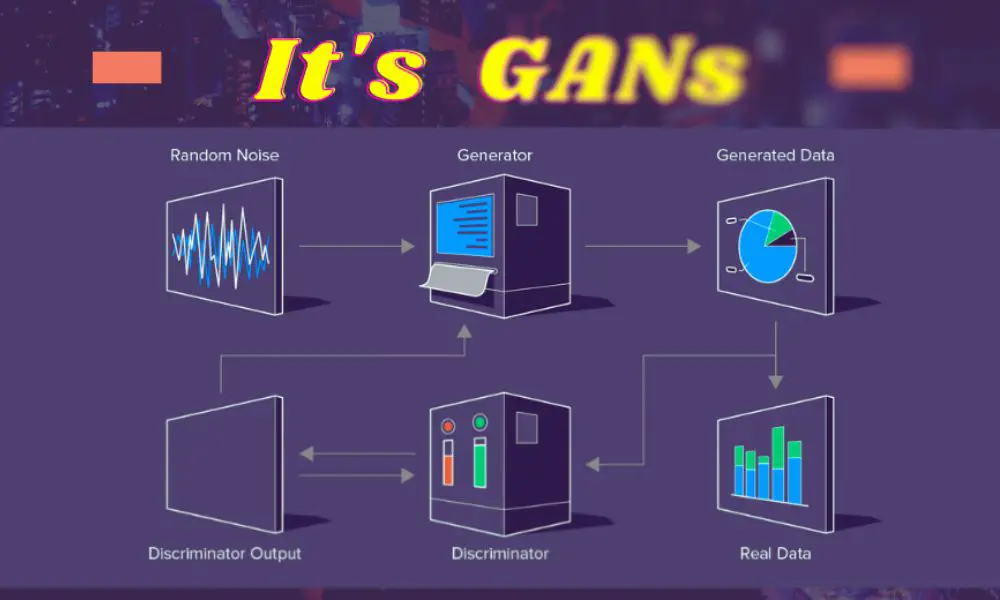

Deep Generative Adversarial Networks, or Deep GANs for short, are a subclass of generative models based on the GAN framework. They consist of two neural networks, the generator and the discriminator, competing against each other in a zero-sum game.

The generator aims to create realistic data instances, while the discriminator’s objective is to distinguish between real and generated data. When training succeeds, this adversarial setup can produce high-quality synthetic data.

Key Components of Deep GANs

1. Generator Network

The generator network takes random noise as input and generates data samples that resemble the real data distribution. It typically consists of transposed convolutional (often called "deconvolutional") layers that progressively upsample the input, and batch normalization is commonly employed to stabilize the training process. The generator’s architecture is crucial in determining the quality of the generated outputs.

2. Discriminator Network

The discriminator network evaluates the authenticity of the data it receives, classifying it as real or generated. It also consists of convolutional layers, with the final output being a probability score representing the likelihood of the input being real. The discriminator’s ability to distinguish between real and fake data improves over time as it competes with the generator.
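To make the generator's upsampling concrete, here is a minimal pure-Python sketch of a single-channel 1D transposed convolution, the operation behind "deconvolutional" layers. It assumes no padding and a hand-picked kernel; real generators use learned multi-channel 2D kernels (for example, PyTorch's `torch.nn.ConvTranspose2d`) followed by batch normalization and activations.

```python
def conv_transpose_1d(x, kernel, stride=2):
    """Naive single-channel 1D transposed convolution with no padding.

    Each input value adds a scaled copy of the kernel to the output,
    shifted by `stride` positions per input element, so the output has
    length (len(x) - 1) * stride + len(kernel).
    """
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, value in enumerate(x):
        for j, weight in enumerate(kernel):
            out[i * stride + j] += value * weight
    return out


features = [1.0, 2.0]     # stand-in for a tiny feature map derived from noise
kernel = [1.0, 1.0, 1.0]  # hypothetical weights; a real layer learns these
print(conv_transpose_1d(features, kernel))  # [1.0, 1.0, 3.0, 2.0, 2.0]
```

Each input value spreads its influence over several output positions, which is how a small noise-derived tensor grows into a larger output.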

The Adversarial Process

Deep GANs use an adversarial process to train the generator and discriminator together, usually by alternating updates between the two networks. The generator attempts to generate data that can fool the discriminator, while the discriminator aims to correctly identify real and generated data. In the idealized case, this iterative process approaches an equilibrium in which the generator’s samples are indistinguishable from real data and the discriminator can do no better than chance.
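For reference, the original GAN paper writes this adversarial game as a single minimax objective, where G maps noise z drawn from a prior p_z to samples and D(x) is the discriminator's estimated probability that x is real:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]

% For a fixed generator whose samples have density p_g, the best discriminator is
D^{*}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}

% At the global optimum p_g = p_data, so D^{*}(x) = 1/2 for every x.
```

The second expression shows why an optimal discriminator outputs 1/2 everywhere once the generator matches the data distribution: there is nothing left to tell apart.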

Loss Functions in Deep GANs

1. Generator Loss

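The generator’s objective is to minimize the chances of the discriminator correctly classifying generated data as fake. In the original formulation, the generator minimizes log(1 - D(G(z))), where G(z) is a sample generated from noise z and D(·) is the discriminator’s estimated probability that its input is real. In practice, it usually maximizes log D(G(z)) instead, that is, the log-likelihood of the discriminator’s incorrect classification. This "non-saturating" version gives the generator stronger gradients early in training, encouraging it to produce more convincing samples.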

2. Discriminator Loss

Conversely, the discriminator’s objective is to maximize its ability to distinguish between real and generated data. It aims to classify real data as real and generated data as fake, which is accomplished by minimizing the binary cross-entropy (negative log-likelihood) of its predictions.
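A minimal sketch of both losses in plain Python, assuming the discriminator outputs a raw logit that a sigmoid turns into a probability of "real". The generator loss shown is the non-saturating variant (target label 1 for generated samples). The helper names are illustrative; frameworks provide equivalent built-ins such as PyTorch's `BCEWithLogitsLoss`.

```python
import math


def sigmoid(logit):
    """Map a discriminator logit to a probability that the input is real."""
    return 1.0 / (1.0 + math.exp(-logit))


def bce(prob, target, eps=1e-12):
    """Binary cross-entropy (negative log-likelihood) for one prediction."""
    prob = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(real_logits, fake_logits):
    """D wants real samples labeled 1 and generated samples labeled 0."""
    real = [bce(sigmoid(l), 1.0) for l in real_logits]
    fake = [bce(sigmoid(l), 0.0) for l in fake_logits]
    return sum(real) / len(real) + sum(fake) / len(fake)


def generator_loss(fake_logits):
    """Non-saturating G loss: G wants D to label its samples as real (1)."""
    losses = [bce(sigmoid(l), 1.0) for l in fake_logits]
    return sum(losses) / len(losses)
```

With a confident, correct discriminator (real logit 4, fake logit -4), the discriminator loss is small (about 0.036) while the generator loss is large (about 4.02); when the discriminator is at chance (both logits 0), its loss is 2 log 2, about 1.386.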

Deep GAN Architecture

Deep GANs leverage deep convolutional architectures to generate realistic data. The generator typically uses transposed convolutions (also known as deconvolutions) to upsample noise into an image, while the discriminator uses strided convolutions to reduce an image to a single real-versus-fake score. Batch normalization is commonly used to help stabilize training and speed up convergence.
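The shape arithmetic behind such an architecture can be checked with a few lines of Python. This sketch assumes DCGAN-style layer settings (an initial layer that turns a 1x1 latent map into 4x4, then kernel size 4, stride 2, padding 1 for each upsampling layer); these values are illustrative rather than required.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def conv_out(size, kernel, stride, padding):
    """Output size of an ordinary strided convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# Generator: (kernel, stride, padding) per layer, assumed DCGAN-style values.
generator_layers = [(4, 1, 0)] + [(4, 2, 1)] * 4
size = 1  # latent vector treated as a 1x1 spatial map
generator_sizes = [size]
for kernel, stride, padding in generator_layers:
    size = conv_transpose_out(size, kernel, stride, padding)
    generator_sizes.append(size)
print(generator_sizes)  # [1, 4, 8, 16, 32, 64]

# Discriminator: strided convolutions mirror the generator and halve the size,
# then a final 4x4 convolution collapses the map to a single score.
disc_sizes = [64]
for _ in range(4):
    disc_sizes.append(conv_out(disc_sizes[-1], 4, 2, 1))
disc_sizes.append(conv_out(disc_sizes[-1], 4, 1, 0))
print(disc_sizes)  # [64, 32, 16, 8, 4, 1]
```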

Training Deep GANs

Training Deep GANs can be challenging due to the delicate balance between the generator and discriminator. To achieve successful training, several techniques are employed.

Challenges in Training Deep GANs

Training Deep GANs is notoriously difficult due to issues such as mode collapse, where the generator produces only a limited variety of data, and vanishing gradients, which occur when the discriminator becomes so confident that it gives the generator almost no learning signal. Addressing these challenges requires careful tuning of hyperparameters and architectural modifications.
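The vanishing-gradient problem can be seen directly in the generator loss. The sketch below compares the gradient, with respect to the discriminator's logit a (where D(G(z)) = sigmoid(a)), of the original loss log(1 - D(G(z))) and of the commonly used non-saturating loss -log D(G(z)). The logit value of -6 is an arbitrary example of a discriminator that confidently rejects a fake.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def grad_saturating(a):
    # d/da log(1 - sigmoid(a)) = -sigmoid(a): tiny when D rejects the fake
    return -sigmoid(a)


def grad_non_saturating(a):
    # d/da [-log sigmoid(a)] = -(1 - sigmoid(a)): close to -1 in the same case
    return -(1.0 - sigmoid(a))


a = -6.0  # D(G(z)) is about 0.0025: the fake is confidently rejected
print(f"D(G(z))             = {sigmoid(a):.4f}")              # 0.0025
print(f"saturating grad     = {grad_saturating(a):.4f}")      # -0.0025
print(f"non-saturating grad = {grad_non_saturating(a):.4f}")  # -0.9975
```

When the discriminator wins easily, the original loss passes back almost no gradient, while the non-saturating loss still provides a strong signal.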

Data Preprocessing and Augmentation

Data preprocessing plays a crucial role in the success of Deep GANs. Proper normalization and scaling of data contribute to smoother convergence during training; for example, pixel values are commonly scaled to [-1, 1] to match a generator whose output layer uses tanh. Additionally, data augmentation techniques, such as flipping and rotation, help expand the dataset, providing more diverse examples for the GAN to learn from.
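A minimal sketch of both steps on a tiny image stored as nested lists, assuming 8-bit pixel values (0 to 255) and a generator whose output layer uses tanh, so real images are scaled into the same [-1, 1] range. In practice, libraries such as torchvision provide these transforms; whether a flip is a safe augmentation depends on the data (it would corrupt images of text, for example).

```python
def scale_to_tanh_range(image, max_value=255.0):
    """Map raw pixel values in [0, max_value] to [-1, 1], the range of tanh."""
    return [[2.0 * p / max_value - 1.0 for p in row] for row in image]


def horizontal_flip(image):
    """Mirror a 2D image (a list of rows) from left to right."""
    return [list(reversed(row)) for row in image]


image = [[0, 128, 255],
         [255, 128, 0]]  # tiny hypothetical 2x3 grayscale image
scaled = scale_to_tanh_range(image)
augmented = [scaled, horizontal_flip(scaled)]  # original plus its mirror image
```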

Mini-batch Training

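Training GANs on large datasets can be computationally expensive. Mini-batch training, where only a subset of the data is used in each iteration, keeps each update cheap. It also makes it easy to interleave generator and discriminator updates, for example one generator step after every k discriminator steps, which is a common way to keep the two networks in balance.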
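The sketch below shows the bookkeeping side of mini-batch GAN training without any real networks: it shuffles the data into mini-batches and records when each network would be updated. The parameter `d_steps_per_g_step` is a hypothetical name for the practice of taking several discriminator (or critic) steps per generator step; the original GAN paper calls this k, and WGAN used five critic steps per generator step.

```python
import random


def minibatches(data, batch_size, rng):
    """Yield shuffled mini-batches that cover every example once per epoch."""
    indices = list(range(len(data)))
    rng.shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]


def training_schedule(data, batch_size, d_steps_per_g_step, seed=0):
    """Return the sequence of (network, batch size) updates for one epoch.

    The discriminator is updated on every real mini-batch; the generator is
    updated (on a fresh noise batch of the same size) after every
    `d_steps_per_g_step` discriminator updates.
    """
    rng = random.Random(seed)
    schedule = []
    for step, batch in enumerate(minibatches(data, batch_size, rng), start=1):
        schedule.append(("D", len(batch)))
        if step % d_steps_per_g_step == 0:
            schedule.append(("G", len(batch)))
    return schedule


print(training_schedule(list(range(10)), batch_size=4, d_steps_per_g_step=1))
# [('D', 4), ('G', 4), ('D', 4), ('G', 4), ('D', 2), ('G', 2)]
```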

GAN-specific Training Techniques

Several variations of GANs have been proposed to address specific challenges. Notably, Wasserstein GAN and Least Squares GAN replace the original loss function to make training more stable and less prone to vanishing gradients. Progressive Growing of GANs starts training at a low resolution and gradually adds layers to both networks, increasing the size of generated images step by step, which leads to higher-resolution and more realistic outputs.
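For comparison with the standard cross-entropy losses, here is a minimal sketch of the Wasserstein GAN and Least Squares GAN objectives, assuming the critic or discriminator outputs for a mini-batch have already been computed. WGAN also requires the critic to be Lipschitz-continuous; the sketch shows the original weight-clipping approach (clip value 0.01), which later work such as WGAN-GP replaced with a gradient penalty. The LSGAN version uses the common 0/1 target coding.

```python
def mean(values):
    return sum(values) / len(values)


# Wasserstein GAN: the critic outputs unbounded scores, not probabilities.
def wgan_critic_loss(real_scores, fake_scores):
    # The critic maximizes mean(real) - mean(fake); we minimize the negative.
    return mean(fake_scores) - mean(real_scores)


def wgan_generator_loss(fake_scores):
    # The generator wants the critic to score its samples highly.
    return -mean(fake_scores)


def clip_weights(weights, c=0.01):
    # Original WGAN: keep every critic weight inside [-c, c] after each update.
    return [max(-c, min(c, w)) for w in weights]


# Least Squares GAN: squared error against targets (real = 1, fake = 0).
def lsgan_discriminator_loss(real_out, fake_out):
    return (0.5 * mean([(d - 1.0) ** 2 for d in real_out])
            + 0.5 * mean([d ** 2 for d in fake_out]))


def lsgan_generator_loss(fake_out):
    # The generator pushes the discriminator's output on fakes toward 1.
    return 0.5 * mean([(d - 1.0) ** 2 for d in fake_out])
```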

Evaluating Deep GANs

Evaluating the performance of Deep GANs typically combines quantitative metrics with subjective evaluation by human observers.

Common Evaluation Metrics

1. Inception Score (IS)

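The Inception Score measures the quality and diversity of generated images. It feeds generated samples through a pre-trained Inception classifier: confident class predictions for individual images indicate visual quality, and a wide spread of predicted classes across all samples indicates diversity. Higher scores are better.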
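Formally, IS = exp(E_x[KL(p(y|x) || p(y))]), where p(y|x) is the classifier's predicted label distribution for a generated image and p(y) is the average of those predictions over all generated images. The sketch below computes it from a list of already-computed class probabilities; obtaining them from an Inception network, and the common practice of averaging over several splits, are left out.

```python
import math


def inception_score(class_probs):
    """IS = exp(mean over samples of KL(p(y|x) || p(y))).

    `class_probs` holds one row per generated image: the classifier's
    predicted class distribution p(y|x), e.g. softmax outputs.
    """
    n = len(class_probs)
    num_classes = len(class_probs[0])
    # Marginal label distribution p(y), averaged over all generated samples.
    marginal = [sum(row[k] for row in class_probs) / n for k in range(num_classes)]
    kl_total = 0.0
    for row in class_probs:
        # Terms with p = 0 contribute nothing; q > 0 whenever p > 0.
        kl_total += sum(p * math.log(p / q) for p, q in zip(row, marginal) if p > 0)
    return math.exp(kl_total / n)
```

Three confident predictions spread over three classes score about 3 (the maximum for three classes), while identical predictions for every image score 1, the minimum.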

2. Fréchet Inception Distance (FID)

FID fits one Gaussian to the Inception feature representations of real images and another to those of generated images, then computes the Fréchet distance between the two. Lower FID values indicate better performance.
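The full formula is FID = ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2(Sigma_r Sigma_g)^(1/2)), where mu and Sigma are the mean and covariance of the Inception features of real (r) and generated (g) images. To stay dependency-free, this sketch assumes diagonal covariances, which turns the matrix square root into an elementwise one; real implementations use the full covariance of high-dimensional Inception features and a matrix square root (for example `scipy.linalg.sqrtm`).

```python
import math


def _mean_and_var(samples):
    """Per-dimension mean and population variance of a list of feature vectors.

    (Many implementations use the unbiased n - 1 estimate instead.)
    """
    n, dims = len(samples), len(samples[0])
    means = [sum(s[d] for s in samples) / n for d in range(dims)]
    variances = [sum((s[d] - means[d]) ** 2 for s in samples) / n for d in range(dims)]
    return means, variances


def fid_diagonal(real_features, fake_features):
    """Fréchet distance between two Gaussians with DIAGONAL covariances.

    With diagonal covariances the trace term reduces to
    sum(var_r + var_g - 2 * sqrt(var_r * var_g)) = sum((sd_r - sd_g) ** 2).
    """
    mu_r, var_r = _mean_and_var(real_features)
    mu_g, var_g = _mean_and_var(fake_features)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_r, mu_g))
    cov_term = sum(vr + vg - 2.0 * math.sqrt(vr * vg) for vr, vg in zip(var_r, var_g))
    return mean_term + cov_term
```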

3. Precision and Recall

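Precision and recall metrics compare the distribution of generated samples with that of real data. High precision implies that most generated samples resemble real data (fidelity), while high recall implies that the generated samples cover most of the variety in the real data (diversity). A generator suffering from mode collapse can therefore score high precision but low recall.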
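One published way to compute these metrics (Kynkäänniemi et al., 2019) approximates each distribution's support with k-nearest-neighbor balls: a generated sample counts toward precision if it falls inside the ball around some real sample, and a real sample counts toward recall if it falls inside the ball around some generated sample. The sketch below applies that idea to raw 2D points for illustration only; the published method works on deep image features.

```python
import math


def knn_radii(points, k):
    """Distance from each point to its k-th nearest *other* point in the set."""
    radii = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        radii.append(dists[k - 1])
    return radii


def fraction_covered(queries, refs, radii):
    """Fraction of queries inside at least one reference point's k-NN ball."""
    covered = sum(
        any(math.dist(q, r) <= rad for r, rad in zip(refs, radii)) for q in queries
    )
    return covered / len(queries)


def precision_recall(real, fake, k=2):
    precision = fraction_covered(fake, real, knn_radii(real, k))  # fidelity
    recall = fraction_covered(real, fake, knn_radii(fake, k))     # diversity
    return precision, recall


real = [(0, 0), (1, 0), (0, 1), (1, 1)]
collapsed = [(0, 0)] * 4  # a mode-collapsed generator that always outputs (0, 0)
print(precision_recall(real, collapsed))  # (1.0, 0.25)
```

The collapsed generator's samples all look real (precision 1.0), but they cover only one of the four real points (recall 0.25), which is the signature of mode collapse.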

Subjective Evaluation by Human Observers

Human observers play a crucial role in evaluating the perceived quality and realism of generated outputs. Human judgment provides valuable insights into the effectiveness of Deep GANs in generating realistic data.

Applications of Deep GANs

Deep GANs have found applications in a wide range of fields.

Image Generation and Synthesis

Deep GANs excel at generating high-quality images, enabling applications in art, design, and content creation.

Super-Resolution and Image-to-Image Translation

Deep GANs have shown strong performance at super-resolution (transforming low-resolution images into high-resolution counterparts) and at image-to-image translation (converting images between different domains).

Style Transfer and Domain Adaptation

Deep GANs can learn the underlying style of an image and apply it to another image, facilitating artistic style transfer and domain adaptation tasks.

Data Augmentation and Sample Generation

Deep GANs aid in augmenting datasets, increasing training sample sizes, and generating synthetic data for various machine-learning applications.

Text-to-Image Synthesis

Recent advancements in text-to-image synthesis using Deep GANs allow the generation of images based on textual descriptions.

Drug Discovery and Molecule Generation

Deep GANs have shown promise in drug discovery by generating novel molecular structures with desired properties.

Ethical Considerations and Challenges

The immense capabilities of Deep GANs also raise ethical concerns and pose challenges.

Deep GANs and Potential Misuse

The ability to generate highly realistic fake content, including deepfakes, can be misused for deception or misinformation.

The Issue of Fake Content and Deepfakes

The proliferation of fake content generated by Deep GANs raises concerns about trust, authenticity, and the spread of misinformation.

Bias and Fairness in Deep GANs

Deep GANs can inadvertently perpetuate biases present in the training data, leading to biased generated content.

Mitigating Ethical Concerns

Addressing these ethical challenges requires both research, such as methods for detecting generated content, and practical measures that ensure the responsible use of Deep GANs and their outputs.

Future Trends and Research Directions

As Deep GANs continue to evolve, several exciting trends and research directions are emerging.

Current State-of-the-Art in Deep GANs

Reviewing the state of the art in Deep GANs helps us understand the most recent advancements in the field.

Emerging Trends in Deep GANs

New variations and architectures are constantly being explored, paving the way for novel applications and improved performance.

Cross-Domain and Multi-Modal Generation

Future research is likely to focus on generating data across different domains and modalities, such as images and text.

Reinforcement Learning and GANs

Combining reinforcement learning with GANs presents exciting opportunities for more sophisticated and dynamic data generation.

Combining GANs with other Generative Models

The integration of GANs with other generative models could unlock novel approaches for data synthesis.

Conclusion

Unleashing the creative potential of Deep GANs has revolutionized the world of generative models. Their ability to generate realistic data with far-reaching applications in various fields makes them a powerful tool for innovation. As research continues and ethical considerations are carefully addressed, the future of Deep GANs promises to be filled with even more fascinating developments.

FAQ

What are Deep GANs, and how do they work?

Deep GANs are a subclass of generative models based on the GAN framework. They consist of a generator network that creates synthetic data and a discriminator network that evaluates the authenticity of the data. Both networks are trained together through an adversarial process, typically by alternating updates between them.

What challenges are associated with training Deep GANs?

Training Deep GANs can be challenging due to issues like mode collapse and vanishing gradients. Proper data preprocessing, mini-batch training, and specific GAN variations, such as Wasserstein GAN and Least Squares GAN, can help overcome these challenges.

How are Deep GANs evaluated?

Deep GANs are evaluated using metrics like Inception Score (IS), Fréchet Inception Distance (FID), and precision and recall. Subjective evaluation by human observers is also essential to assess the perceived quality and realism of generated outputs.

What are the applications of Deep GANs?

Deep GANs find applications in image generation, super-resolution, style transfer, data augmentation, text-to-image synthesis, and even drug discovery through molecule generation.

What ethical concerns arise with the use of Deep GANs?

Deep GANs raise ethical concerns related to the potential misuse of fake content and deepfakes, as well as the perpetuation of biases present in the training data. Responsible use and mitigation strategies are crucial to address these concerns.