In artificial intelligence, privacy and collaboration are paramount.

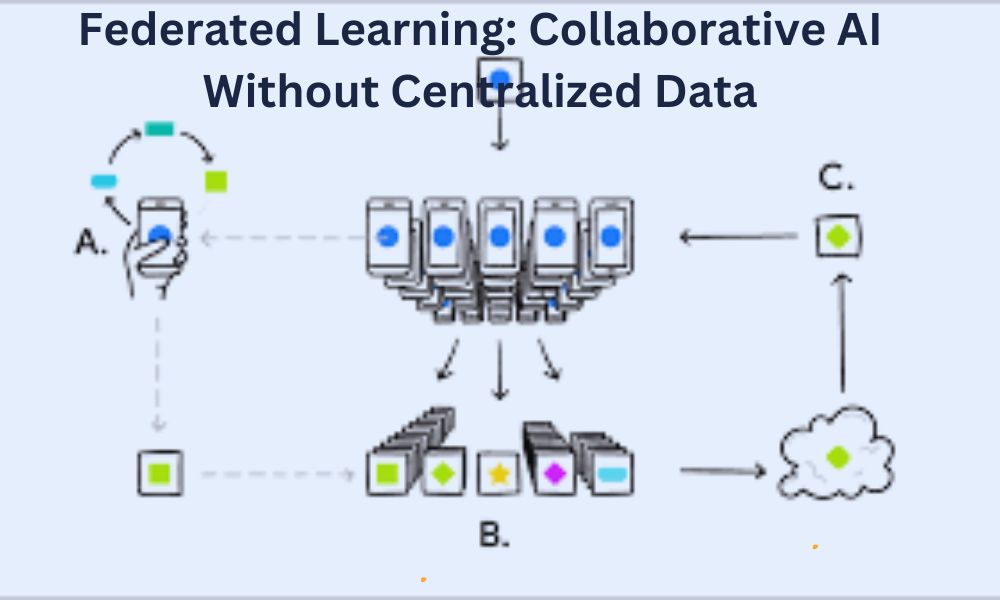

Federated Learning is a decentralized machine learning paradigm where models are trained collaboratively across devices without the need for raw data exchange.

This approach addresses privacy concerns and marks a significant departure from traditional centralized methods, which collect all training data in one place. In a world increasingly concerned with data security, Federated Learning offers a practical foundation for collaborative AI.

Instead of moving data to the model, Federated Learning moves the model to the data, changing how machine learning models can be trained without compromising data security.

This article explores the intricacies of Federated Learning: its advantages, challenges, real-world applications, and future trends.


Understanding Federated Learning

Core Principles

At its core, Federated Learning relies on localized training. A shared model is sent to individual devices and trained on each device's local data; only the resulting model updates, not the raw data, are transmitted back to a central server, which aggregates them into an improved shared model. The process repeats over many rounds, keeping raw data on-device while still enabling collaborative model improvement.
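To make the round trip concrete, here is a minimal pure-Python sketch of a federated training loop. The model (a hypothetical one-feature linear regression `y = w * x + b`), the helper names `local_update`, `server_round`, and `make_client_data`, and the simulated client data are illustrative assumptions, not part of any framework. Note that `local_update` sees a device's data but returns only a parameter delta.

```python
import random

def local_update(global_params, local_data, lr=0.1):
    """Runs on a device: one gradient step of mean squared error on local data.

    Returns only the parameter delta; the raw (x, y) pairs never leave here.
    """
    w, b = global_params
    n = len(local_data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in local_data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in local_data) / n
    return [-lr * grad_w, -lr * grad_b]

def server_round(global_params, client_datasets):
    """Runs on the server: collect each device's delta and apply the mean."""
    # In a real deployment each local_update call happens on a remote device;
    # this loop only simulates that exchange.
    deltas = [local_update(global_params, data) for data in client_datasets]
    mean_delta = [sum(d[i] for d in deltas) / len(deltas)
                  for i in range(len(global_params))]
    return [p + d for p, d in zip(global_params, mean_delta)]

def make_client_data(n, rng):
    """Hypothetical private data on one device: noiseless samples of y = 3x + 1."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1) for x in xs]

rng = random.Random(0)
clients = [make_client_data(20, rng) for _ in range(3)]

params = [0.0, 0.0]
for _ in range(200):
    params = server_round(params, clients)
print([round(p, 2) for p in params])
```

Because the simulated clients hold equal amounts of data, averaging their deltas matches one gradient step on the pooled data, so the parameters approach `w = 3`, `b = 1`; in a real deployment the server would only ever receive the deltas.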

Differentiation from Centralized Approaches

Unlike centralized approaches, which require pooling data in a central repository, Federated Learning decentralizes the training process. Devices holding local data each contribute to model training, creating a collaborative ecosystem without the need for a centralized data warehouse.

Advantages of Federated Learning

Privacy-Preserving Nature

Avoiding Raw Data Exchange

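In Federated Learning, raw data never leaves individual devices; only model updates traverse the network. This reduces the risk of data breaches and helps preserve user privacy, although model updates can still reveal some information about the underlying data, which is why the security measures discussed below matter.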

Localized Data Storage

By keeping data on local devices, Federated Learning supports the data-minimization principles found in privacy regulations and matches user expectations. This approach can simplify compliance while still allowing efficient model training.

Efficiency and Reduced Latency

Local Computation for Model Training

Federated Learning avoids massive raw-data transfers by performing training computation locally on each device. This streamlines data handling, and because the trained model also runs on the device, predictions can be served with low latency instead of waiting on a round trip to a server.

Mitigating Communication Challenges

Decentralization comes at a cost: Federated Learning introduces communication overhead, because model updates must be exchanged over many training rounds. Strategies like model compression and quantization reduce the size of each update, keeping collaboration efficient.
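As an illustration of quantization, the sketch below compresses a simulated model update from 32-bit floats to 8-bit integer codes using simple uniform (min-max) quantization. The function names, the 8-bit choice, and the random update are assumptions for the example; production systems may use different schemes.

```python
import random
import struct

def quantize(update, bits=8):
    """Map each float to an integer code in [0, 2**bits - 1] (uniform min-max scheme)."""
    levels = 2 ** bits - 1
    lo, hi = min(update), max(update)
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [min(levels, max(0, round((v - lo) / scale))) for v in update]
    return codes, lo, scale

def dequantize(codes, lo, scale):
    """Server side: approximate reconstruction of the original floats."""
    return [lo + c * scale for c in codes]

rng = random.Random(1)
update = [rng.gauss(0, 0.01) for _ in range(1000)]  # simulated model update

codes, lo, scale = quantize(update)
restored = dequantize(codes, lo, scale)

# Size accounting: 4-byte floats versus 1-byte codes plus lo and scale as floats.
float32_bytes = len(struct.pack(f"{len(update)}f", *update))
uint8_bytes = len(bytes(codes)) + len(struct.pack("2f", lo, scale))
max_error = max(abs(a - b) for a, b in zip(update, restored))
print(float32_bytes, uint8_bytes, max_error <= scale / 2 + 1e-12)
```

The payload shrinks from 4000 to 1008 bytes (1000 one-byte codes plus the two 4-byte values `lo` and `scale`), and each reconstructed value is off by at most half a quantization step.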

Scalability and Edge Computing

Large-Scale Distributed Systems

Federated Learning scales to large distributed systems because the server does not need every device in every round: a random subset of available clients is selected to train in each round. This enhances its applicability in scenarios involving a vast number of devices.
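A sketch of per-round client selection, in the style of the client fraction `C` used by Federated Averaging. The fraction, population size, and the helper name `sample_clients` are illustrative assumptions; real systems typically also filter for eligible devices before sampling.

```python
import random

def sample_clients(client_ids, fraction, rng, min_clients=1):
    """Pick a random subset of clients (without replacement) for one training round."""
    k = max(min_clients, round(fraction * len(client_ids)))
    k = min(k, len(client_ids))
    return rng.sample(client_ids, k)

rng = random.Random(42)
all_clients = [f"device-{i}" for i in range(100_000)]

for round_num in range(3):
    selected = sample_clients(all_clients, fraction=0.001, rng=rng)
    # Only these devices receive the model and do work in this round.
    print(f"round {round_num}: {len(selected)} devices, e.g. {selected[:2]}")
```

Per-round cost on the server then depends on the sample size (100 here) rather than on the total population.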

Edge Devices with Limited Resources

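The decentralized nature of Federated Learning also suits edge devices with limited resources: each device processes only its own data, and training can be scheduled for when a device is idle, charging, and on an unmetered network. This lets AI improve even in resource-constrained environments.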

Challenges in Federated Learning

Communication Overhead

Coordinating Models Across Devices

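Coordinating models across decentralized devices introduces communication overhead. Federated Averaging (FedAvg) addresses this challenge by having each device run several local training steps before sending an update, which reduces the number of communication rounds needed; the server then combines the returned models with an average weighted by each device's number of training examples.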
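The sketch below follows the structure of Federated Averaging as described by McMahan et al. (2017): each device runs several local gradient steps, and the server averages the resulting models weighted by how many examples each device holds. The linear model, learning rate, number of local steps, and client data are assumptions chosen to keep the example small.

```python
import random

def local_train(params, data, lr=0.1, local_steps=5):
    """Device side: several gradient steps on local data before communicating."""
    w, b = params
    n = len(data)
    for _ in range(local_steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return [w, b], n  # updated model plus the local example count

def fedavg_round(global_params, client_datasets):
    """Server side: average returned models, weighted by each client's example count."""
    results = [local_train(global_params, data) for data in client_datasets]
    total = sum(n for _, n in results)
    return [sum(p[i] * n for p, n in results) / total
            for i in range(len(global_params))]

rng = random.Random(7)
# Hypothetical clients with very different amounts of data (5, 50 and 200 samples).
clients = []
for size in (5, 50, 200):
    xs = [rng.uniform(-1, 1) for _ in range(size)]
    clients.append([(x, 3 * x + 1) for x in xs])

params = [0.0, 0.0]
for _ in range(100):
    params = fedavg_round(params, clients)
print([round(p, 3) for p in params])
```

Raising `local_steps` trades extra on-device computation for fewer communication rounds, which is how FedAvg reduces overhead.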

Strategies to Mitigate Communication Challenges

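Two key strategies mitigate communication challenges in Federated Learning: asynchronous communication, so the server does not have to wait for the slowest devices before making progress, and efficient compression algorithms, which shrink each update before it is sent.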
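One way to implement asynchronous communication is to let the server mix in each device's model as soon as it arrives, giving less weight to updates computed from an older model version. This sketch is loosely modeled on staleness-aware schemes such as FedAsync; the class name, the polynomial discount, and the `alpha` and `a` values are illustrative assumptions.

```python
def staleness_weight(staleness, alpha=0.5, a=0.5):
    """Mixing weight that decays polynomially as an update gets staler."""
    return alpha * (staleness + 1) ** (-a)

class AsyncServer:
    """Applies each device's model as soon as it arrives, without waiting for a full round."""

    def __init__(self, params):
        self.params = list(params)
        self.version = 0  # incremented every time an update is applied

    def pull(self):
        """A device downloads the current model and records which version it saw."""
        return list(self.params), self.version

    def push(self, client_params, base_version):
        """Mix in a device's trained model, discounted by how stale it is."""
        staleness = self.version - base_version
        mix = staleness_weight(staleness)
        self.params = [(1 - mix) * p + mix * c
                       for p, c in zip(self.params, client_params)]
        self.version += 1
        return mix

server = AsyncServer([0.0])
_, version_a = server.pull()  # device A starts from version 0
_, version_b = server.pull()  # device B also starts from version 0

mix_a = server.push([1.0], version_a)  # A finishes first: staleness 0
mix_b = server.push([1.0], version_b)  # B finishes later: staleness 1, smaller weight
print(mix_a, round(mix_b, 4), round(server.params[0], 4))
```

Device B's update still counts, but at about 0.354 instead of 0.5, because it was computed from a model that has since changed.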

Heterogeneity of Devices

Diversity in Capabilities and Data

Devices in a federated ecosystem differ in hardware capabilities, and their local data distributions often differ too (the data is not independent and identically distributed, or non-IID). Federated transfer learning and adaptive algorithms help address these disparities.
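The article does not name a specific adaptive algorithm; as one well-known example, FedProx (Li et al., 2020) adds a proximal term to each device's local objective so that devices with unusual (non-IID) data do not drift too far from the global model. The sketch shows only the local-training side for a hypothetical linear model; `mu`, the helper names, and the data are assumptions.

```python
import math

def fedprox_local_train(global_params, data, mu, lr=0.1, local_steps=20):
    """Local training on mean squared error plus the proximal term
    (mu / 2) * ||params - global_params||**2, which penalizes drifting
    away from the current global model."""
    gw, gb = global_params
    w, b = gw, gb
    n = len(data)
    for _ in range(local_steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n + mu * (w - gw)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n + mu * (b - gb)
        w, b = w - lr * grad_w, b - lr * grad_b
    return [w, b]

def drift(params, global_params):
    """Euclidean distance between a local model and the global model."""
    return math.dist(params, global_params)

# Hypothetical device whose data follows y = -2x + 5, unlike the global model.
skewed_data = [(x, -2 * x + 5) for x in (i / 10 - 1 for i in range(21))]
global_params = [3.0, 1.0]

plain = fedprox_local_train(global_params, skewed_data, mu=0.0)  # mu = 0: plain local gradient descent
prox = fedprox_local_train(global_params, skewed_data, mu=1.0)
print(round(drift(plain, global_params), 3), round(drift(prox, global_params), 3))
```

With `mu > 0` the skewed device still moves toward its own data, but its returned model stays closer to the global one, which stabilizes aggregation.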

Ensuring Fair and Effective Collaboration

Ensuring fair and effective collaboration among devices with different capabilities is crucial. Federated optimization techniques, such as weighting each device's update by the amount of data it trained on, contribute to balanced model improvements.

Security Concerns

Protection Against Malicious Attacks

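The decentralized nature of Federated Learning raises security concerns: a curious server could try to infer information from individual updates, and malicious participants could submit poisoned updates. Encryption, secure aggregation, and robust authentication mechanisms help protect against such attacks and preserve the integrity of the collaborative learning process.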
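The core trick behind secure aggregation can be shown with a toy version of pairwise masking, the idea used in protocols such as that of Bonawitz et al. (2017): each client hides its update under random masks that cancel out when the server sums all updates, so the server learns the total but not any individual contribution. This sketch assumes every client stays online and replaces the real protocol's key agreement and secret sharing (which handle dropouts) with a shared random generator; it is illustrative only and not secure.

```python
import random

MODULUS = 2 ** 32  # all arithmetic is modulo a fixed integer so masks cancel exactly

def pairwise_masks(client_ids, dim, rng):
    """Stand-in for key agreement: every pair of clients shares one random mask vector."""
    return {(i, j): [rng.randrange(MODULUS) for _ in range(dim)]
            for i in client_ids for j in client_ids if i < j}

def mask_update(client_id, update, client_ids, masks):
    """Client side: add masks shared with higher ids, subtract those shared with lower ids."""
    masked = list(update)
    for other in client_ids:
        if other == client_id:
            continue
        pair = (min(client_id, other), max(client_id, other))
        sign = 1 if client_id < other else -1
        masked = [(v + sign * m) % MODULUS for v, m in zip(masked, masks[pair])]
    return masked

def aggregate(masked_updates):
    """Server side: sum masked vectors; the pairwise masks cancel in the total."""
    dim = len(masked_updates[0])
    return [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]

# Hypothetical non-negative integer updates (e.g. after fixed-point encoding).
updates = {0: [5, 1, 7], 1: [2, 2, 2], 2: [10, 0, 3]}
client_ids = sorted(updates)
masks = pairwise_masks(client_ids, dim=3, rng=random.Random(123))

masked = {cid: mask_update(cid, u, client_ids, masks) for cid, u in updates.items()}
print(masked[0])                         # looks random to the server
print(aggregate(list(masked.values())))  # [17, 3, 12], the true sum
```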

Ensuring Integrity of Federated Learning

To maintain the integrity of Federated Learning, secure protocols must be in place. This includes secure model aggregation, tamper detection mechanisms, and continual monitoring for unusual activities.
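Continual monitoring can start with something as simple as checking update sizes, since a poisoned or tampered update is often much larger than honest ones. The sketch flags outliers using the modified z-score (median and median absolute deviation, with the conventional 3.5 cutoff); the helper names, threshold, and example updates are assumptions, and this check alone will not catch carefully crafted attacks.

```python
import math
import statistics

def l2_norm(vector):
    return math.sqrt(sum(v * v for v in vector))

def flag_suspicious(updates, threshold=3.5):
    """Flag clients whose update norm is an outlier by the modified z-score.

    Median and MAD are used because a few attackers cannot drag them
    around the way they could a mean and standard deviation.
    """
    norms = {cid: l2_norm(u) for cid, u in updates.items()}
    med = statistics.median(norms.values())
    mad = statistics.median(abs(n - med) for n in norms.values())
    mad = max(mad, 1e-12)  # avoid dividing by zero when most norms are identical
    return {cid for cid, n in norms.items()
            if 0.6745 * abs(n - med) / mad > threshold}

updates = {
    "a": [0.10, 0.20, -0.10],
    "b": [0.12, 0.18, -0.09],
    "c": [0.09, 0.21, -0.11],
    "d": [0.11, 0.19, -0.10],
    "mallory": [10.0, -20.0, 15.0],  # hypothetical scaled-up malicious update
}
print(flag_suspicious(updates))  # {'mallory'}
```

Flagged updates could then be dropped from aggregation or routed for closer inspection.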

Real-World Applications

Healthcare

Medical Research and Patient Data Analysis

Federated Learning plays a pivotal role in medical research and patient data analysis. Collaboration among hospitals, research institutions, and healthcare devices enables advances in research without pooling patient records, protecting patient privacy.

Ensuring Compliance with Healthcare Regulations

Strict healthcare regulations require privacy-preserving approaches. Federated Learning aligns with these regulations, enabling healthcare institutions to leverage AI while supporting compliance.

Financial Sector

Fraud Detection and Risk Assessment

In the financial sector, Federated Learning enhances fraud detection and risk assessment. Collaboration among financial institutions allows the development of robust models without exposing sensitive customer data.

Maintaining Confidentiality in Financial Data

Confidentiality is paramount in financial data. Federated Learning addresses this by ensuring that model updates, not raw financial data, are shared, safeguarding sensitive information.

Internet of Things (IoT)

Enhancing IoT Device Capabilities

Federated Learning enhances IoT devices through collaborative learning, with each device helping to improve a shared model. This approach is particularly beneficial where individual devices contribute to a collective intelligence without sharing sensitive data.

Privacy Considerations in IoT Environments

IoT environments demand stringent privacy considerations. Federated Learning aligns with these requirements, enabling IoT devices to learn collaboratively while preserving user privacy.

Federated Learning Frameworks and Technologies

TensorFlow Federated

Overview and Features

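TensorFlow Federated (TFF) is an open-source framework from Google for Federated Learning. It offers a high-level Federated Learning API for applying federated training to existing models and a lower-level Federated Core API for expressing new federated algorithms, along with a simulation runtime for experimenting with decentralized model training.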

Use Cases and Examples

TensorFlow Federated has been successfully applied in scenarios such as federated health prediction models and privacy-preserving keyboard prediction models.

PySyft

Exploring PySyft Capabilities

PySyft is a powerful library for privacy-preserving machine learning. Its capabilities include federated learning, homomorphic encryption, and multi-party computation.

Integrating PySyft with Popular Frameworks

PySyft integrates with popular machine learning frameworks such as PyTorch and TensorFlow, giving developers a versatile toolkit for privacy-preserving AI.

Future Trends and Developments

The evolution of Federated Learning is poised to shape the future of collaborative AI. Emerging technologies and frameworks promise to enhance its efficiency and applicability across diverse industries. The potential impact on the AI industry and society at large is substantial, paving the way for a more secure and collaborative approach to machine learning.

Privacy-Preserving Collaborative AI: A New Era Unveiled

Recapping the benefits and challenges of Federated Learning underscores its significance in privacy-preserving collaborative AI. This transformative approach ensures the advancement of machine learning models without compromising user privacy. As the industry evolves, Federated Learning stands as a testament to the power of decentralized collaboration in shaping the future of artificial intelligence.

Frequently Asked Questions (FAQs)

How does Federated Learning address privacy concerns in healthcare applications?

Federated Learning ensures privacy in healthcare by allowing models to be trained locally on patient data. Only model updates are shared, preventing the exchange of sensitive patient information. This aligns with healthcare regulations while fostering advancements in medical research.

Can Federated Learning be applied to small-scale IoT devices with limited resources?

Yes, with some care. Its decentralized nature and scalability make Federated Learning suitable for a variety of devices, and techniques such as smaller models, compressed updates, and scheduling training for idle periods keep the workload within each device's resource limits.

How does Federated Learning protect against malicious attacks in a decentralized environment?

Federated Learning systems can employ encryption, secure aggregation, and robust authentication mechanisms to protect against malicious attacks. These security measures help preserve the integrity of the collaborative learning process, even in a decentralized setting.

What are some real-world examples of Federated Learning applications in the financial sector?

Federated Learning is applied in the financial sector for fraud detection and risk assessment. Banks and financial institutions collaborate to improve models without sharing sensitive customer data, maintaining confidentiality while enhancing security measures.

How can PySyft be utilized to enhance privacy in machine learning?

PySyft offers privacy-preserving features, including federated learning, homomorphic encryption, and multi-party computation. Developers can leverage PySyft to bring these capabilities into popular machine learning frameworks, giving them a comprehensive toolkit for privacy-preserving AI.