In artificial intelligence, the integration of multiple sensory modalities has emerged as a key frontier.

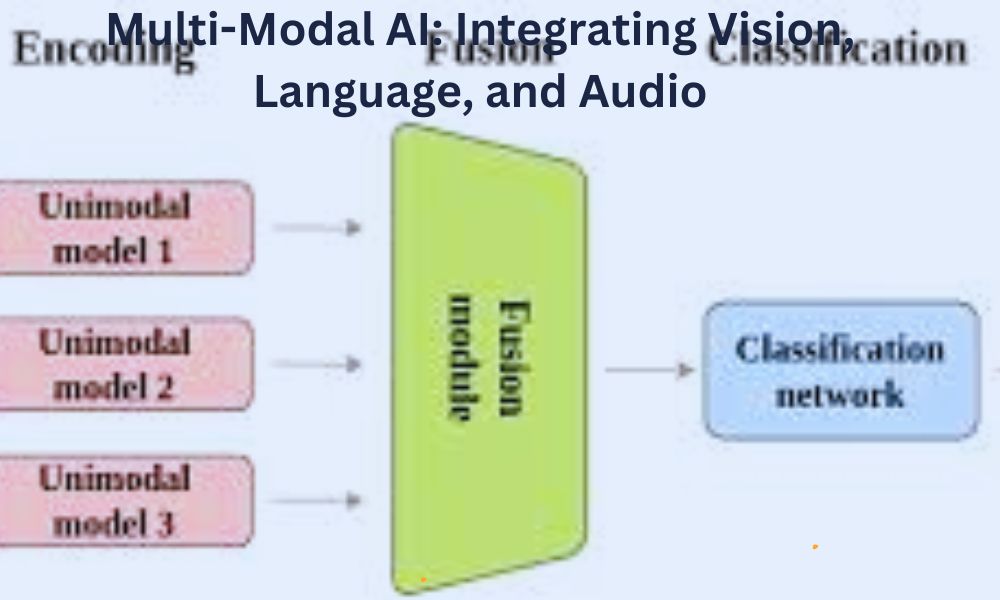

This article explores Multi-Modal AI, which combines vision, language, and audio inputs in a single system. By examining the challenges, recent advances, and real-world applications, we map out the possibilities that arise when these modalities converge.

In today's AI landscape, Multi-Modal AI is at the forefront of innovation. Combining vision, language, and audio gives a system richer context than any single modality provides, and it broadens the range of applications across industries.


Understanding Sensory Modalities

Definition of Sensory Modalities

Sensory modalities refer to the distinct channels through which humans and AI systems perceive information. In the context of Multi-Modal AI, the primary modalities include vision, language, and audio.

Vision as a Sensory Input

Vision, as a modality, involves the processing of visual data. Convolutional Neural Networks (CNNs) play a crucial role in deciphering images and videos, enabling machines to “see” and interpret the visual world.
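To make the idea of a CNN "seeing" more concrete, here is a minimal pure-Python sketch of the operation at the heart of a convolutional layer: sliding a small kernel over an image and taking a weighted sum at each position. The toy image, the hand-picked kernel, and the function name `conv2d` are illustrative assumptions. A real CNN learns its kernel values during training and stacks many such layers with nonlinearities in between.

```python
# Sketch of the core CNN operation. Like most deep-learning libraries, this
# computes cross-correlation (the kernel is not flipped), with no padding and stride 1.

def conv2d(image, kernel):
    """Return the 'valid' 2D cross-correlation of image with kernel (hypothetical helper)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw patch currently under the kernel
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Toy 4x4 grayscale image: dark left half, bright right half
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-picked vertical-edge detector; a trained CNN would learn its own values
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)
```

The resulting feature map responds strongly only in its middle column, where the dark-to-bright edge sits, which is the kind of low-level visual cue that deeper layers build upon.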

Language as a Critical Modality

Natural Language Processing (NLP) forms the backbone of language understanding. Using statistical and neural models, machines can comprehend and generate human-like language, bridging the gap between human communication and AI.
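NLP models do not read raw strings: text is first split into tokens, and each token is mapped to an integer ID that the model can embed. The sketch below assumes a deliberately simple word-level tokenizer and a hypothetical `<unk>` token for words missing from the vocabulary. Production systems usually rely on subword schemes such as byte-pair encoding instead.

```python
import re

def tokenize(text):
    """Lowercase and split into word tokens (a simple, assumed rule)."""
    return re.findall(r"[a-z0-9']+", text.lower())

def build_vocab(corpus):
    """Assign an integer ID to each new token; ID 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for sentence in corpus:
        for tok in tokenize(sentence):
            if tok not in vocab:
                vocab[tok] = len(vocab)
    return vocab

def encode(text, vocab):
    """Map text to token IDs, falling back to <unk> for out-of-vocabulary words."""
    return [vocab.get(tok, vocab["<unk>"]) for tok in tokenize(text)]

# Tiny illustrative corpus
corpus = ["A dog runs on the beach.", "The dog barks."]
vocab = build_vocab(corpus)
print(encode("The cat runs.", vocab))
```

"cat" never appeared in the corpus, so it becomes the unknown ID. Tokens that are out of vocabulary are one reason real systems prefer subword tokenizers.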

Role and Significance of Audio Inputs

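Audio inputs, such as speech and environmental sounds, widen the spectrum of AI capabilities. Speech recognition converts spoken words into text, while non-speech sounds add context that images and text alone may miss, contributing to a more holistic understanding of the environment.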
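Before audio reaches a model, the waveform is usually cut into short, overlapping frames, and features are computed for each frame. As a minimal sketch, the code below computes the root-mean-square (RMS) energy of each frame for a synthetic signal. The 16 kHz sample rate and the 25 ms window with a 10 ms hop are common speech settings, chosen here as assumptions. Real systems typically go on to compute spectral features such as mel spectrograms.

```python
import math

def frame_rms(samples, frame_size, hop):
    """Split samples into overlapping frames and return the RMS energy of each."""
    energies = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return energies

sample_rate = 16000  # assumed: 16 kHz, common for speech
# Synthetic signal: 0.1 s of silence followed by 0.1 s of a 440 Hz tone
silence = [0.0] * (sample_rate // 10)
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate // 10)]
samples = silence + tone

# 25 ms frames (400 samples) with a 10 ms hop (160 samples)
energies = frame_rms(samples, frame_size=400, hop=160)
print(len(energies), round(energies[0], 3), round(energies[-1], 3))
```

The first frames report zero energy and the last ones report the tone's RMS level, so even this crude feature separates silence from sound.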

Challenges in Multi-Modal Integration

Inter-Modal Variations and Complexities

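One of the primary challenges in Multi-Modal AI is reconciling differences in data formats and structures across modalities. Images arrive as pixel grids, text as sequences of tokens, and audio as waveforms sampled thousands of times per second. Making vision, language, and audio modules work together requires mapping these inputs into compatible representations and aligning them in time.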
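One common way to reconcile these formats is to map each modality's features into a shared embedding space of fixed size. The sketch below uses a hypothetical linear projection per modality. Random weights stand in for the weights a real model would learn. The feature values and the shared dimension of 4 are made up for illustration.

```python
import random

SHARED_DIM = 4  # assumed size of the shared embedding space

def make_projection(in_dim, out_dim, seed):
    """Hypothetical projection layer: an out_dim x in_dim weight matrix.
    Random values stand in for weights that training would learn."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]

def project(weights, vector):
    """Matrix-vector product: maps an in_dim vector to an out_dim vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in weights]

# Made-up per-modality features of different sizes
features = {
    "image": [0.2, 0.7, 0.1, 0.9, 0.4, 0.3],  # e.g. pooled CNN output
    "text": [0.5, 0.1, 0.8],                  # e.g. a sentence embedding
    "audio": [0.3, 0.6, 0.2, 0.1, 0.5],       # e.g. averaged frame features
}

shared = {}
for seed, (name, vec) in enumerate(features.items()):
    weights = make_projection(len(vec), SHARED_DIM, seed)
    shared[name] = project(weights, vec)

for name, vec in shared.items():
    print(name, len(features[name]), "->", len(vec))
```

Once every modality lives in vectors of the same size, the vectors can be compared, concatenated, or attended over by downstream layers.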

Handling Diverse Data Sources

The diversity of data sources, each with its unique characteristics, poses a formidable challenge. Developing models capable of effectively processing and interpreting varied data inputs is a key hurdle.

Ensuring Synchronization Among Modalities

Achieving real-time synchronization among vision, language, and audio components is crucial for coherent and contextually rich output. The challenge lies in minimizing latency and optimizing the flow of information.
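A basic form of synchronization is aligning streams that are sampled at different rates. The sketch below assumes video at 30 frames per second and audio features every 10 ms (100 per second), and it pairs each video frame with the audio frame nearest in time. This nearest-timestamp rule is only one option. Systems may also interpolate between frames or learn the alignment.

```python
import bisect

def align_nearest(query_times, reference_times):
    """For each query timestamp, return the index of the nearest reference timestamp.
    reference_times must be sorted in ascending order."""
    indices = []
    for t in query_times:
        pos = bisect.bisect_left(reference_times, t)
        if pos == 0:
            indices.append(0)
        elif pos == len(reference_times):
            indices.append(len(reference_times) - 1)
        else:
            before, after = reference_times[pos - 1], reference_times[pos]
            # Pick whichever neighbor is closer in time
            indices.append(pos if after - t < t - before else pos - 1)
    return indices

# Assumed rates: video at 30 fps, audio features at 100 per second (timestamps in seconds)
video_times = [i / 30 for i in range(5)]     # 0, 33.3, 66.7, 100, 133.3 ms
audio_times = [i / 100 for i in range(20)]   # 0, 10, 20, ..., 190 ms

print(align_nearest(video_times, audio_times))
```

Because `bisect` performs a binary search, each lookup costs O(log n), which matters when aligning long streams.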

Advancements in Multi-Modal AI

Recent breakthroughs in Multi-Modal AI have showcased the immense potential of integrated systems.

Overview of Recent Breakthroughs

Cutting-edge research has led to the development of models that not only understand individual modalities but also excel in processing information when multiple modalities are involved.

Case Studies Demonstrating Successful Integration

Examples include image-captioning models that describe a picture in context, pairing vision with language, and speech-to-text systems that transcribe spoken language, pairing audio with language. Such cases highlight the successful integration of vision, language, and audio.

Improvements in Model Performance and Accuracy

Continuous refinements in algorithms and model architectures have resulted in significant improvements in performance and accuracy, making Multi-Modal AI more robust and reliable.

Vision in Multi-Modal AI

Convolutional Neural Networks (CNNs) for Visual Data

CNNs, designed for visual data, have become instrumental in extracting meaningful features from images and videos. Their integration into Multi-Modal AI enhances the system’s ability to interpret visual cues.
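After convolution, CNNs commonly downsample feature maps with pooling and then flatten them into a vector, a form that other modules in a multi-modal system can consume. This sketch assumes 2x2 max pooling on a made-up 4x4 feature map. Modern architectures often make other choices, such as global average pooling.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window (stride = size)."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window))  # keep the strongest response in each window
        pooled.append(row)
    return pooled

def flatten(matrix):
    """Concatenate rows into a single feature vector."""
    return [value for row in matrix for value in row]

# Illustrative feature map, e.g. the output of a convolutional layer
feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 3, 2],
    [2, 6, 1, 1],
]

pooled = max_pool2d(feature_map)
vector = flatten(pooled)
print(pooled, vector)
```

Pooling keeps the strongest activation in each region while shrinking the map, so the final vector summarizes which features were present without tracking exactly where they occurred.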

Cross-Modal Connections with Other Modalities

The synergy between vision and other modalities, such as language and audio, opens up new avenues for comprehensive understanding. For instance, a system can interpret a scene visually while also understanding a spoken description of that scene.
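One way to connect vision and language is to embed images and text into a shared vector space and compare them with cosine similarity. Contrastively trained models such as CLIP popularized this approach. The embeddings below are hypothetical three-dimensional vectors written by hand, whereas real encoders produce vectors with hundreds of dimensions. A spoken description could enter the same pipeline after speech recognition converts it to text.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings in a shared space; in practice they would come from
# image and text encoders trained so that matching pairs land close together.
image_embedding = [0.9, 0.1, 0.3]
caption_embeddings = {
    "a dog playing fetch": [0.8, 0.2, 0.4],
    "a bowl of soup": [0.1, 0.9, 0.2],
    "a city skyline at night": [0.2, 0.3, 0.9],
}

scores = {caption: cosine_similarity(image_embedding, emb)
          for caption, emb in caption_embeddings.items()}
best = max(scores, key=scores.get)
print(best, round(scores[best], 3))
```

The same similarity scores can drive retrieval in either direction: finding the best caption for an image, or the best image for a description.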

Applications and Use Cases of Vision Integration

Practical applications abound, from healthcare (medical image analysis) to autonomous vehicles (visual perception for navigation), showcasing the versatility of integrating vision into Multi-Modal AI.

Language Integration in Multi-Modal AI

Natural Language Processing (NLP) and Its Role

NLP, a cornerstone of language understanding, empowers AI systems to process, analyze, and generate human-like text. In Multi-Modal AI, language integration provides context and semantic understanding.

Cross-Modal Language Understanding

The ability to connect language with other modalities enables more nuanced interactions. For instance, a system can understand a question about an image or a sound clip and generate a text answer grounded in what it sees or hears.
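Transformer models widely use cross-attention to connect language to other modalities. Each text token acts as a query and attends over features from another modality, such as image regions. The following is a single-query, single-head sketch with hypothetical vectors. It omits the learned query, key, and value projections a real model would apply.

```python
import math

def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(query, keys, values):
    """Single-query scaled dot-product attention.
    query comes from the text side; keys and values come from image regions."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the value vectors
    context = [sum(w * value[i] for w, value in zip(weights, values))
               for i in range(len(values[0]))]
    return weights, context

# Hypothetical vectors: a query for the word "dog" and keys/values for three regions
query = [1.0, 0.0]
region_keys = [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]]  # dog, grass, sky (illustrative)
region_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

weights, context = cross_attention(query, region_keys, region_values)
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

Most of the attention (about 0.67) lands on the region whose key best matches the query, so the word "dog" picks up mostly that region's visual features.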

Generating Textual Output from Multi-Modal Inputs

The convergence of modalities allows AI models to generate textual output that captures the essence of information extracted from diverse sources, providing a coherent narrative.

Audio Integration in Multi-Modal AI

Importance of Audio Data in AI Models

Audio inputs, including speech, enrich the perceptual capabilities of AI systems. The ability to understand spoken language enhances the overall user experience.

Speech Recognition and Its Integration

Advancements in speech recognition technology enable AI models to transcribe spoken words into text, facilitating seamless integration with language processing modules.
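Speech recognizers differ in how they turn acoustic scores into text. One common approach trains the acoustic model with Connectionist Temporal Classification (CTC), which emits a probability distribution over characters plus a special "blank" symbol for every audio frame. The simplest CTC decoder, greedy decoding, takes the most likely symbol per frame, collapses consecutive repeats, and drops blanks. The alphabet and the per-frame probabilities below are made up for illustration.

```python
BLANK = "_"  # assumed symbol for the CTC blank

def ctc_greedy_decode(frame_probs, alphabet):
    """Greedy CTC decoding: best symbol per frame, collapse repeats, remove blanks.
    frame_probs holds one probability list per audio frame, aligned with alphabet."""
    best = [alphabet[p.index(max(p))] for p in frame_probs]
    decoded = []
    previous = None
    for symbol in best:
        if symbol != previous and symbol != BLANK:
            decoded.append(symbol)
        previous = symbol
    return "".join(decoded)

alphabet = [BLANK, "c", "a", "t"]
# Made-up per-frame probabilities, standing in for an acoustic model's output
frame_probs = [
    [0.1, 0.8, 0.05, 0.05],     # c
    [0.2, 0.7, 0.05, 0.05],     # c again (repeat is collapsed)
    [0.7, 0.1, 0.1, 0.1],       # blank
    [0.1, 0.1, 0.7, 0.1],       # a
    [0.1, 0.05, 0.05, 0.8],     # t
    [0.9, 0.05, 0.025, 0.025],  # blank
]
print(ctc_greedy_decode(frame_probs, alphabet))
```

The blank symbol is what allows genuine double letters: a frame sequence like `c _ c` decodes to `cc`, while `c c` decodes to a single `c`. Better results usually come from beam search combined with a language model, which is where the speech and language modules meet.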

Challenges and Solutions in Processing Audio Information

Background noise and accent variation make audio information hard to process reliably. Deep learning techniques, trained on large and varied audio datasets, have been employed to make audio integration more robust to these conditions.
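A widely used technique for robustness to background noise is data augmentation: noise is mixed into clean training audio at a controlled signal-to-noise ratio (SNR), so the model learns to cope with it. The sketch below assumes a synthetic sine wave as the "clean" signal and Gaussian noise. In practice, recorded noise such as traffic or crowd sounds is common. The helper name `add_noise_at_snr` is hypothetical.

```python
import math
import random

def rms(signal):
    """Root-mean-square amplitude of a signal."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))

def add_noise_at_snr(clean, noise, snr_db):
    """Hypothetical helper: scale `noise` so the mix has the requested SNR in dB,
    where SNR_dB = 20 * log10(rms(clean) / rms(scaled_noise)), then add it."""
    target_noise_rms = rms(clean) / (10 ** (snr_db / 20))
    scale = target_noise_rms / rms(noise)
    return [c + scale * n for c, n in zip(clean, noise)]

sample_rate = 16000
rng = random.Random(42)  # fixed seed for reproducibility
clean = [0.5 * math.sin(2 * math.pi * 220 * t / sample_rate) for t in range(sample_rate)]
noise = [rng.gauss(0.0, 1.0) for _ in range(sample_rate)]

noisy = add_noise_at_snr(clean, noise, snr_db=10)

# Verify: the added component is the scaled noise, so its SNR should match the target
residual = [x - c for x, c in zip(noisy, clean)]
achieved_snr = 20 * math.log10(rms(clean) / rms(residual))
print(f"achieved SNR: {achieved_snr:.2f} dB")
```

During training, the SNR is typically drawn at random from a range for each example, so the model sees both mildly and heavily corrupted audio.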

Synergy of Vision, Language, and Audio

Interconnectedness and Mutual Influence

The true potential of Multi-Modal AI emerges when the modalities synergize, influencing and enhancing each other. A model that can both describe an image in natural language and understand spoken queries exemplifies this synergy.
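A simple way to let modalities influence one another's conclusions is late fusion: each modality produces its own prediction, and the predictions are combined by a weighted average. The labels, probabilities, and weights below are invented for illustration. The sketch assumes that every modality scores the same set of labels. Many systems instead fuse earlier, at the feature level, or use attention.

```python
def late_fusion(predictions, weights):
    """Weighted average of per-modality class-probability dicts.
    Assumes every modality scores the same labels."""
    total_weight = sum(weights[m] for m in predictions)
    labels = next(iter(predictions.values())).keys()
    return {label: sum(weights[m] * predictions[m][label] for m in predictions) / total_weight
            for label in labels}

# Invented per-modality predictions for a single scene
predictions = {
    "vision": {"dog": 0.45, "cat": 0.55},  # blurry image, slight lean to "cat"
    "audio": {"dog": 0.9, "cat": 0.1},     # a bark was heard
    "text": {"dog": 0.5, "cat": 0.5},      # caption is ambiguous
}
# Assumed trust placed in each modality
weights = {"vision": 0.5, "audio": 0.3, "text": 0.2}

fused = late_fusion(predictions, weights)
print(max(fused, key=fused.get), fused)
```

Here vision slightly favors "cat", but the confident audio cue pulls the fused prediction to "dog" (about 0.595 versus 0.405), a small example of one modality correcting another.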

Achieving a Holistic Understanding Through Multi-Modal Integration

The holistic understanding achieved through Multi-Modal integration surpasses the capabilities of individual modalities. It paves the way for more comprehensive AI systems that mimic human perception.

Real-World Applications and Benefits

From virtual assistants capable of understanding and responding to visual and verbal cues to augmented reality applications enriching user experiences, the real-world applications of Multi-Modal AI are vast and impactful.

Future Trends in Multi-Modal AI

Emerging Technologies and Methodologies

Ongoing research is exploring emerging technologies, including more advanced neural architectures and innovative training strategies, to further enhance Multi-Modal AI capabilities.

Potential Breakthroughs on the Horizon

Anticipated breakthroughs include an enhanced understanding of contextual nuances, improved real-time processing, and more effective integration of emerging modalities.

Implications for Various Industries and Sectors

As Multi-Modal AI matures, its implications across industries, from healthcare and education to entertainment and business, will continue to redefine the way we interact with technology.

A Visionary Culmination

In conclusion, Multi-Modal AI represents a paradigm shift in artificial intelligence, weaving together vision, language, and audio for a more nuanced and comprehensive understanding of the world. As the field progresses, the potential for transformative applications and groundbreaking innovations becomes increasingly evident.

Frequently Asked Questions

How does Multi-Modal AI benefit industries like healthcare?

Multi-modal AI in healthcare enhances diagnostic capabilities by integrating visual data from medical imaging, textual information from patient records, and potentially even audio cues from patient interactions. This holistic approach can lead to more accurate and timely diagnoses.

Can you provide an example of successful Multi-Modal AI integration in autonomous vehicles?

Certainly! Some autonomous vehicles use Multi-Modal AI to process visual data from cameras, understand spoken instructions from passengers, and analyze sounds from the surrounding environment. Integrating these inputs contributes to a more comprehensive perception system and, in turn, safer navigation.

Are there any privacy concerns associated with the integration of Multi-Modal AI?

Privacy concerns do arise, especially when dealing with sensitive data such as medical records or audio conversations. Ensuring robust data anonymization and encryption measures is crucial to address these concerns and maintain ethical standards.

How can businesses leverage Multi-Modal AI for improved customer experiences?

Businesses can enhance customer experiences by implementing Multi-Modal AI in virtual assistants and chatbots. These systems can understand and respond to customer queries in a more natural and context-aware manner, improving overall user satisfaction.

What are the key considerations for developers working on Multi-Modal AI projects?

Developers should focus on seamless integration of modalities, effective synchronization, and ongoing model refinement. Additionally, staying updated on the latest advancements in individual modalities, such as computer vision or NLP, is crucial for building cutting-edge Multi-Modal AI systems.